This guide describes how to deploy a Deckhouse Kubernetes Platform cluster in a private environment with no direct access to the DKP container image registry (registry.deckhouse.io) and to external deb/rpm package repositories used on nodes running supported operating systems.

Note that installing DKP in a private environment is available in the following editions: SE, SE+, EE, CSE Lite (1.67), CSE Pro (1.67).

Private environment specifics

Deploying in a private environment is almost the same as deploying on bare metal.

Key specifics:

- Internet access for applications deployed in the private environment is provided through a proxy server, whose parameters must be specified in the cluster configuration.

- A container registry with DKP container images is deployed separately and must be reachable from within the private environment. The cluster is configured to use it and is granted the required access permissions.

All interactions with external resources are performed via a dedicated physical server or virtual machine called a Bastion host. The container registry and proxy server are deployed on the Bastion host, and all cluster management operations are performed from it.

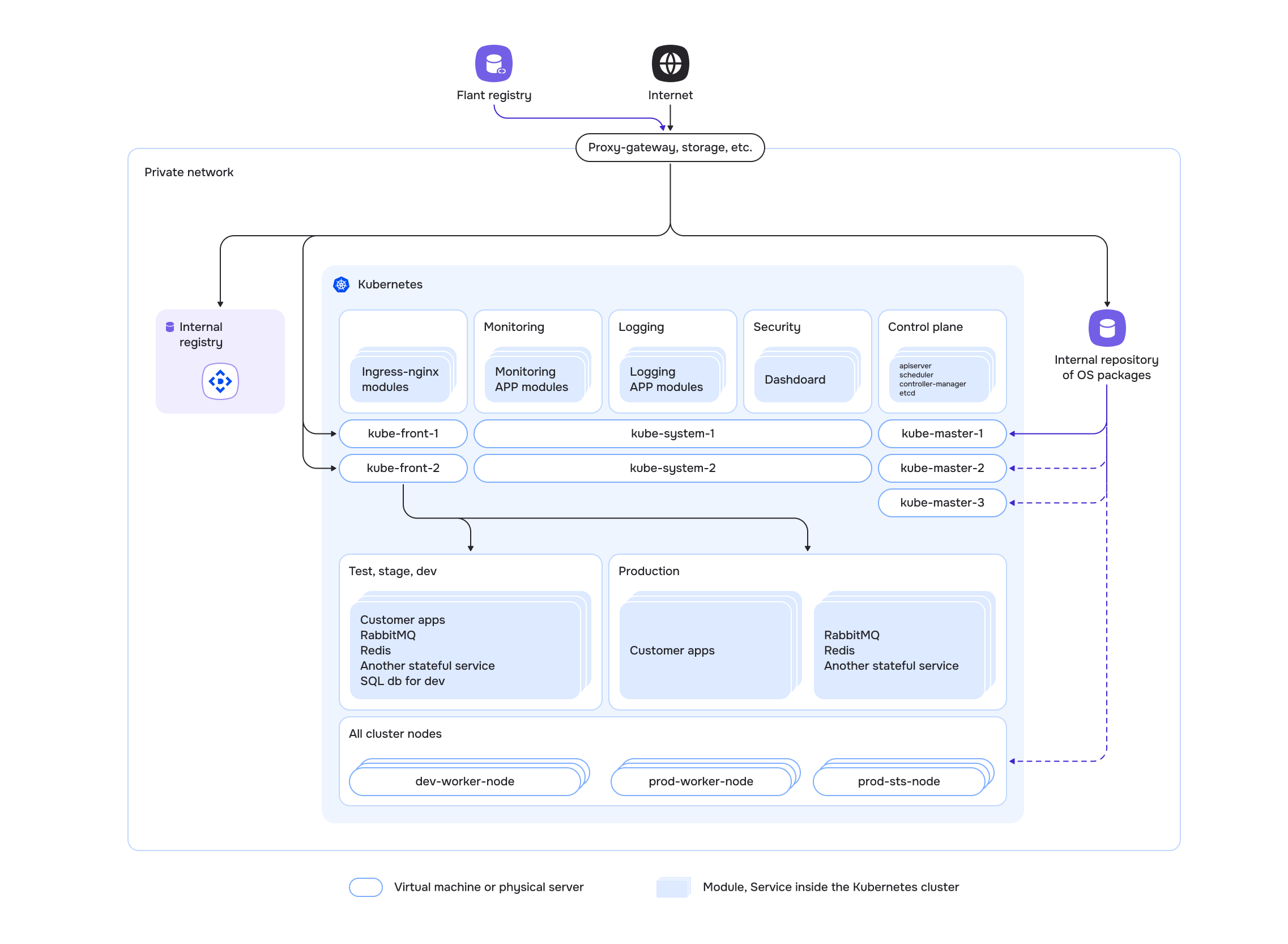

Overall private environment diagram:

The diagram also shows an internal OS package repository. It is required to install curl on the future cluster nodes if access to the official repositories is not available even through the proxy server.

Infrastructure selection

This guide describes deploying a cluster in a private environment consisting of one master node and one worker node.

To perform the deployment, you will need:

- a personal computer from which the operations will be performed;

- a dedicated physical server or virtual machine (Bastion host) where the container registry and related components will be deployed;

- two physical servers or two virtual machines for the cluster nodes.

Server requirements:

- Bastion: at least 4 CPU cores, 8 GB RAM, and 150 GB on fast storage. This capacity is required because the Bastion host stores all DKP images needed for installation. Before being pushed to the private registry, the images are pulled from the public DKP registry to the Bastion host.

- Cluster nodes: size the resources of the future cluster nodes according to the expected workload. The minimum recommended configuration is 4 CPU cores, 8 GB RAM, and 60 GB on fast storage (400+ IOPS) per node.

Preparing a private container registry

DKP supports only the Bearer token authentication scheme for container registries.

Compatibility has been tested and is guaranteed for the following container registries: Nexus, Harbor, Artifactory, Docker Registry, and Quay.

Installing Harbor

In this guide, Harbor is used as the private registry. It supports policy configuration and role-based access control (RBAC), scans images for vulnerabilities, and allows you to mark trusted artifacts. Harbor is a CNCF project.

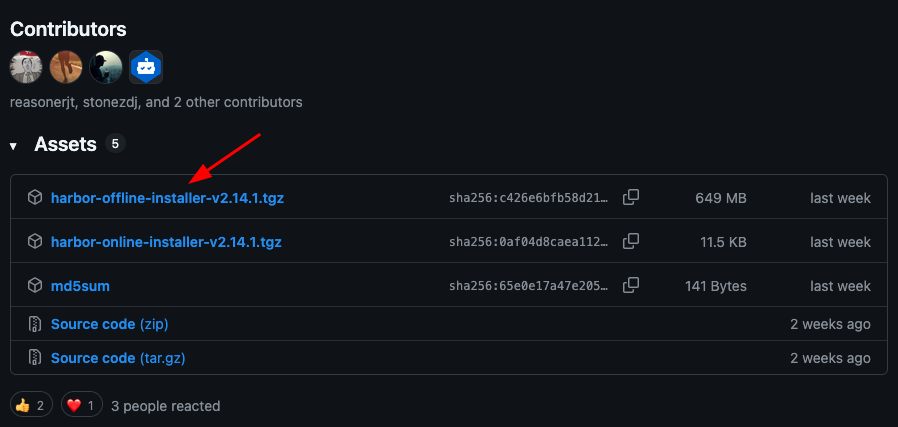

Install the latest Harbor release from the project’s GitHub releases page. Download the installer archive from the desired release, selecting the asset with harbor-offline-installer in its name.

Copy the download URL. For example, for harbor-offline-installer-v2.14.1.tgz it will look like this: https://github.com/goharbor/harbor/releases/download/v2.14.1/harbor-offline-installer-v2.14.1.tgz.

Connect to the Bastion host via SSH and download the archive using any convenient method.
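For example, assuming curl is installed on the Bastion host and GitHub is reachable from it, a small helper can build the download URL from a release tag (following the URL pattern shown above) and fetch the archive. This is a sketch; the function names are hypothetical and not part of Harbor.

```shell
# Hypothetical helper (bash): prints the Harbor offline installer URL for a
# release tag, following the GitHub releases URL pattern shown above.
harbor_installer_url() {
  local version="$1"   # release tag, for example v2.14.1
  if [ -z "$version" ]; then
    echo "usage: harbor_installer_url <version-tag>" >&2
    return 2
  fi
  printf 'https://github.com/goharbor/harbor/releases/download/%s/harbor-offline-installer-%s.tgz\n' \
    "$version" "$version"
}

# Hypothetical helper: downloads the installer into the current directory.
# -f fails on HTTP errors, -L follows GitHub's redirects,
# -O keeps the remote file name (harbor-offline-installer-<tag>.tgz).
download_harbor_installer() {
  local url
  url="$(harbor_installer_url "$1")" || return
  curl -fLO "$url"
}
```

Usage on the Bastion host: download_harbor_installer v2.14.1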

Extract the downloaded archive (specify the archive name):

tar -zxf ./harbor-offline-installer-v2.14.1.tgz

The extracted harbor directory contains the files required for installation.

Before deploying the registry, generate a self-signed TLS certificate.

Due to access restrictions in a private environment, it is not possible to obtain certificates from services such as Let’s Encrypt, since the service will not be able to perform the validation required to issue a certificate.

There are several ways to generate certificates. This guide describes one of them. If needed, use any other suitable approach or provide an existing certificate.

Create the certs directory inside the harbor directory and change to it; the certificate files below are created there:

cd harbor/
mkdir certs
cd certs

Generate the private key and the self-signed certificate of your own certificate authority (CA):

openssl ecparam -name prime256v1 -genkey -out ca.key
openssl req -x509 -new -nodes -sha512 -days 3650 -subj "/C=US/ST=California/L=SanFrancisco/O=example/OU=Personal/CN=myca.local" -key ca.key -out ca.crt

Generate a private key, a certificate signing request (CSR), and a certificate extensions file for the internal domain name harbor.local, so that the registry on the Bastion host can be accessed over TLS from within the private network:

openssl ecparam -name prime256v1 -genkey -out harbor.local.key
openssl req -sha512 -new -subj "/C=US/ST=California/L=SanFrancisco/O=example/OU=Personal/CN=harbor.local" -key harbor.local.key -out harbor.local.csr
cat > v3.ext <<-EOF
authorityKeyIdentifier=keyid,issuer
basicConstraints=CA:FALSE
keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names

[alt_names]
IP.1=<INTERNAL_IP_ADDRESS>
DNS.1=harbor.local
EOF

Do not forget to replace <INTERNAL_IP_ADDRESS> with the Bastion host’s internal IP address. This address will be used to access the container registry from within the private network, and the harbor.local domain name is also associated with it.

Sign the CSR with your CA, then convert the certificate to the .cert extension that Docker expects (Docker treats .crt files as CA certificates and .cert files as client certificates):

openssl x509 -req -sha512 -days 3650 -extfile v3.ext -CA ca.crt -CAkey ca.key -CAcreateserial -in harbor.local.csr -out harbor.local.crt
openssl x509 -inform PEM -in harbor.local.crt -out harbor.local.cert

Verify that all certificates were created successfully:

ls -la
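ls -la only shows that the files exist. To also check that harbor.local.crt is signed by your CA and lists the expected subject alternative names, you can combine openssl verify with the certificate's text dump. A sketch, assuming the file names used above; the function name is hypothetical:

```shell
# Hypothetical helper (bash): sanity-checks the Harbor certificate generated above.
#   $1 - directory containing ca.crt and harbor.local.crt (default: current directory)
#   $2 - optional internal IP address that must appear in subjectAltName
check_harbor_cert() {
  local dir="${1:-.}" ip="${2:-}" text
  # 1. The server certificate must chain up to the self-signed CA.
  if ! openssl verify -CAfile "$dir/ca.crt" "$dir/harbor.local.crt" >/dev/null; then
    echo "harbor.local.crt is not signed by ca.crt" >&2
    return 1
  fi
  text="$(openssl x509 -in "$dir/harbor.local.crt" -noout -text)" || return 1
  # 2. subjectAltName must contain the registry's DNS name as a whole entry.
  if ! grep -oE 'DNS:[^,[:space:]]+' <<<"$text" | grep -qxF 'DNS:harbor.local'; then
    echo "DNS:harbor.local is missing from subjectAltName" >&2
    return 1
  fi
  # 3. If an IP address was given, it must be listed as well.
  if [ -n "$ip" ] && ! grep -oE 'IP Address:[0-9.]+' <<<"$text" | grep -qxF "IP Address:$ip"; then
    echo "IP Address:$ip is missing from subjectAltName" >&2
    return 1
  fi
  echo "OK"
}
```

Run it from the directory that contains the certificates, for example: check_harbor_cert . <INTERNAL_IP_ADDRESS>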

Next, configure Docker to work with the private container registry over TLS. To do this, create the harbor.local directory under /etc/docker/certs.d/:

sudo mkdir -p /etc/docker/certs.d/harbor.local

The -p option tells mkdir to create parent directories if they do not exist (in this case, the certs.d directory).

Copy the generated certificates into it (writing to /etc/docker requires root privileges):

sudo cp ca.crt /etc/docker/certs.d/harbor.local/
sudo cp harbor.local.cert /etc/docker/certs.d/harbor.local/
sudo cp harbor.local.key /etc/docker/certs.d/harbor.local/

These certificates will be used when accessing the registry via the harbor.local domain name.

Copy the configuration file template that comes with the installer:

cp harbor.yml.tmpl harbor.yml

Update the following parameters in harbor.yml:

- hostname: set to harbor.local (the certificates were generated for this name);

- certificate: specify the path to the generated certificate in the certs directory (for example, /home/ubuntu/harbor/certs/harbor.local.crt);

- private_key: specify the path to the private key (for example, /home/ubuntu/harbor/certs/harbor.local.key);

- harbor_admin_password: set a password for accessing the web UI.

Save the file.
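If you prefer to script this edit instead of using a text editor, a sed-based helper can set the four parameters. This is a sketch that assumes the layout of harbor.yml.tmpl (hostname and harbor_admin_password at the top level, certificate and private_key indented under https, with no other uncommented lines using those keys); the function name is hypothetical, and values containing |, & or \ would have to be escaped for sed first.

```shell
# Hypothetical helper (bash, GNU sed): sets hostname, certificate, private_key
# and harbor_admin_password in harbor.yml in place.
#   $1 - path to harbor.yml, $2 - hostname, $3 - certificate path,
#   $4 - private key path,   $5 - admin password
set_harbor_params() {
  local file="$1" host="$2" cert="$3" key="$4" password="$5"
  sed -i -E \
    -e "s|^hostname:.*|hostname: ${host}|" \
    -e "s|^([[:space:]]+)certificate:.*|\1certificate: ${cert}|" \
    -e "s|^([[:space:]]+)private_key:.*|\1private_key: ${key}|" \
    -e "s|^harbor_admin_password:.*|harbor_admin_password: ${password}|" \
    "$file"
}
```

Afterwards, review the result, for example with grep -nE '^(hostname|harbor_admin_password):|^[[:space:]]+(certificate|private_key):' harbor.yml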

Install Docker and the Docker Compose plugin on the Bastion host.

Run the installation script:

./install.sh

Harbor installation will start: the required images will be prepared and the containers will be started.

Verify that Harbor is running successfully:

docker ps

Add an entry to /etc/hosts on the Bastion host that maps the harbor.local domain name to the loopback address, so that you can access Harbor by this name from the Bastion host itself:

127.0.0.1 localhost harbor.local
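Appending such lines by hand (or from a script) more than once leaves duplicate entries. A minimal idempotent sketch is shown below; the function name is hypothetical, and it writes a separate line such as 127.0.0.1 harbor.local, which resolves the same way as adding harbor.local to the existing localhost line.

```shell
# Hypothetical helper (bash): appends "<ip> <names...>" to a hosts file unless
# the first name is already mapped on a non-comment line. Run as root for /etc/hosts.
#   $1 - hosts file, $2 - IP address, $3... - host names
add_hosts_entry() {
  local file="$1" ip="$2"
  shift 2
  # awk exits 0 if the name appears as a host name field (field 2 or later).
  if awk -v n="$1" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                    END { exit !found }' "$file" 2>/dev/null; then
    echo "$1 is already present in $file"
    return 0
  fi
  printf '%s %s\n' "$ip" "$*" >> "$file"
}
```

For example, as root on the Bastion host: add_hosts_entry /etc/hosts 127.0.0.1 harbor.local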

In some cloud providers (for example, Yandex Cloud), changes to /etc/hosts may be reverted after a virtual machine reboot. In that case, the beginning of /etc/hosts typically contains a note like this:

# Your system has configured 'manage_etc_hosts' as True.
# As a result, if you wish for changes to this file to persist
# then you will need to either
# a.) make changes to the master file in /etc/cloud/templates/hosts.debian.tmpl
# b.) change or remove the value of 'manage_etc_hosts' in
#     /etc/cloud/cloud.cfg or cloud-config from user-data

If your provider uses the same mechanism, apply the corresponding changes to the template file referenced in the comment so that the settings persist after reboot.

Harbor installation is now complete! 🎉

Configuring Harbor

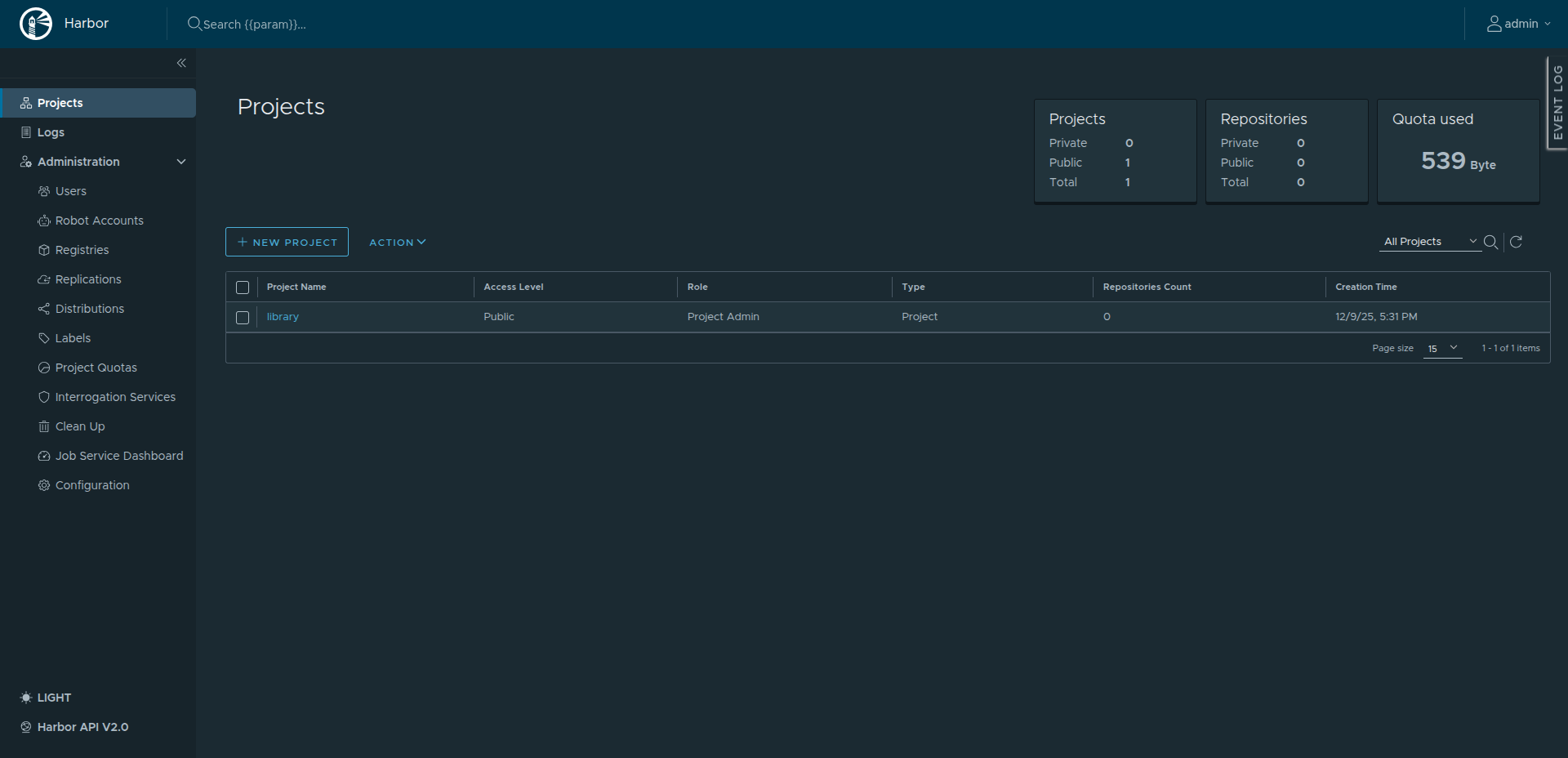

Create a project and a user that will be used to work with this project.

Open the Harbor web UI at https://harbor.local.

To access Harbor by the harbor.local domain name from your workstation, add a corresponding entry to /etc/hosts and point it to the Bastion host IP address.

To sign in, use the username and password specified in the harbor.yml configuration file.

Your browser may warn about the self-signed certificate and mark the connection as “not secure”. In a private environment this is expected and acceptable. If needed, add the certificate to the trusted certificate store of your browser or operating system to remove the warning.

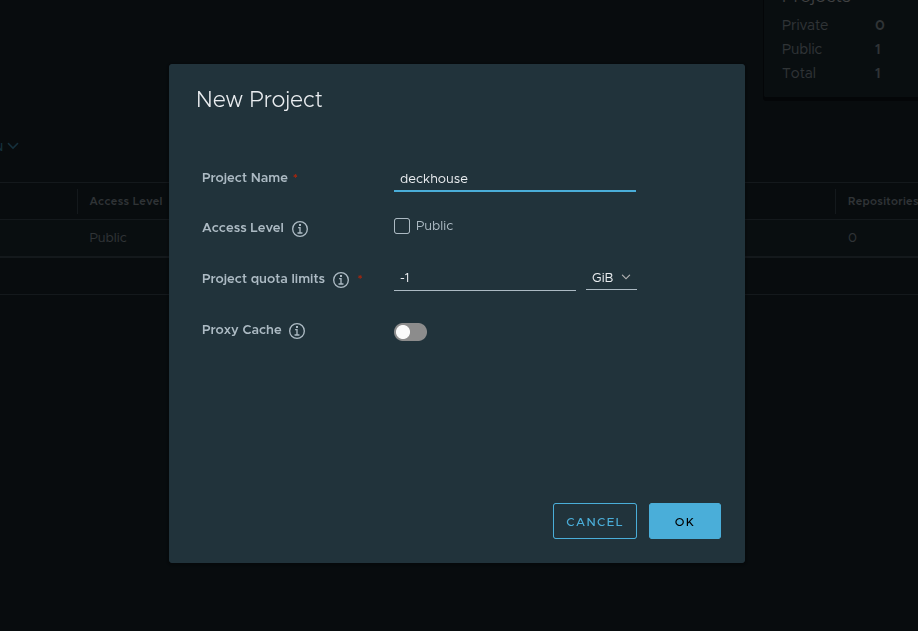

Create a new project. Click New Project and enter deckhouse as the project name. Leave the remaining settings unchanged.

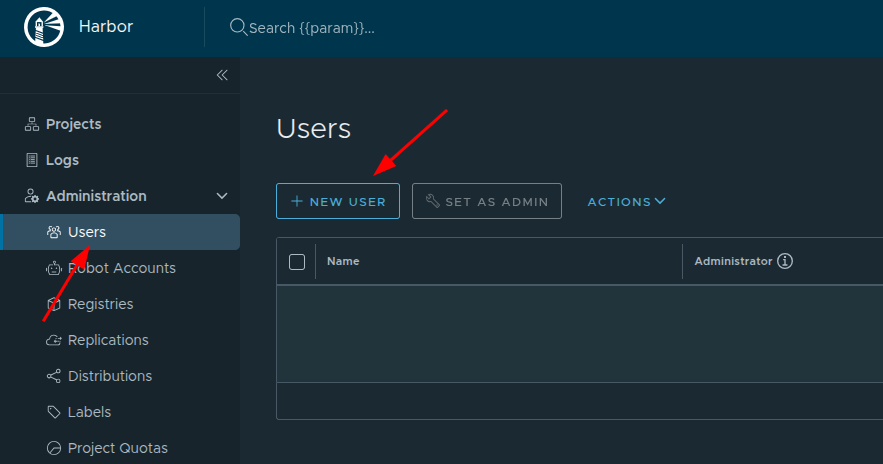

Create a new user for this project. In the left menu, go to Users and click New User:

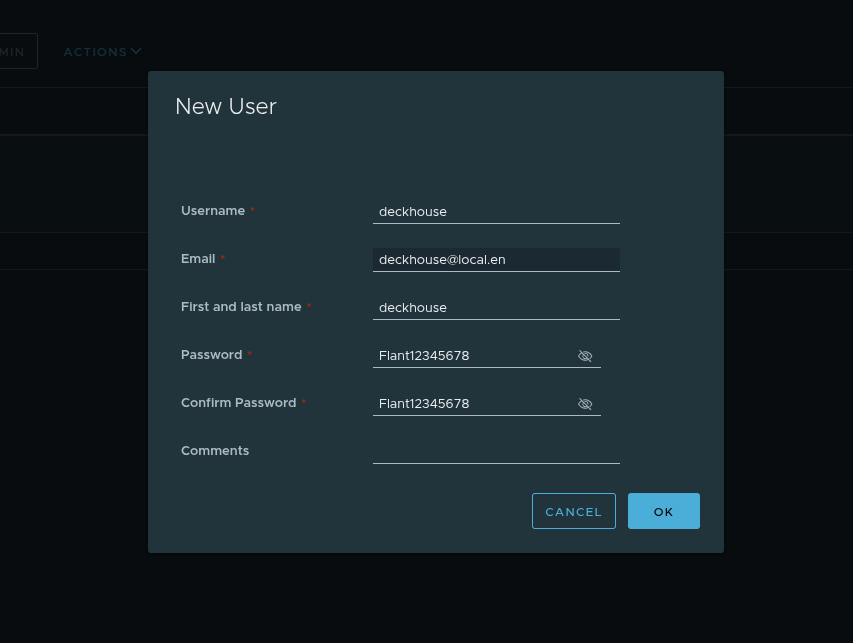

Specify the username, email address, and password:

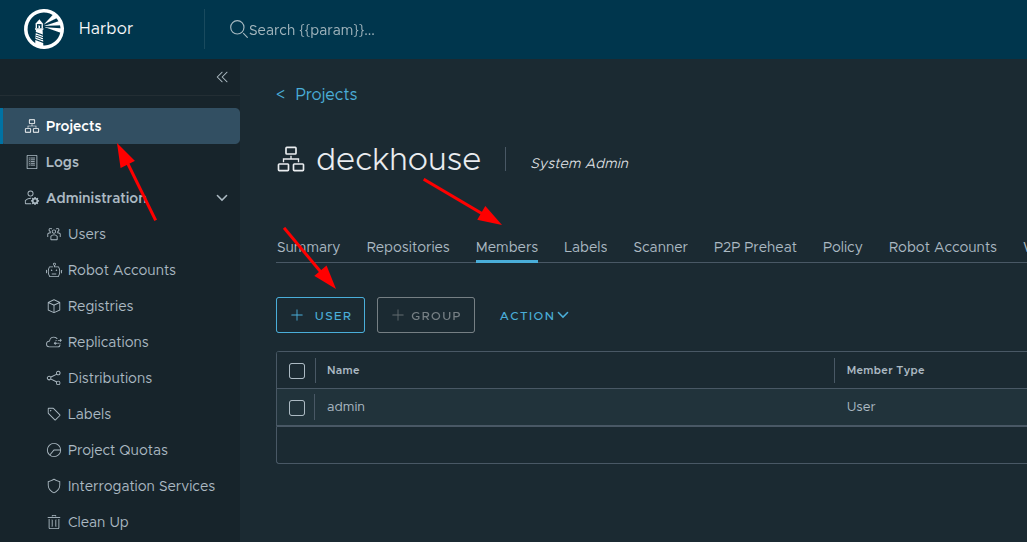

Add the created user to the deckhouse project: go back to Projects, open the deckhouse project, then open the Members tab and click User to add a member.

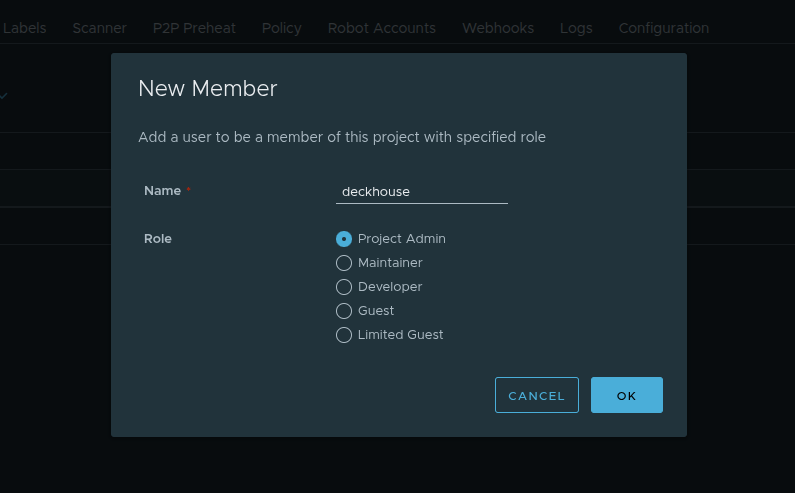

Keep the default role: Project Admin.

Harbor configuration is now complete! 🎉

Copying DKP images to a private container registry

The next step is to copy DKP component images from the public Deckhouse Kubernetes Platform registry to Harbor.

To proceed with the steps in this section, you need the Deckhouse CLI utility. Install it on the Bastion host according to the documentation.

Downloading images takes a significant amount of time. To avoid losing progress if the SSH connection is interrupted, run the commands in a tmux or screen session. If the connection drops, you can reattach to the session and continue without starting over. Both utilities are typically available in Linux distribution repositories and can be installed using the package manager.
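Because the images are first stored on the Bastion host, it can help to check free space in the target directory before starting the download. A sketch using POSIX df output; the function name is hypothetical, and the threshold is your choice (the 150 GB Bastion storage figure above is a conservative bound, since the actual archive size depends on the edition and versions).

```shell
# Hypothetical helper (bash): succeeds if the filesystem holding a directory
# has at least the given number of free GiB.
#   $1 - directory, $2 - required free space in GiB
check_free_space_gb() {
  local dir="$1" need_gb="$2" avail_gb
  # POSIX "df -P -k": the 4th column of the 2nd line is the available space in KiB.
  avail_gb="$(df -Pk "$dir" | awk 'NR == 2 { print int($4 / 1024 / 1024) }')"
  [ -n "$avail_gb" ] || return 2
  if [ "$avail_gb" -lt "$need_gb" ]; then
    echo "only ${avail_gb} GiB free in $dir, ${need_gb} GiB required" >&2
    return 1
  fi
  echo "${avail_gb} GiB free in $dir"
}
```

For example: check_free_space_gb "$(pwd)" 150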

Run the following command to download all required images and pack them into the d8.tar archive. Specify your license key and the DKP edition (for example, se-plus for Standard Edition+, ee for Enterprise Edition, and so on):

d8 mirror pull --source="registry.deckhouse.io/deckhouse/<DKP_EDITION>" --license="<LICENSE_KEY>" $(pwd)/d8.tar

Depending on your Internet connection speed, the process may take 30 to 40 minutes.

Verify that the archive has been created:

$ ls -lh

total 650M

drwxr-xr-x 2 ubuntu ubuntu 4.0K Dec 11 15:08 d8.tar

Push the downloaded images to the private registry (specify the DKP edition and the credentials of the user created in Harbor):

d8 mirror push $(pwd)/d8.tar 'harbor.local:443/deckhouse/<DKP_EDITION>' --registry-login='deckhouse' --registry-password='<PASSWORD>' --tls-skip-verify

The --tls-skip-verify flag disables verification of the registry’s TLS certificate, which is needed here because the certificate is self-signed.

The archive will be unpacked and the images will be pushed to the registry. This step is usually faster than downloading because it operates on a local archive. It typically takes about 15 minutes.

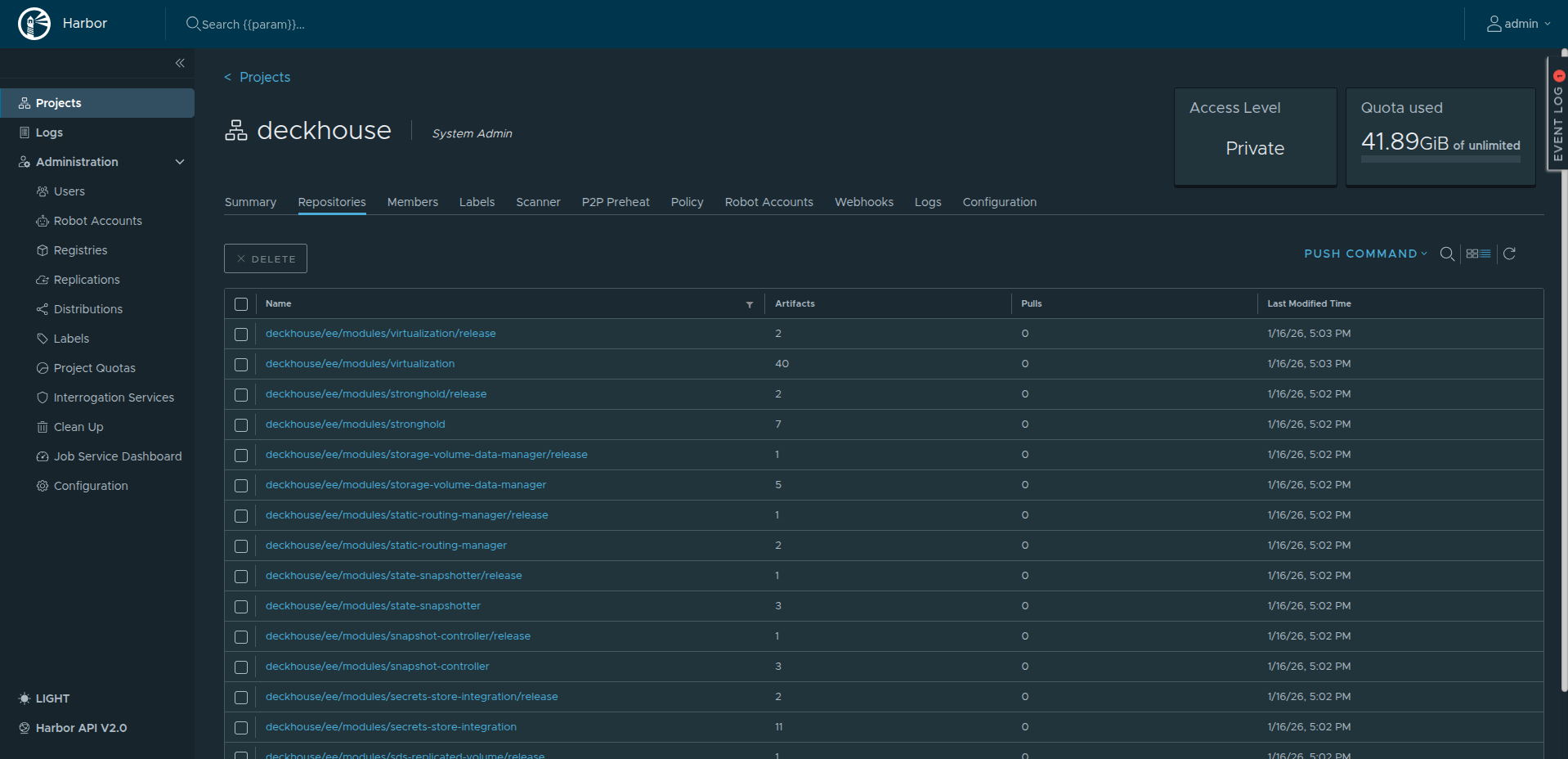

To verify that the images have been pushed, open the deckhouse project in the Harbor web UI.

The images are now available and ready to use! 🎉

Installing a proxy server

The future cluster nodes in the private environment need access to external package repositories to install the packages required for DKP. To provide this access, deploy a proxy server on the Bastion host.

You can use any proxy server that meets your requirements. In this example, we will use Squid.

Deploy Squid on the Bastion host as a container:

docker run -d --name squid -p 3128:3128 ubuntu/squid

Verify that the container is running:

docker ps

Example output:

059b21fddbd2 ubuntu/squid "entrypoint.sh -f /e…" About a minute ago Up About a minute 0.0.0.0:3128->3128/tcp, [::]:3128->3128/tcp squid

The list of running containers must include a container named squid.

Signing in to the registry to run the installer

Sign in to the Harbor registry so that Docker can pull the dhctl installer image from it:

docker login harbor.local

Preparing VMs for the future nodes

VM requirements

During installation, ContainerdV2 is used as the default container runtime on cluster nodes. To use it, the nodes must meet the following requirements:

- CgroupsV2 support;

- systemd version 244 or later;

- support for the erofs kernel module.

Some distributions do not meet these requirements. In this case, you must bring the OS on the nodes into compliance before installing Deckhouse Kubernetes Platform. For details, see the documentation.

Servers intended for future cluster nodes must meet the following requirements:

- at least 4 CPU cores;

- at least 8 GB RAM;

- at least 60 GB of disk space on fast storage (400+ IOPS);

- a supported OS;

- Linux kernel version 5.8 or later;

- a unique hostname across all cluster servers (physical servers and virtual machines);

- one of the following package managers available: apt/apt-get, yum, or rpm.
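The requirements listed above can be checked on each prepared server with a short script. This is a sketch with hypothetical function names; it relies on sort -V, stat -f and systemctl being available, and it does not cover RAM, disk size, or hostname uniqueness.

```shell
# Hypothetical helpers (bash) that check a node against the requirements above.

# version_ge A B: succeeds if version A >= version B (relies on sort -V).
version_ge() {
  [ "$(printf '%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# _check DESCRIPTION COMMAND...: runs COMMAND quietly and prints OK or FAIL.
_check() {
  local description="$1"
  shift
  if "$@" >/dev/null 2>&1; then
    echo "OK   $description"
  else
    echo "FAIL $description"
    return 1
  fi
}

check_node_requirements() {
  local failed=0 kernel systemd_version
  kernel="$(uname -r)"
  # "6.8.0-45-generic" -> "6.8.0"
  _check "Linux kernel ${kernel} >= 5.8" version_ge "${kernel%%-*}" 5.8 || failed=1

  # The first line of "systemctl --version" looks like "systemd 255 (255.4-1ubuntu8)".
  systemd_version="$(systemctl --version 2>/dev/null | awk 'NR == 1 { print $2 }')"
  _check "systemd ${systemd_version:-not found} >= 244" version_ge "${systemd_version:-0}" 244 || failed=1

  # On a cgroup v2 host, /sys/fs/cgroup is a cgroup2fs mount.
  _check "cgroup v2" test "$(stat -fc %T /sys/fs/cgroup 2>/dev/null)" = cgroup2fs || failed=1

  # erofs is either already registered with the kernel or available as a module.
  _check "erofs kernel module" sh -c 'grep -qw erofs /proc/filesystems || modinfo erofs' || failed=1

  _check "package manager (apt-get, yum or rpm)" \
    sh -c 'command -v apt-get || command -v yum || command -v rpm' || failed=1
  _check "at least 4 CPU cores" test "$(nproc)" -ge 4 || failed=1
  return "$failed"
}
```

Run check_node_requirements on each server; a non-zero exit status means at least one line reports FAIL.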

For proper resource sizing, refer to the production preparation recommendations and the hardware requirements guide for selecting node types, the number of nodes, and node resources based on your expected workload and operational requirements.

Configuring access to the Bastion host

To allow the servers where the master and worker nodes will be deployed to access the private registry and the proxy server, configure them to resolve the harbor.local and proxy.local domain names to the Bastion host’s internal IP address in the private network.

To do this, connect to each server one by one and add an entry to /etc/hosts (and, if necessary, also to the cloud template file if your provider manages /etc/hosts).

<INTERNAL-IP-ADDRESS> harbor.local proxy.local

Do not forget to replace <INTERNAL-IP-ADDRESS> with the Bastion host’s actual internal IP address.
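To confirm from a node that the names resolve and that Harbor (port 443) and Squid (port 3128) accept connections, a TCP check is enough. A sketch using bash's /dev/tcp redirection and coreutils timeout; the function name is hypothetical.

```shell
# Hypothetical helper (bash): succeeds if a TCP connection to host:port can be
# opened within the timeout. Name resolution goes through /etc/hosts as usual.
#   $1 - host, $2 - port, $3 - timeout in seconds (default 5)
check_tcp() {
  local host="$1" port="$2" secs="${3:-5}"
  if [ -z "$host" ] || [ -z "$port" ]; then
    echo "usage: check_tcp <host> <port> [timeout-seconds]" >&2
    return 2
  fi
  # The inner bash receives host and port as $0 and $1.
  if timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null; then
    echo "$host:$port is reachable"
  else
    echo "$host:$port is NOT reachable" >&2
    return 1
  fi
}
```

For example, on each node: check_tcp harbor.local 443 && check_tcp proxy.local 3128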

Creating a user for the master node

To install DKP, create a user on the future master node that will be used to connect to the node and perform the platform installation.

Run the commands as root (substitute the public part of your SSH key):

useradd deckhouse -m -s /bin/bash -G sudo
echo 'deckhouse ALL=(ALL) NOPASSWD: ALL' | sudo EDITOR='tee -a' visudo
mkdir /home/deckhouse/.ssh
export KEY='ssh-ed25519 AAAAC3NzaC1lZDI1NTE5...'
echo "$KEY" >> /home/deckhouse/.ssh/authorized_keys
chown -R deckhouse:deckhouse /home/deckhouse
chmod 700 /home/deckhouse/.ssh
chmod 600 /home/deckhouse/.ssh/authorized_keys

As a result of these commands:

- a new deckhouse user is created and added to the sudo group;

- passwordless privilege escalation is configured;

- the public SSH key is added so you can log in to the server as this user.

Verify that you can connect as the new user:

ssh -J ubuntu@<BASTION_IP> deckhouse@<NODE_IP>

If the login succeeds, the user has been created correctly.

Preparing the configuration file

The configuration file for deployment in a private environment differs from the configuration used for bare metal installation in a few parameters. Take the config.yml file from step 4 of the bare metal installation guide and make the following changes:

- In the ClusterConfiguration section, add the proxy server settings:

# Proxy server settings.
proxy:
  # Squid listens on port 3128 as a plain HTTP proxy, so the http:// scheme is used for HTTPS traffic too.
  httpProxy: http://proxy.local:3128
  httpsProxy: http://proxy.local:3128
  noProxy: ["harbor.local", "proxy.local", "10.128.0.8", "10.128.0.32", "10.128.0.18"]

  The following parameters are specified here:

  - the HTTP and HTTPS proxy server addresses deployed on the Bastion host;

  - the list of domains and IP addresses that will not be routed through the proxy server (internal domain names and internal IP addresses of all servers).

- In the InitConfiguration section, add the parameters required to access the registry:

deckhouse:
  # Docker registry address that hosts Deckhouse images (specify the DKP edition).
  imagesRepo: harbor.local/deckhouse/<DKP_EDITION>
  # Base64-encoded Docker registry credentials on a single line.
  # You can obtain it by running: `base64 -w0 ~/.docker/config.json`.
  registryDockerCfg: <DOCKER_CFG_BASE64>
  # Registry access scheme (HTTP or HTTPS).
  registryScheme: HTTPS
  # The root CA certificate created earlier.
  # You can obtain it by running: `cat harbor/certs/ca.crt`.
  registryCA: |
    -----BEGIN CERTIFICATE-----
    ...
    -----END CERTIFICATE-----

- In the deckhouse ModuleConfig, set the releaseChannel parameter to Stable to use the stable update channel.

- In the global ModuleConfig, configure the use of self-signed certificates for cluster components and specify the domain name template for system applications using the publicDomainTemplate parameter:

settings:
  modules:
    # A template used to construct the addresses of system applications in the cluster.
    # For example, with %s.example.com, Grafana will be available at 'grafana.example.com'.
    # The domain MUST NOT match the value specified in the clusterDomain parameter of the ClusterConfiguration resource.
    # You can set your own value right away, or follow the guide and change it after installation.
    publicDomainTemplate: "%s.test.local"
    # The HTTPS implementation method used by Deckhouse modules.
    https:
      certManager:
        # Use self-signed certificates for Deckhouse modules.
        clusterIssuerName: selfsigned

- In the user-authn ModuleConfig, set the dexCAMode parameter to FromIngressSecret:

settings:
  controlPlaneConfigurator:
    dexCAMode: FromIngressSecret

- Enable and configure the cert-manager module and disable the use of Let’s Encrypt:

apiVersion: deckhouse.io/v1alpha1
kind: ModuleConfig
metadata:
  name: cert-manager
spec:
  version: 1
  enabled: true
  settings:
    disableLetsencrypt: true

- In the internalNetworkCIDRs parameter of StaticClusterConfiguration, specify the subnet for the cluster nodes’ internal IP addresses. For example:

internalNetworkCIDRs:
  - 10.128.0.0/24
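The registryDockerCfg value is a base64-encoded Docker config JSON with an auths entry for the registry. If ~/.docker/config.json on the Bastion host also holds other registries, or keeps credentials in a credential helper (credsStore) instead of inline, you can build the value directly from the Harbor user's credentials. A minimal sketch, assuming GNU base64 (for -w0); the function name is hypothetical.

```shell
# Hypothetical helper (bash): prints, on one line, the base64 encoding of
#   {"auths":{"<registry>":{"auth":"<base64(user:password)>"}}}
# for use as registryDockerCfg.
#   $1 - registry host, $2 - user name, $3 - password
make_registry_docker_cfg() {
  local registry="$1" user="$2" password="$3" auth
  # The password only appears inside the base64 "auth" field, so it needs no JSON escaping.
  auth="$(printf '%s:%s' "$user" "$password" | base64 -w0)"
  printf '{"auths":{"%s":{"auth":"%s"}}}' "$registry" "$auth" | base64 -w0
  echo
}
```

For example: make_registry_docker_cfg harbor.local deckhouse '<PASSWORD>'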

The installation configuration file is ready.

Installing DKP

Copy the prepared configuration file to the Bastion host (for example, to ~/deckhouse). Change to that directory and start the installer using the following command:

docker run --pull=always -it -v "$PWD/config.yml:/config.yml" -v "$HOME/.ssh/:/tmp/.ssh/" --network=host -v "$PWD/dhctl-tmp:/tmp/dhctl" harbor.local/deckhouse/<DKP_EDITION>/install:stable bash

If there is no local DNS server in the internal network and the domain names are configured in the Bastion host’s /etc/hosts, make sure to specify --network=host so that Docker can use those name resolutions.

After the image is pulled and the container starts successfully, you will see a shell prompt inside the container:

[deckhouse] root@guide-bastion / #

Start the DKP installation with the following command (specify the master node’s internal IP address):

dhctl bootstrap --ssh-user=deckhouse --ssh-host=<master_ip> --ssh-agent-private-keys=/tmp/.ssh/<SSH_PRIVATE_KEY_FILE> \
  --config=/config.yml \
  --ask-become-pass

Replace <SSH_PRIVATE_KEY_FILE> with the file name of your private key. For example, for an RSA key it can be id_rsa, and for an ED25519 key it can be id_ed25519.

The installation process may take up to 30 minutes depending on the network speed.

If the installation completes successfully, you will see the following message:

┌ ⛵ ~ Bootstrap: Run post bootstrap actions
│ ┌ Set release channel to deckhouse module config
│ │ 🎉 Succeeded!
│ └ Set release channel to deckhouse module config (0.09 seconds)
└ ⛵ ~ Bootstrap: Run post bootstrap actions (0.09 seconds)

┌ ⛵ ~ Bootstrap: Clear cache
│ ❗ ~ Next run of "dhctl bootstrap" will create a new Kubernetes cluster.
└ ⛵ ~ Bootstrap: Clear cache (0.00 seconds)

🎉 Deckhouse cluster was created successfully!

Adding nodes to the cluster

Add a node to the cluster (for details on adding a static node, see the documentation).

To do this, follow these steps:

- Configure a StorageClass for local storage by running the following command on the master node:

sudo -i d8 k create -f - << EOF
apiVersion: deckhouse.io/v1alpha1
kind: LocalPathProvisioner
metadata:
  name: localpath
spec:
  path: "/opt/local-path-provisioner"
  reclaimPolicy: Delete
EOF

- Set the created StorageClass as the default StorageClass. To do this, run the following command on the master node:

sudo -i d8 k patch mc global --type merge \
  -p "{\"spec\": {\"settings\":{\"defaultClusterStorageClass\":\"localpath\"}}}"

- Create the worker NodeGroup and add a node using Cluster API Provider Static (CAPS):

sudo -i d8 k create -f - <<EOF
apiVersion: deckhouse.io/v1
kind: NodeGroup
metadata:
  name: worker
spec:
  nodeType: Static
  staticInstances:
    count: 1
    labelSelector:
      matchLabels:
        role: worker
EOF

- Generate an SSH key with an empty passphrase. Run the following command on the master node:

ssh-keygen -t ed25519 -f /dev/shm/caps-id -C "" -N ""

- Create an SSHCredentials resource in the cluster. To do this, run the following command on the master node:

sudo -i d8 k create -f - <<EOF
apiVersion: deckhouse.io/v1alpha2
kind: SSHCredentials
metadata:
  name: caps
spec:
  user: caps
  privateSSHKey: "`cat /dev/shm/caps-id | base64 -w0`"
EOF

- Print the public part of the SSH key generated earlier (you will need it in the next step). Run the following command on the master node:

cat /dev/shm/caps-id.pub

- On the prepared server for the worker node, create the caps user. Run the following commands as root, specifying the public SSH key obtained in the previous step:

# Specify the public part of the user's SSH key.
export KEY='<SSH-PUBLIC-KEY>'
useradd -m -s /bin/bash caps
usermod -aG sudo caps
echo 'caps ALL=(ALL) NOPASSWD: ALL' | sudo EDITOR='tee -a' visudo
mkdir /home/caps/.ssh
echo "$KEY" >> /home/caps/.ssh/authorized_keys
chown -R caps:caps /home/caps
chmod 700 /home/caps/.ssh
chmod 600 /home/caps/.ssh/authorized_keys

- Create a StaticInstance for the node being added. Run the following command on the master node, specifying the IP address of the node you want to add:

# Specify the IP address of the node to be added to the cluster.
export NODE=<NODE-IP-ADDRESS>
sudo -i d8 k create -f - <<EOF
apiVersion: deckhouse.io/v1alpha2
kind: StaticInstance
metadata:
  name: d8cluster-worker
  labels:
    role: worker
spec:
  address: "$NODE"
  credentialsRef:
    kind: SSHCredentials
    name: caps
EOF

- Make sure all cluster nodes are in the Ready status:

$ sudo -i d8 k get no
NAME               STATUS   ROLES                  AGE   VERSION
d8cluster          Ready    control-plane,master   30m   v1.23.17
d8cluster-worker   Ready    worker                 10m   v1.23.17

It may take some time for all DKP components to start after the installation completes.
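When waiting for the worker node to join, a small filter over the d8 k get no output can list nodes that are not Ready yet. A sketch; the function name is hypothetical, and it treats any STATUS other than exactly Ready (for example NotReady or Ready,SchedulingDisabled) as not ready.

```shell
# Hypothetical helper (bash + awk): reads "d8 k get no" output on stdin, prints
# the names of nodes whose STATUS column is not exactly "Ready", and returns 1
# if there is at least one such node.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}
```

For example, on the master node: sudo -i d8 k get no | not_ready_nodes && echo "all nodes are Ready"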

Configuring the Ingress controller and creating a user

Installing the ingress controller

Make sure the Kruise controller manager Pod of the ingress-nginx module is in the Running status. To do this, run the following command on the master node:

$ sudo -i d8 k -n d8-ingress-nginx get po -l app=kruise

NAME READY STATUS RESTARTS AGE

kruise-controller-manager-7dfcbdc549-b4wk7 3/3 Running 0 15m

Create the ingress-nginx-controller.yml file on the master node containing the Ingress controller configuration:

# NGINX Ingress controller parameters.
# https://deckhouse.io/modules/ingress-nginx/cr.html
apiVersion: deckhouse.io/v1
kind: IngressNginxController
metadata:
  name: nginx
spec:
  # The name of the IngressClass served by the NGINX Ingress controller.
  ingressClass: nginx
  # How traffic enters from outside the cluster.
  inlet: HostPort
  hostPort:
    httpPort: 80
    httpsPort: 443
  # Defines which nodes will run the component.
  # You may want to adjust this.
  nodeSelector:
    node-role.kubernetes.io/control-plane: ""
  tolerations:
  - effect: NoSchedule
    key: node-role.kubernetes.io/control-plane
    operator: Exists

Apply it by running the following command on the master node:

sudo -i d8 k create -f $PWD/ingress-nginx-controller.yml

Starting the Ingress controller after DKP installation may take some time. Before you proceed, make sure the Ingress controller is running (run the following command on the master node):

$ sudo -i d8 k -n d8-ingress-nginx get po -l app=controller

NAME READY STATUS RESTARTS AGE

controller-nginx-r6hxc 3/3 Running 0 5m

Creating a user to access the cluster web interface

Create the user.yml file on the master node containing the user account definition and access rights:

# RBAC and authorization settings.
# https://deckhouse.io/modules/user-authz/cr.html#clusterauthorizationrule
apiVersion: deckhouse.io/v1
kind: ClusterAuthorizationRule
metadata:
  name: admin
spec:
  # List of Kubernetes RBAC subjects.
  subjects:
  - kind: User
    name: admin@deckhouse.io
  # A predefined access level template.
  accessLevel: SuperAdmin
  # Allow the user to use kubectl port-forward.
  portForwarding: true
---
# Static user data.
# https://deckhouse.io/modules/user-authn/cr.html#user
apiVersion: deckhouse.io/v1
kind: User
metadata:
  name: admin
spec:
  # User email.
  email: admin@deckhouse.io
  # This is the password hash for 3xqgv2auys.
  # Generate your own or use this one for testing purposes only:
  # echo -n '3xqgv2auys' | htpasswd -BinC 10 "" | cut -d: -f2 | tr -d '\n' | base64 -w0; echo
  # You may want to change it.
  password: 'JDJhJDEwJGtsWERBY1lxMUVLQjVJVXoxVkNrSU8xVEI1a0xZYnJNWm16NmtOeng5VlI2RHBQZDZhbjJH'
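The password field holds the base64-encoded bcrypt hash produced by the pipeline in the comment above. To set your own password before applying the file, that pipeline can be wrapped in a helper. A sketch with a hypothetical function name, assuming htpasswd (package apache2-utils on Debian/Ubuntu, httpd-tools on RHEL-based systems) and GNU base64:

```shell
# Hypothetical helper (bash): prints the base64-encoded bcrypt hash (cost 10)
# of a password, using the same pipeline as the comment in user.yml.
make_user_password_hash() {
  local password="$1"
  if ! command -v htpasswd >/dev/null 2>&1; then
    echo "htpasswd not found (install apache2-utils or httpd-tools)" >&2
    return 1
  fi
  # htpasswd -B: bcrypt, -i: read the password from stdin, -n: print to stdout,
  # -C 10: bcrypt cost; cut keeps only the hash after the "user:" prefix.
  printf '%s' "$password" | htpasswd -BinC 10 "" | cut -d: -f2 | tr -d '\n' | base64 -w0
  echo
}
```

For example: make_user_password_hash '<NEW_PASSWORD>', then paste the output into the password field. A password passed as an argument may end up in the shell history.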

Apply it by running the following command on the master node:

sudo -i d8 k create -f $PWD/user.yml

Configuring DNS records

To access the cluster web UIs, configure the following domain names to resolve to the master node’s internal IP address (use DNS names according to the template specified in the publicDomainTemplate parameter). Example for the %s.example.com DNS name template:

api.example.com

code.example.com

commander.example.com

console.example.com

dex.example.com

documentation.example.com

grafana.example.com

hubble.example.com

istio.example.com

istio-api-proxy.example.com

kubeconfig.example.com

openvpn-admin.example.com

prometheus.example.com

status.example.com

tools.example.com

upmeter.example.com

You can do this either on an internal DNS server or by adding the mappings to /etc/hosts on the required computers.
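When using /etc/hosts, a single line can map all the names listed above to the master node’s internal IP address. A sketch that expands a publicDomainTemplate value for those names; the function name is hypothetical, and the name list is copied from this guide, so adjust it to the modules you actually use.

```shell
# Hypothetical helper (bash): prints one /etc/hosts line that maps the web UI
# names listed above to an IP address.
#   $1 - master node internal IP, $2 - publicDomainTemplate, e.g. "%s.example.com"
dkp_hosts_line() {
  local ip="$1" template="$2" name line
  line="$ip"
  for name in api code commander console dex documentation grafana hubble \
              istio istio-api-proxy kubeconfig openvpn-admin prometheus \
              status tools upmeter; do
    # Substitute the application name for %s in the template.
    line="$line $(printf '%s\n' "$template" | sed "s/%s/$name/")"
  done
  printf '%s\n' "$line"
}
```

For example: dkp_hosts_line <MASTER_INTERNAL_IP> '%s.example.com' | sudo tee -a /etc/hosts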

To verify that the cluster has been deployed correctly and is functioning properly, open the Grafana web UI, which shows the cluster status. The Grafana address is generated from the publicDomainTemplate value. For example, if %s.example.com is used, Grafana will be available at grafana.example.com. Sign in using the credentials of the user you created earlier.