How do I find out all Deckhouse parameters?

Deckhouse is configured using global settings, module settings, and various custom resources. Read more in the documentation.

- Display global Deckhouse settings:

d8 k get mc global -o yaml

- List the status of all modules (available for Deckhouse version 1.47+):

d8 k get modules

- Display the settings of the user-authn module configuration:

d8 k get moduleconfigs user-authn -o yaml

How do I find the documentation for the version installed?

The documentation for the Deckhouse version running in the cluster is available at documentation.<cluster_domain>, where <cluster_domain> is the DNS name that matches the template defined in the modules.publicDomainTemplate parameter.

Documentation is available when the documentation module is enabled. It is enabled by default in all bundles except Minimal.

Deckhouse update

How to find out in which mode the cluster is being updated?

You can view the cluster update mode in the configuration of the deckhouse module. To do this, run the following command:

d8 k get mc deckhouse -oyaml

Example of the output:

apiVersion: deckhouse.io/v1alpha1
kind: ModuleConfig
metadata:
  creationTimestamp: "2022-12-14T11:13:03Z"
  generation: 1
  name: deckhouse
  resourceVersion: "3258626079"
  uid: c64a2532-af0d-496b-b4b7-eafb5d9a56ee
spec:
  settings:
    releaseChannel: Stable
    update:
      windows:
      - days:
        - Mon
        from: "19:00"
        to: "20:00"
  version: 1
status:
  state: Enabled
  status: ""
  type: Embedded
  version: "1"

There are three possible update modes:

- Automatic, without update windows. The cluster is updated as soon as a new version appears on the corresponding release channel.

- Automatic, with update windows. The cluster is updated in the nearest available window after a new version appears on the release channel.

- Manual. Manual action is required to apply the update.
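As a rough illustration, the three modes can be told apart from two settings of the deckhouse module configuration: update.mode and update.windows. The describe_update_mode helper below is hypothetical (not part of Deckhouse); it assumes that an empty update.mode means the default automatic mode, as in the example output above, and that only the value Manual selects manual mode.

```shell
#!/usr/bin/env bash
# Hypothetical helper: maps update.mode and the number of configured
# update windows to one of the three update modes described above.
# Assumption: an empty mode means the default (automatic) mode.
describe_update_mode() {
  local mode="$1" windows_count="${2:-0}"
  if [ "$mode" = "Manual" ]; then
    echo "Manual"
  elif [ "$windows_count" -gt 0 ]; then
    echo "Automatic with update windows"
  else
    echo "Automatic without update windows"
  fi
}

# Usage against a live cluster (not executed here):
#   mode=$(d8 k get mc deckhouse -o jsonpath='{.spec.settings.update.mode}')
#   windows=$(d8 k get mc deckhouse -o jsonpath='{.spec.settings.update.windows[*].from}' | wc -w)
#   describe_update_mode "$mode" "$windows"
```

For the example configuration above (no mode set, one window on Mondays), this sketch reports automatic mode with update windows.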

How do I set the desired release channel?

To switch to another release channel automatically, set the releaseChannel parameter in the deckhouse module configuration.

This activates the automatic release channel stabilization mechanism.

Here is an example of the deckhouse module configuration with the Stable release channel:

apiVersion: deckhouse.io/v1alpha1
kind: ModuleConfig
metadata:
  name: deckhouse
spec:
  version: 1
  settings:
    releaseChannel: Stable
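Instead of editing the whole resource, the same change can be made with a JSON merge patch. The set_release_channel helper below is a hypothetical sketch; it uses the standard kubectl patch command through d8 k, and the list of accepted channel names is an assumption (the channels mentioned in this FAQ plus Beta).

```shell
#!/usr/bin/env bash
# Hypothetical helper: sets spec.settings.releaseChannel in the deckhouse
# ModuleConfig with a JSON merge patch. The channel list is an assumption.
set_release_channel() {
  local channel="$1"
  case "$channel" in
    Alpha|Beta|EarlyAccess|Stable|RockSolid) ;;
    *) echo "unknown release channel: $channel" >&2; return 1 ;;
  esac
  d8 k patch mc deckhouse --type=merge \
    -p "{\"spec\":{\"settings\":{\"releaseChannel\":\"$channel\"}}}"
}

# Usage (not executed here): set_release_channel EarlyAccess
```

A merge patch only touches the releaseChannel key, so other settings such as update windows are left as they are.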

How do I disable automatic updates?

To completely disable the Deckhouse update mechanism, remove the releaseChannel parameter in the deckhouse module configuration.

In this case, Deckhouse does not check for updates and doesn’t apply patch releases.

Disabling automatic updates is strongly discouraged: it blocks patch releases, which may contain fixes for critical vulnerabilities and bugs.
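One way to remove the parameter without opening an editor is a JSON merge patch: per RFC 7386, setting a key to null deletes it. The disable_deckhouse_updates helper below is a hypothetical sketch built on the standard kubectl patch command available through d8 k.

```shell
#!/usr/bin/env bash
# Hypothetical helper: removes spec.settings.releaseChannel from the
# deckhouse ModuleConfig. In a JSON merge patch, a null value deletes the key.
disable_deckhouse_updates() {
  d8 k patch mc deckhouse --type=merge \
    -p '{"spec":{"settings":{"releaseChannel":null}}}'
}

# Usage (not executed here): disable_deckhouse_updates
```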

How do I apply an update without having to wait for the update window, canary-release and manual update mode?

To apply an update immediately, set the release.deckhouse.io/apply-now: "true" annotation on the DeckhouseRelease resource.

Caution! In this case, the update windows, the canary-release settings, and the manual update mode are ignored. The update is applied immediately after the annotation is set.

An example of a command to set the annotation to skip the update windows for version v1.56.2:

d8 k annotate deckhousereleases v1.56.2 release.deckhouse.io/apply-now="true"

An example of a resource with the update window skipping annotation in place:

apiVersion: deckhouse.io/v1alpha1
kind: DeckhouseRelease
metadata:
  annotations:
    release.deckhouse.io/apply-now: "true"
...

How to understand what changes the update contains and how it will affect the cluster?

You can find all the information about Deckhouse versions in the list of Deckhouse releases.

Summary information about important changes, component version updates, and which components in the cluster will be restarted during the update process can be found in the description of the zero patch version of the release. For example, v1.46.0 for the v1.46 Deckhouse release.

A detailed list of changes can be found in the Changelog, which is referenced in each release.

How do I know that the cluster is being updated?

During the update:

- The DeckhouseUpdating alert is displayed.
- The deckhouse Pod is not in the Ready status. If the Pod does not become Ready for a long time, this may indicate problems in Deckhouse operation. Diagnosis is necessary.

How do I know that the update was successful?

If the DeckhouseUpdating alert is resolved, then the update is complete.

You can also check the status of Deckhouse releases by running the following command:

d8 k get deckhouserelease

Example output:

NAME      PHASE        TRANSITIONTIME   MESSAGE
v1.46.8   Superseded   13d
v1.46.9   Superseded   11d
v1.47.0   Superseded   4h12m
v1.47.1   Deployed     4h12m

The Deployed status of a version indicates that Deckhouse has switched to that version. It does not mean that the update completed successfully.
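When checking this in scripts, the Deployed version can be picked out of the command output. The deployed_release helper below is a hypothetical sketch; it assumes the column layout shown in the example output (NAME first, PHASE second) and skips the header line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `d8 k get deckhouserelease` output on stdin and
# prints the name of the release whose PHASE column is Deployed.
deployed_release() {
  awk 'NR > 1 && $2 == "Deployed" { print $1 }'
}

# Usage (not executed here): d8 k get deckhouserelease | deployed_release
```

For the example output above, this prints v1.47.1.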

Check the status of the Deckhouse Pod:

d8 k -n d8-system get pods -l app=deckhouse

Example output:

NAME                         READY   STATUS    RESTARTS   AGE
deckhouse-7844b47bcd-qtbx9   1/1     Running   0          1d

- If the Pod status is Running and the READY column shows 1/1, the update completed successfully.
- If the Pod status is Running and the READY column shows 0/1, the update is not over yet. If this lasts for more than 20-30 minutes, it may indicate problems in Deckhouse operation. Diagnosis is necessary.
- If the Pod status is not Running, this may indicate problems in Deckhouse operation. Diagnosis is necessary.
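The three rules above can be applied mechanically to the Pod list. The classify_deckhouse_pod helper below is a hypothetical sketch; it assumes the default column order of the get pods output (NAME, READY, STATUS, ...) and does not track how long a Pod has been in its current state.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `d8 k -n d8-system get pods -l app=deckhouse`
# output on stdin and applies the three rules above to every Pod line.
classify_deckhouse_pod() {
  awk 'NR > 1 {
    if ($3 != "Running")  state = "problem: Pod is not Running, diagnosis is necessary"
    else if ($2 == "1/1") state = "update completed"
    else                  state = "update in progress (investigate if it lasts over 20-30 minutes)"
    print $1 ": " state
  }'
}

# Usage (not executed here):
#   d8 k -n d8-system get pods -l app=deckhouse | classify_deckhouse_pod
```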

Possible actions if something went wrong:

- Check the Deckhouse logs using the following command:

d8 k -n d8-system logs -f -l app=deckhouse | jq -Rr 'fromjson? | .msg'

- Collect debugging information and contact technical support.
- Ask the community for help.

How do I know that a new version is available for the cluster?

As soon as a new version of Deckhouse appears on the release channel set in the cluster:

- The DeckhouseReleaseIsWaitingManualApproval alert fires if the cluster uses manual update mode (the update.mode parameter is set to Manual).
- A new DeckhouseRelease custom resource appears. Use the d8 k get deckhousereleases command to view the list of releases. If a DeckhouseRelease is in the Pending state, the specified version has not been installed yet. Possible reasons why a DeckhouseRelease may be in Pending:
  - Manual update mode is set (the update.mode parameter is set to Manual).
  - Automatic update mode is set and update windows are configured, but the next window has not started yet.
  - Automatic update mode is set and no update windows are configured, but the installation of the version has been postponed for a random time by the mechanism that reduces the load on the container image registry. In this case, the status.message field of the DeckhouseRelease resource contains a corresponding message.
  - The update.notification.minimalNotificationTime parameter is set, and the specified time has not passed yet.
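To see at a glance which releases are still waiting, the Pending entries can be filtered out of the list. The pending_releases helper below is a hypothetical sketch; it assumes the NAME and PHASE columns come first, as in the d8 k get deckhouserelease example output earlier in this FAQ. The reason is then read from status.message with a standard jsonpath query (the release name in the comment is a placeholder).

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the names of DeckhouseRelease resources in the
# Pending phase from `d8 k get deckhousereleases` output read on stdin.
pending_releases() {
  awk 'NR > 1 && $2 == "Pending" { print $1 }'
}

# Usage (not executed here):
#   d8 k get deckhousereleases | pending_releases
#   d8 k get deckhouserelease <RELEASE> -o jsonpath='{.status.message}'
```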

How do I get information about the upcoming update in advance?

You can get information about upcoming minor version updates on the release channel in advance in the following ways:

- Configure manual update mode. In this case, when a new version appears on the release channel, the DeckhouseReleaseIsWaitingManualApproval alert is displayed and a new DeckhouseRelease custom resource appears in the cluster.
- Configure automatic update mode and set the minimalNotificationTime parameter to the minimum time by which the update will be postponed. In this case, when a new version appears on the release channel, a new DeckhouseRelease custom resource appears in the cluster. If you also specify a URL in the update.notification.webhook parameter, that webhook is called as well.

How do I find out which version of Deckhouse is on which release channel?

Information about which version of Deckhouse is on which release channel can be obtained at https://releases.deckhouse.io.

How does automatic Deckhouse update work?

Every minute, Deckhouse checks whether a new release has appeared on the release channel specified by the releaseChannel parameter.

When a new release appears on the release channel, Deckhouse downloads it and creates a DeckhouseRelease custom resource.

After the DeckhouseRelease custom resource is created in the cluster, Deckhouse updates the deckhouse Deployment and sets the image tag to the release tag, according to the selected update mode and update windows (by default, updates are applied automatically at any time).

To get the list and status of all releases, use the following command:

d8 k get deckhousereleases

Starting from DKP 1.70, patch releases (e.g., an update from version 1.70.1 to version 1.70.2) are installed taking the update windows into account. Prior to DKP 1.70, patch version updates ignore the update windows settings and are applied as soon as they are available.

What happens when the release channel changes?

- When switching to a more stable release channel (e.g., from Alpha to EarlyAccess), Deckhouse downloads release data from the release channel (the EarlyAccess release channel in the example) and compares it with the existing DeckhouseReleases:
  - Deckhouse deletes later releases (by semver) that have not yet been applied (with the Pending status).
  - If the latest releases have already been Deployed, Deckhouse holds the current release until a later release appears on the release channel (on the EarlyAccess release channel in the example).

- When switching to a less stable release channel (e.g., from EarlyAccess to Alpha), the following actions take place:
  - Deckhouse downloads release data from the release channel (the Alpha release channel in the example) and compares it with the existing DeckhouseReleases.
  - Then Deckhouse performs the update according to the update parameters.

What do I do if Deckhouse fails to retrieve updates from the release channel?

- Make sure that the desired release channel is configured.

- Make sure that the DNS name of the Deckhouse container registry is resolved correctly.

- Retrieve and compare the IP addresses of the Deckhouse container registry (registry.deckhouse.io) on one of the nodes and in the Deckhouse pod. They should match.

To retrieve the IP address of the Deckhouse container registry on a node, run the following command:

getent ahosts registry.deckhouse.io

Example output:

46.4.145.194    STREAM registry.deckhouse.io
46.4.145.194    DGRAM
46.4.145.194    RAW

To retrieve the IP address of the Deckhouse container registry in a pod, run the following command:

d8 k -n d8-system exec -ti svc/deckhouse-leader -c deckhouse -- getent ahosts registry.deckhouse.io

Example output:

46.4.145.194    STREAM registry.deckhouse.io
46.4.145.194    DGRAM  registry.deckhouse.io

If the retrieved IP addresses do not match, inspect the DNS settings on the host. Specifically, check the list of domains in the search parameter of the /etc/resolv.conf file (it affects name resolution in the Deckhouse pod). If the search parameter of the /etc/resolv.conf file includes a domain where wildcard record resolution is configured, it may result in incorrect resolution of the IP address of the Deckhouse container registry (see the following example).
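The comparison of the node and pod results can be scripted by reducing each getent ahosts output to its set of unique addresses. The resolved_ips and same_registry_ips helpers below are a hypothetical sketch; they only look at the first column of the output and ignore the socket type and canonical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the unique IP addresses (first column) of
# `getent ahosts` output read on stdin.
resolved_ips() {
  awk '{ print $1 }' | sort -u
}

# Hypothetical helper: returns 0 if two saved `getent ahosts` outputs
# resolve to the same set of IP addresses.
same_registry_ips() {
  local node_output="$1" pod_output="$2"
  [ "$(printf '%s\n' "$node_output" | resolved_ips)" = \
    "$(printf '%s\n' "$pod_output" | resolved_ips)" ]
}

# Usage (not executed here; run the first command on a node):
#   node=$(getent ahosts registry.deckhouse.io)
#   pod=$(d8 k -n d8-system exec svc/deckhouse-leader -c deckhouse -- getent ahosts registry.deckhouse.io)
#   same_registry_ips "$node" "$pod" || echo "IP addresses differ: check /etc/resolv.conf"
```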

How to check the job queue in Deckhouse?

To view the status of all Deckhouse job queues, run the following command:

d8 k -n d8-system exec -it svc/deckhouse-leader -c deckhouse -- deckhouse-controller queue list

Example of the output (queues are empty):

Summary:
- 'main' queue: empty.
- 88 other queues (0 active, 88 empty): 0 tasks.
- no tasks to handle.

To view the status of the main Deckhouse task queue, run the following command:

d8 k -n d8-system exec -it svc/deckhouse-leader -c deckhouse -- deckhouse-controller queue main

Example of the output (38 tasks in the main queue):

Queue 'main': length 38, status: 'run first task'

Example of the output (the main queue is empty):

Queue 'main': length 0, status: 'waiting for task 0s'
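When waiting for Deckhouse to finish processing, the task count can be read from the first line of the main queue output. The main_queue_length helper below is a hypothetical sketch; it assumes the "Queue 'main': length N, ..." line format shown in the examples above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extracts N from a line such as
#   Queue 'main': length 38, status: 'run first task'
# read on stdin (the first matching line only).
main_queue_length() {
  sed -n "s/^Queue 'main': length \([0-9][0-9]*\),.*/\1/p" | head -n 1
}

# Usage (not executed here): poll until the main queue is empty.
#   until [ "$(d8 k -n d8-system exec svc/deckhouse-leader -c deckhouse -- \
#       deckhouse-controller queue main | main_queue_length)" = "0" ]; do
#     sleep 10
#   done
```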

Air-gapped environment; working via proxy and third-party registry

How do I configure Deckhouse to use a third-party registry?

This feature is available in the following editions: BE, SE, SE+, EE.

Deckhouse only supports Bearer authentication for container registries.

Tested and guaranteed to work with the following container registries: Nexus, Harbor, Artifactory, Docker Registry, Quay.

Deckhouse can be configured to work with a third-party registry (e.g., a proxy registry inside private environments).

Define the following parameters in the InitConfiguration resource:

- imagesRepo: <PROXY_REGISTRY>/<DECKHOUSE_REPO_PATH>/ee. The path to the Deckhouse EE image in the third-party registry, for example, imagesRepo: registry.deckhouse.io/deckhouse/ee;
- registryDockerCfg: <BASE64>. Base64-encoded auth credentials of the third-party registry.

Use the following registryDockerCfg if anonymous access to Deckhouse images is allowed in the third-party registry:

{"auths": { "<PROXY_REGISTRY>": {}}}

registryDockerCfg must be Base64-encoded.
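The anonymous variant can be generated the same way as the authenticated one, with GNU coreutils base64 (-w0 disables line wrapping). The anonymous_registry_docker_cfg helper below is a hypothetical sketch; the registry address in the usage comment is a placeholder.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints a Base64-encoded registryDockerCfg for a
# registry that allows anonymous access. Argument: <HOSTNAME>[:PORT].
anonymous_registry_docker_cfg() {
  local registry="$1"
  printf '{"auths": { "%s": {}}}' "$registry" | base64 -w0
}

# Usage (placeholder address): anonymous_registry_docker_cfg 'registry.example.com:5000'
```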

Use the following registryDockerCfg if authentication is required to access Deckhouse images in the third-party registry:

{"auths": { "<PROXY_REGISTRY>": {"username":"<PROXY_USERNAME>","password":"<PROXY_PASSWORD>","auth":"<AUTH_BASE64>"}}}

- <PROXY_USERNAME>: auth username for <PROXY_REGISTRY>.
- <PROXY_PASSWORD>: auth password for <PROXY_REGISTRY>.
- <PROXY_REGISTRY>: registry address, <HOSTNAME>[:PORT].
- <AUTH_BASE64>: Base64-encoded <PROXY_USERNAME>:<PROXY_PASSWORD> auth string.

registryDockerCfg must be Base64-encoded.

You can use the following script to generate registryDockerCfg:

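# Replace the placeholders with your registry credentials and address.
declare MYUSER='<PROXY_USERNAME>'
declare MYPASSWORD='<PROXY_PASSWORD>'
declare MYREGISTRY='<PROXY_REGISTRY>'

# Base64-encoded "<user>:<password>" string for the "auth" field.
MYAUTH=$(echo -n "$MYUSER:$MYPASSWORD" | base64 -w0)
# Build the Docker config JSON and Base64-encode it as a whole.
MYRESULTSTRING=$(echo -n "{\"auths\":{\"$MYREGISTRY\":{\"username\":\"$MYUSER\",\"password\":\"$MYPASSWORD\",\"auth\":\"$MYAUTH\"}}}" | base64 -w0)

echo "$MYRESULTSTRING"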
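Before putting the value into InitConfiguration, it can help to decode it back and check the registry address and credentials. The inspect_registry_docker_cfg helper below is a hypothetical sketch; it parses the nested auth field with sed, so it expects the compact JSON produced by the script above. Note that it prints the decoded credentials to the terminal.

```shell
#!/usr/bin/env bash
# Hypothetical helper: decodes a registryDockerCfg value, prints the JSON,
# and decodes the nested "auth" field (expects compact JSON, as above).
# Warning: this prints the username and password in clear text.
inspect_registry_docker_cfg() {
  local cfg_json auth
  cfg_json=$(printf '%s' "$1" | base64 -d) || return 1
  echo "$cfg_json"
  auth=$(printf '%s' "$cfg_json" | sed -n 's/.*"auth":"\([^"]*\)".*/\1/p')
  if [ -n "$auth" ]; then
    printf 'auth decodes to: %s\n' "$(printf '%s' "$auth" | base64 -d)"
  fi
}

# Usage: inspect_registry_docker_cfg "$MYRESULTSTRING"
```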

The InitConfiguration resource provides two more parameters for non-standard third-party registry configurations:

- registryCA: root CA certificate to validate the third-party registry's HTTPS certificate (if self-signed certificates are used);
- registryScheme: registry scheme (HTTP or HTTPS). The default value is HTTPS.

Tips for configuring Nexus

When interacting with a docker repository located in Nexus (e.g., executing docker pull or docker push commands), you must specify the address in the <NEXUS_URL>:<REPOSITORY_PORT>/<PATH> format.

Using the URL value from the Nexus repository options is not acceptable.

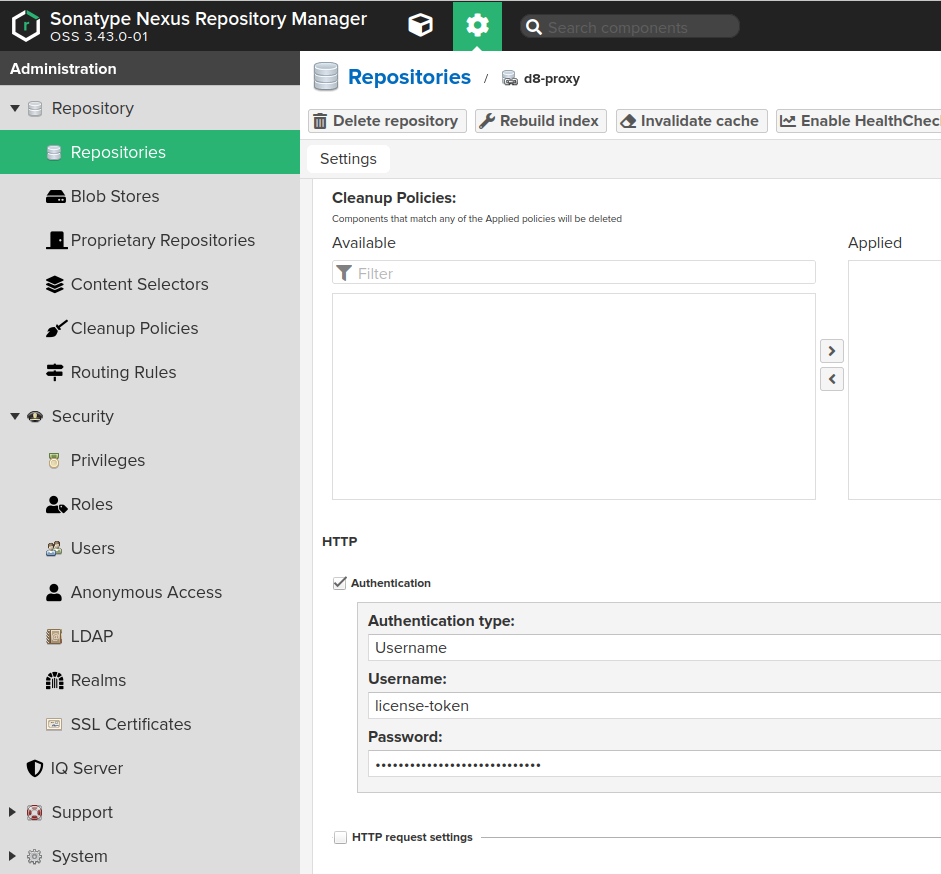

The following requirements must be met if the Nexus repository manager is used:

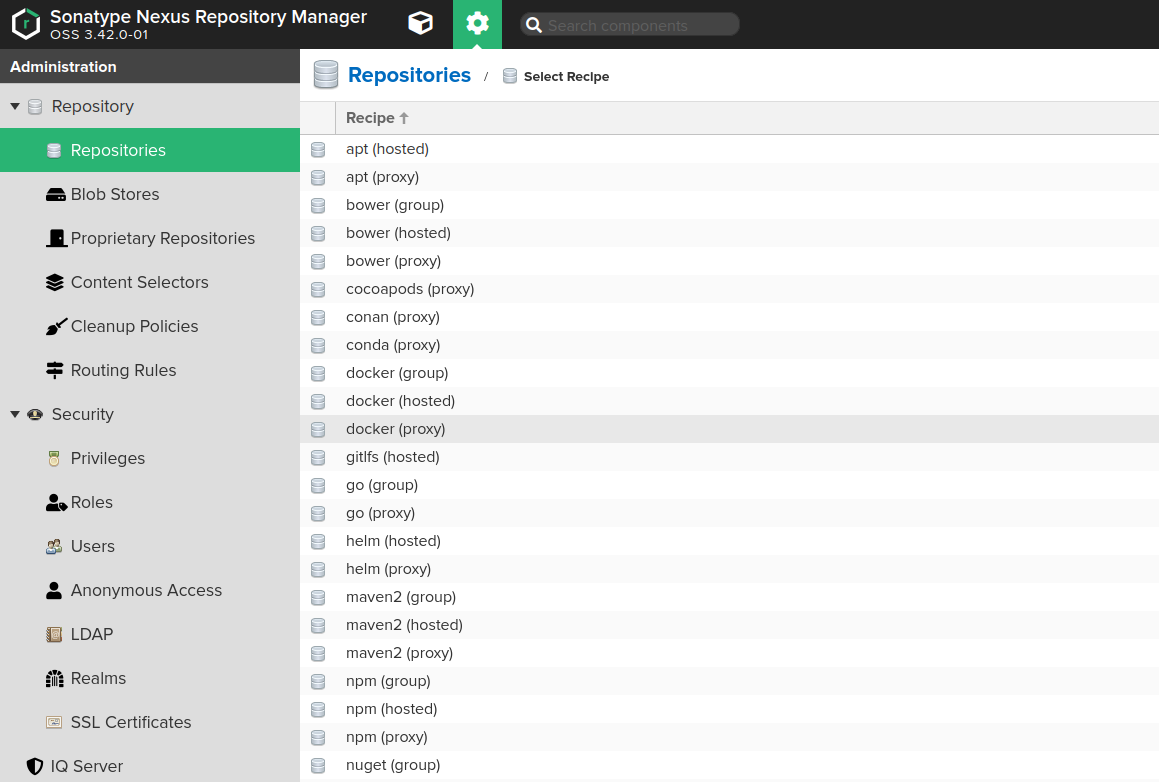

- Docker proxy repository must be pre-created (Administration -> Repository -> Repositories):

- The

Maximum metadata ageparameter is set to0for the repository.

- The

- Access control configured as follows:

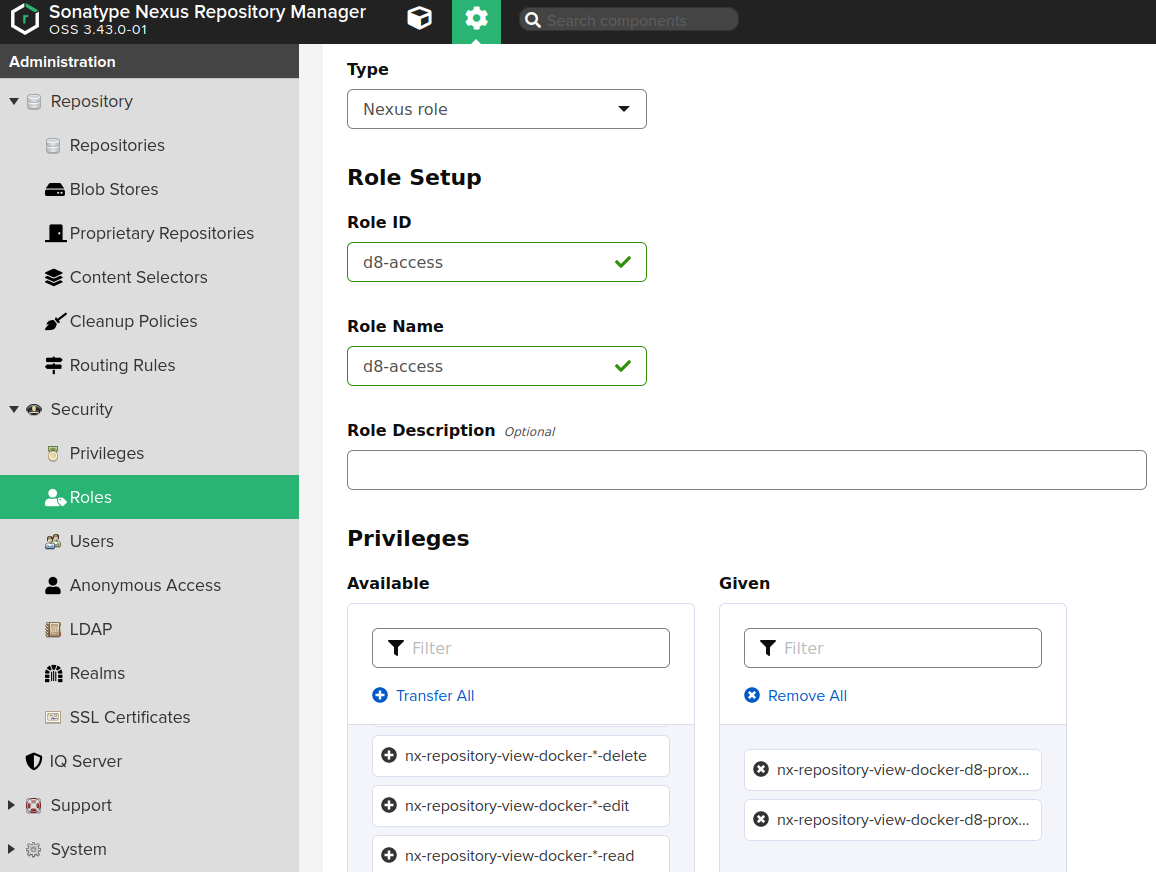

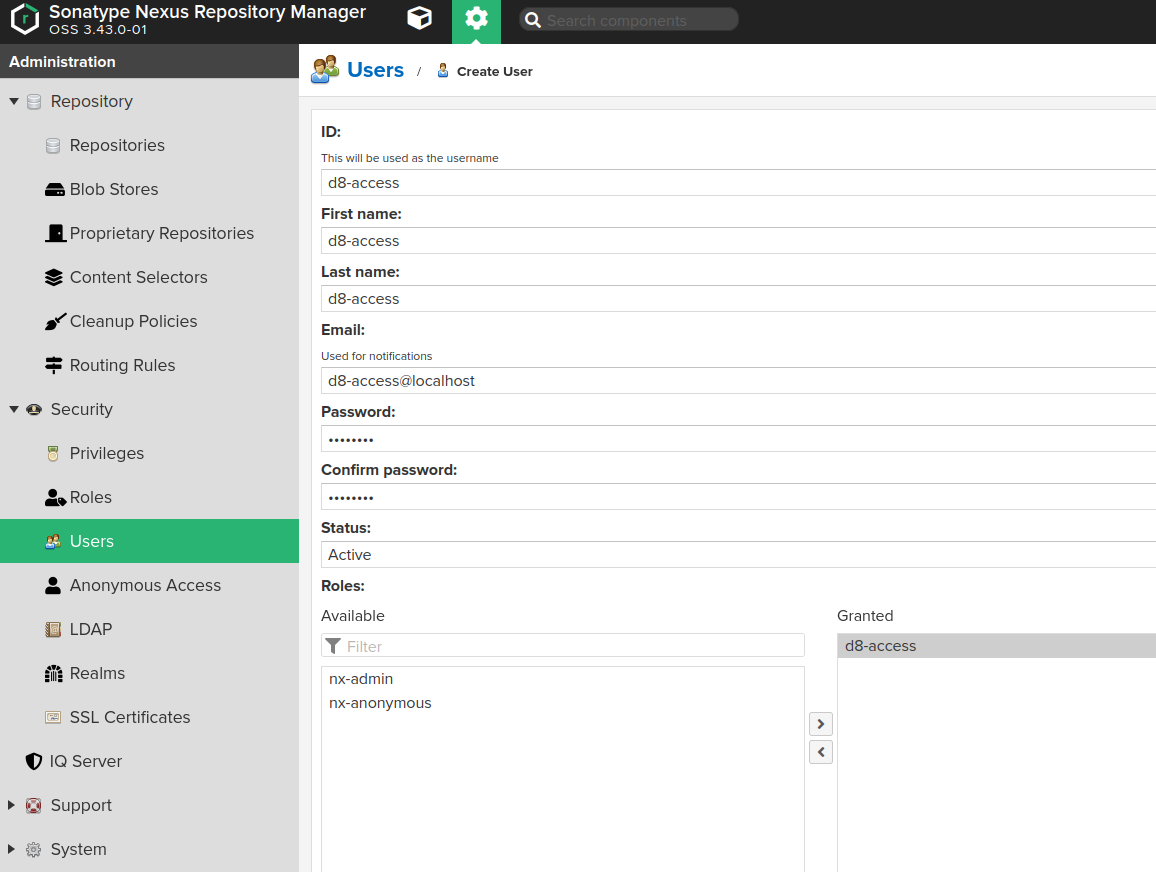

- The Nexus role is created (Administration -> Security -> Roles) with the following permissions:

nx-repository-view-docker-<repository>-browsenx-repository-view-docker-<repository>-read

- A user (Administration -> Security -> Users) with the Nexus role is created.

- The Nexus role is created (Administration -> Security -> Roles) with the following permissions:

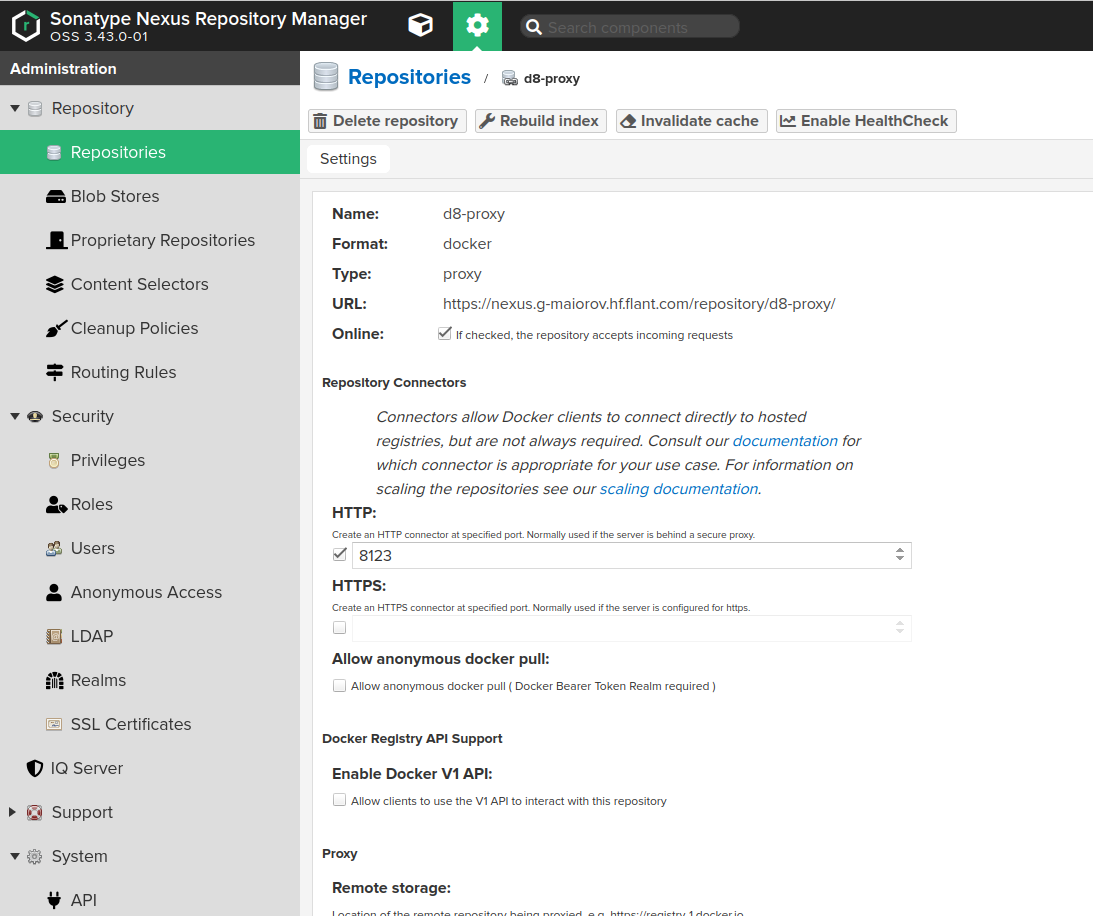

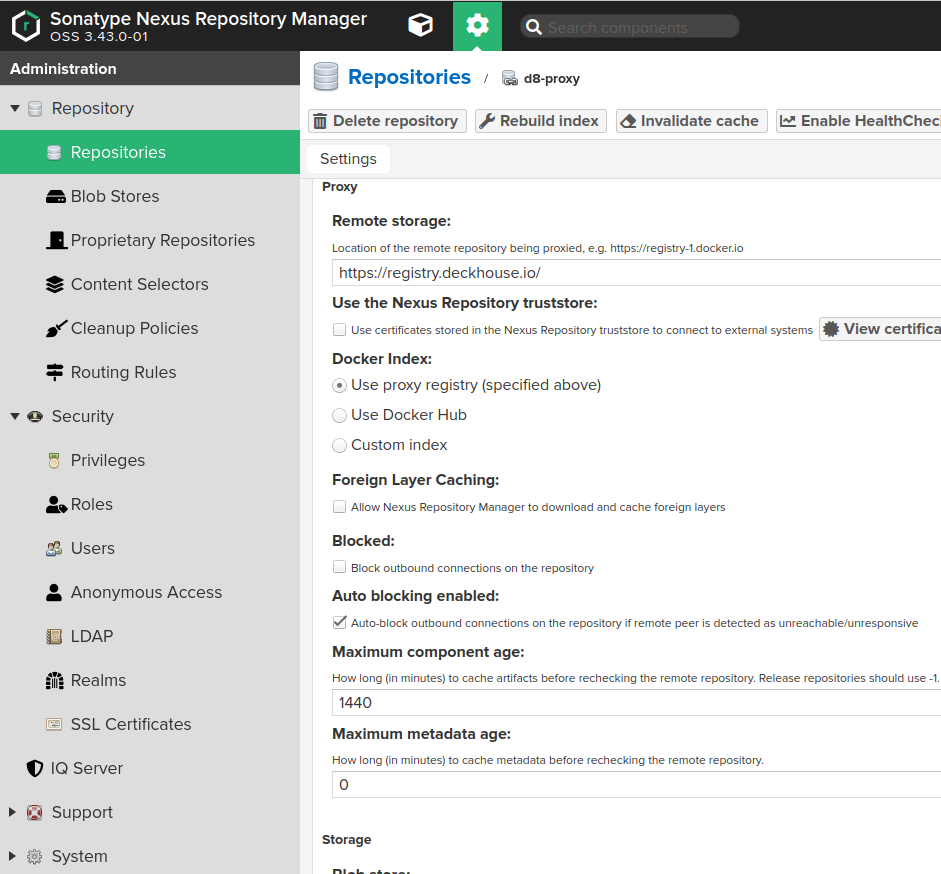

Configuration:

- Create a docker proxy repository (Administration -> Repository -> Repositories) pointing to the Deckhouse registry https://registry.deckhouse.io:
  - Fill in the fields on the Create page as follows:
    - Name must contain the name of the repository you are creating, e.g., d8-proxy.
    - Repository Connectors / HTTP or Repository Connectors / HTTPS must contain a dedicated port for the created repository, e.g., 8123 or other.
    - Remote storage must be set to https://registry.deckhouse.io/.
    - You can disable Auto blocking enabled and Not found cache enabled for debugging purposes; otherwise, they must be enabled.
    - Maximum Metadata Age must be set to 0.
    - Authentication must be enabled if you plan to use a commercial edition of Deckhouse Kubernetes Platform, and the related fields must be set as follows:
      - Authentication Type must be set to Username.
      - Username must be set to license-token.
      - Password must contain your Deckhouse Kubernetes Platform license key.

- Configure Nexus access control to allow Nexus access to the created repository:
  - Create a Nexus role (Administration -> Security -> Roles) with the nx-repository-view-docker-<repository>-browse and nx-repository-view-docker-<repository>-read permissions.
  - Create a user with the role above granted.

Thus, Deckhouse images will be available at https://<NEXUS_HOST>:<REPOSITORY_PORT>/deckhouse/ee:<d8s-version>.

Tips for configuring Harbor

Use the Harbor Proxy Cache feature.

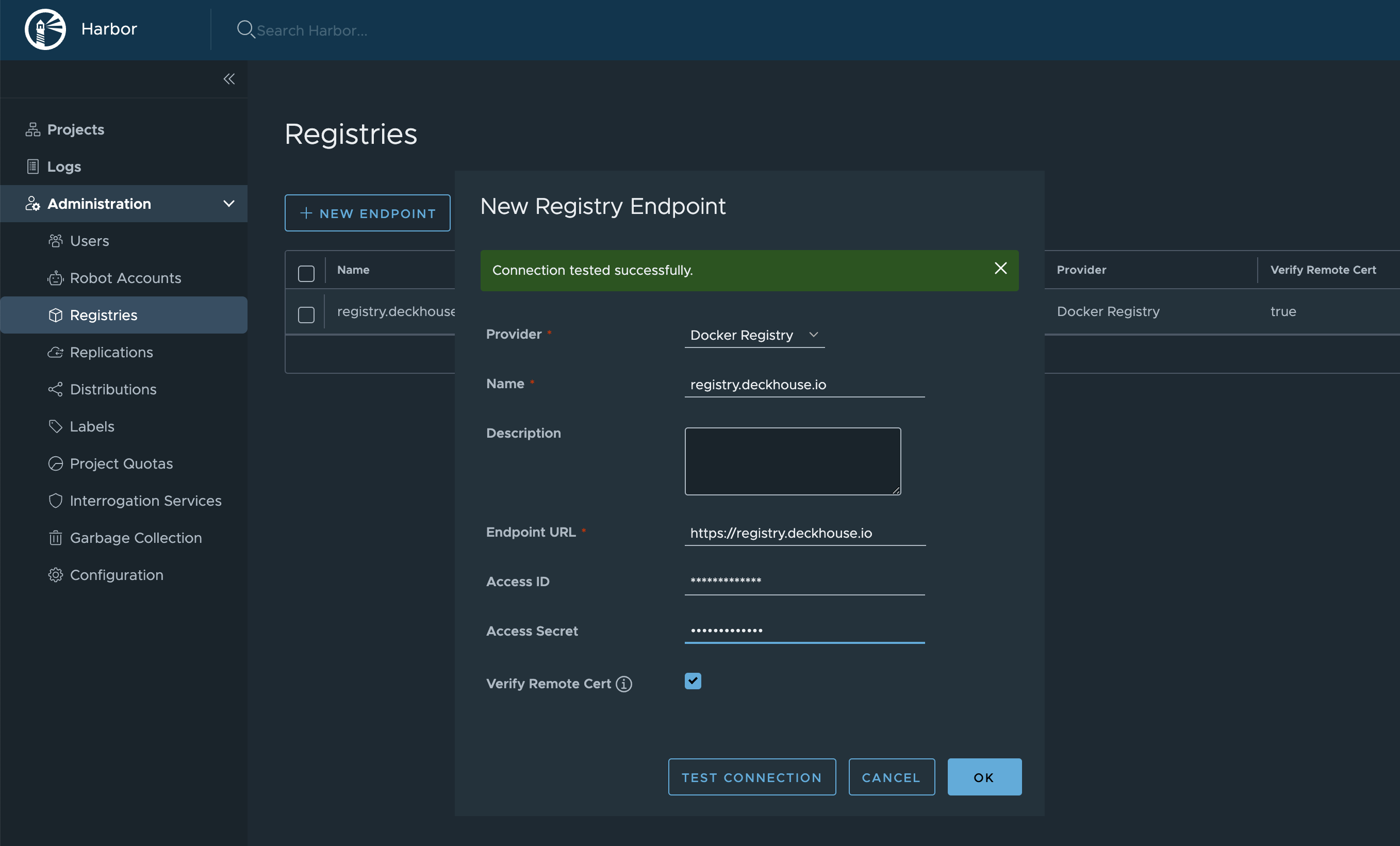

- Create a Registry:

Administration -> Registries -> New Endpoint.Provider:Docker Registry.Name— specify any of your choice.Endpoint URL:https://registry.deckhouse.io.- Specify the

Access IDandAccess Secret(the Deckhouse Kubernetes Platform license key).

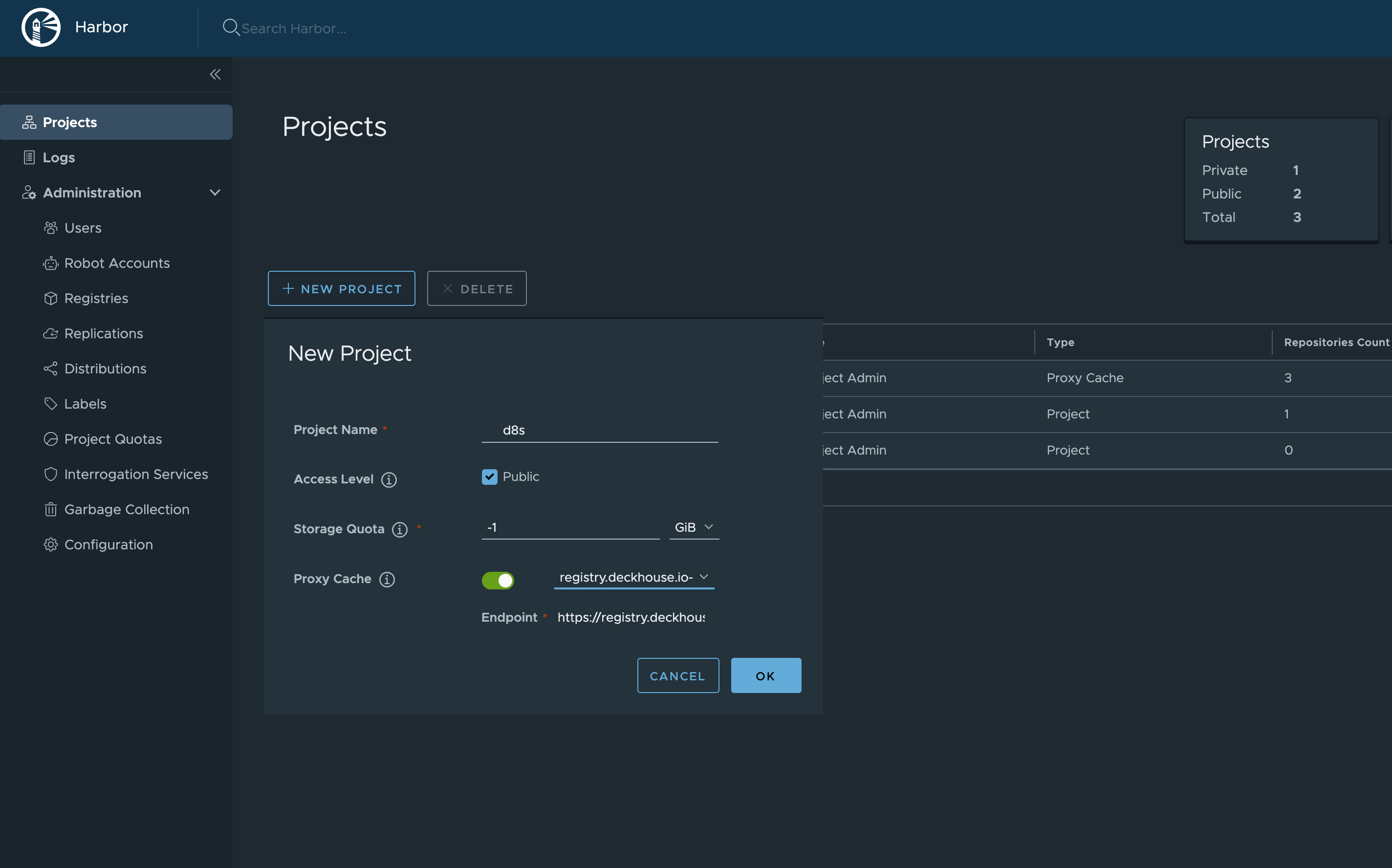

- Create a new Project:

Projects -> New Project.Project Namewill be used in the URL. You can choose any name, for example,d8s.Access Level:Public.Proxy Cache— enable and choose the Registry, created in the previous step.

Thus, Deckhouse images will be available at https://your-harbor.com/d8s/deckhouse/ee:{d8s-version}.

How to generate a self-signed certificate?

When generating certificates manually, it is important to fill out all fields of the certificate request correctly to ensure that the final certificate is issued properly and can be validated across various services.

It is important to follow these guidelines:

- Specify domain names in the SAN (Subject Alternative Name) field.

  The SAN field is a more modern and commonly used method for specifying the domain names covered by the certificate. Some services no longer consider the CN (Common Name) field as the source for domain names.

- Correctly fill out the keyUsage, basicConstraints, and extendedKeyUsage fields, specifically:

  - basicConstraints = CA:FALSE

    This field determines whether the certificate is an end-entity certificate or a certification authority (CA) certificate. CA certificates cannot be used as service certificates.

  - keyUsage = digitalSignature, keyEncipherment

    The keyUsage field limits the permissible usage scenarios of this key:
    - digitalSignature: allows the key to be used for signing digital messages and ensuring data integrity.
    - keyEncipherment: allows the key to be used for encrypting other keys, which is necessary for secure data exchange using TLS (Transport Layer Security).

  - extendedKeyUsage = serverAuth

    The extendedKeyUsage field specifies additional key usage scenarios required by specific protocols or applications:
    - serverAuth: indicates that the certificate is intended for server use, authenticating the server to the client during the establishment of a secure connection.

It is also recommended to:

- Issue the certificate for no more than 1 year (365 days).

  The validity period of the certificate affects its security. A one-year validity ensures the cryptographic methods remain current and allows for timely certificate updates in case of threats. Furthermore, some modern browsers now reject certificates with a validity period longer than 1 year.

- Use robust cryptographic algorithms, such as elliptic curve algorithms (including prime256v1).

  Elliptic curve algorithms (ECC) provide a high level of security with a smaller key size compared to traditional methods like RSA. This makes the certificates more efficient in terms of performance and secure in the long term.

- Do not specify domains in the CN (Common Name) field.

  Historically, the CN field was used to specify the primary domain name for which the certificate was issued. However, modern standards, such as RFC 2818, recommend using the SAN (Subject Alternative Name) field for this purpose. If the certificate is intended for multiple domain names listed in the SAN field, specifying one of the domains additionally in CN can cause a validation error in some services when accessing domains not listed in CN. If non-domain-related information is specified in CN (for example, an identifier or service name), the certificate will also extend to these names, which could be exploited for malicious purposes.

Certificate generation example

To generate a certificate, we’ll use the openssl utility.

- Fill in the cert.cnf configuration file:

[ req ]
default_bits = 2048
default_md = sha256
prompt = no
distinguished_name = dn
req_extensions = req_ext

[ dn ]
C = GB
ST = London
L = London
O = Example Company
OU = IT Department
# CN = Do not specify the CN field.

[ req_ext ]
subjectAltName = @alt_names

[ alt_names ]
# Specify all domain names.
DNS.1 = example.co.uk
DNS.2 = www.example.co.uk
DNS.3 = api.example.co.uk
# Specify IP addresses (if required).
IP.1 = 192.0.2.1
IP.2 = 192.0.4.1

[ v3_ca ]
basicConstraints = CA:FALSE
keyUsage = digitalSignature, keyEncipherment
extendedKeyUsage = serverAuth

[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = digitalSignature, keyEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names

# Elliptic curve parameters.
[ ec_params ]
name = prime256v1

- Generate an elliptic curve key:

openssl ecparam -genkey -name prime256v1 -noout -out ec_private_key.pem

- Create a certificate signing request:

openssl req -new -key ec_private_key.pem -out example.csr -config cert.cnf

- Generate a self-signed certificate:

openssl x509 -req -in example.csr -signkey ec_private_key.pem -out example.crt -days 365 -extensions v3_req -extfile cert.cnf
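After generation, you may want to confirm that the certificate actually follows the guidelines above. The check_server_cert helper below is a hypothetical sketch; it greps the human-readable output of openssl x509 -text, so it relies on OpenSSL's usual wording (for example, "TLS Web Server Authentication" for serverAuth) and may need adjusting for other OpenSSL versions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: checks a certificate against the guidelines above:
# end-entity (CA:FALSE), expected key usages, DNS names in SAN, and no CN.
check_server_cert() {
  local cert="$1" text subject
  text=$(openssl x509 -in "$cert" -noout -text) || return 1
  subject=$(openssl x509 -in "$cert" -noout -subject) || return 1
  grep -q 'CA:FALSE' <<<"$text" || { echo "basicConstraints is not CA:FALSE" >&2; return 1; }
  grep -q 'Digital Signature, Key Encipherment' <<<"$text" || { echo "unexpected keyUsage" >&2; return 1; }
  grep -q 'TLS Web Server Authentication' <<<"$text" || { echo "extendedKeyUsage lacks serverAuth" >&2; return 1; }
  grep -q 'DNS:' <<<"$text" || { echo "no DNS names in SAN" >&2; return 1; }
  # Matches both "CN = x" (OpenSSL 1.1.1+) and "/CN=x" (older) subject formats.
  if grep -Eq '(^|[ /,=])CN ?=' <<<"$subject"; then
    echo "subject contains a CN field" >&2
    return 1
  fi
  echo "ok: $cert"
}

# Usage: check_server_cert example.crt
```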

Manually uploading Deckhouse Kubernetes Platform, vulnerability scanner DB and Deckhouse modules to private registry

The d8 mirror command group is not available for Community Edition (CE) and Basic Edition (BE).

Check releases.deckhouse.io for the current status of the release channels.

- Pull Deckhouse images using the d8 mirror pull command.

By default, d8 mirror pulls only the latest available patch versions for every current Deckhouse release, the latest enterprise security scanner databases (if your edition supports it), and the current set of officially supplied modules. For example, for Deckhouse 1.59, only version 1.59.12 will be pulled, since this is sufficient for updating Deckhouse from 1.58 to 1.59.

Run the following command (specify the edition code and the license key) to download current images:

d8 mirror pull \
  --source='registry.deckhouse.io/deckhouse/<EDITION>' \
  --license='<LICENSE_KEY>' /home/user/d8-bundle

where:

  - <EDITION>: the edition code of the Deckhouse Kubernetes Platform (for example, ee, se, se-plus);
  - <LICENSE_KEY>: the Deckhouse Kubernetes Platform license key;
  - /home/user/d8-bundle: the directory to store the resulting bundle into. It will be created if not present.

If the loading of images is interrupted, rerunning the command resumes the loading, provided no more than a day has passed since it stopped.

You can also use the following command options:

  - --no-pull-resume: to forcefully start the download from the beginning;
  - --no-platform: to skip downloading the Deckhouse Kubernetes Platform package (platform.tar);
  - --no-modules: to skip downloading module packages (module-*.tar);
  - --no-security-db: to skip downloading security scanner databases (security.tar);
  - --since-version=X.Y: to download all versions of Deckhouse starting from the specified minor version. This parameter is ignored if a version higher than the version on the Rock Solid update channel is specified. This parameter cannot be used together with the --deckhouse-tag parameter;
  - --deckhouse-tag: to download only a specific build of Deckhouse (without considering release channels). This parameter cannot be used together with the --since-version parameter;
  - --include-module / -i=name[@Major.Minor]: to download only a specific whitelist of modules (and optionally their minimal versions). Specify it multiple times to whitelist more modules. These flags are ignored if used with --no-modules;
  - --exclude-module / -e=name: to skip downloading a specific blacklisted set of modules. Specify it multiple times to blacklist more modules. Ignored if --no-modules or --include-module is used;
  - --modules-path-suffix: to change the suffix of the module repository path in the main Deckhouse repository. By default, the suffix is /modules (for example, with this default, the full path to the repository with modules looks like registry.deckhouse.io/deckhouse/EDITION/modules);
  - --gost-digest: to calculate the checksums of the bundle in the GOST R 34.11-2012 (Streebog) format. The checksum for each package is displayed and written to a file with the .tar.gostsum extension in the folder with the package;
  - --source: to specify the address of the Deckhouse source registry;
    - To authenticate in the official Deckhouse image registry, use a license key and the --license parameter;
    - To authenticate in a third-party registry, use the --source-login and --source-password parameters;
  - --images-bundle-chunk-size=N: to specify the maximum file size (in GB) to split the image archive into. As a result, instead of a single archive file, a set of .chunk files is created (e.g., d8.tar.NNNN.chunk). To upload images from such a set of files, specify the file name without the .NNNN.chunk suffix in the d8 mirror push command (e.g., d8.tar for files like d8.tar.NNNN.chunk);
  - --tmp-dir: path to a temporary directory to use for image pulling and pushing. All processing is done in this directory, so make sure there is enough free disk space to accommodate the entire bundle you are downloading. By default, the .tmp subdirectory under the bundle directory is used.

Additional configuration options for the d8 mirror family of commands are available as environment variables:

  - HTTP_PROXY / HTTPS_PROXY: URL of the proxy server for HTTP(S) requests to hosts that are not listed in the $NO_PROXY variable;
  - NO_PROXY: comma-separated list of hosts to exclude from proxying. Supported value formats include IP addresses (1.2.3.4), CIDR notations (1.2.3.4/8), domains, and the asterisk character (*). The IP addresses and domain names can also include a literal port number (1.2.3.4:80). A domain name matches that name and all its subdomains. A domain name with a leading . matches subdomains only. For example, foo.com matches foo.com and bar.foo.com; .y.com matches x.y.com but does not match y.com. A single asterisk * indicates that no proxying should be done;
  - SSL_CERT_FILE: path to the SSL certificate. If the variable is set, system certificates are not used;
  - SSL_CERT_DIR: list of directories to search for SSL certificate files, separated by a colon. If set, system certificates are not used;
  - MIRROR_BYPASS_ACCESS_CHECKS: set to 1 to skip validation of registry credentials.

Example of a command to download all versions of Deckhouse EE starting from version 1.59 (provide the license key):

d8 mirror pull \
  --license='<LICENSE_KEY>' \
  --source='registry.deckhouse.io/deckhouse/ee' \
  --since-version=1.59 /home/user/d8-bundle

Example of a command to download versions of Deckhouse SE for every available release channel:

d8 mirror pull \
  --license='<LICENSE_KEY>' \
  --source='registry.deckhouse.io/deckhouse/se' \
  /home/user/d8-bundle

Example of a command to download all versions of Deckhouse hosted on a third-party registry:

d8 mirror pull \
  --source='corp.company.com:5000/sys/deckhouse' \
  --source-login='<USER>' --source-password='<PASSWORD>' /home/user/d8-bundle

Example of a command to download the latest vulnerability scanner databases (if available for your Deckhouse edition):

d8 mirror pull \
  --license='<LICENSE_KEY>' \
  --source='registry.deckhouse.io/deckhouse/ee' \
  --no-platform --no-modules /home/user/d8-bundle

Example of a command to download all Deckhouse modules available in the registry:

d8 mirror pull \
  --license='<LICENSE_KEY>' \
  --source='registry.deckhouse.io/deckhouse/ee' \
  --no-platform --no-security-db /home/user/d8-bundle

Example of a command to download the stronghold and secrets-store-integration Deckhouse modules:

d8 mirror pull \
  --license='<LICENSE_KEY>' \
  --source='registry.deckhouse.io/deckhouse/ee' \
  --no-platform --no-security-db \
  --include-module stronghold \
  --include-module secrets-store-integration \
  /home/user/d8-bundle

- Upload the bundle with the pulled Deckhouse images to a host with access to the air-gapped registry and install the Deckhouse CLI tool onto it.

- Push the images to the air-gapped registry using the d8 mirror push command.

The d8 mirror push command uploads images from all packages present in the given directory to the repository. If you need to upload only some specific packages, you can either run the command for each required package, passing the direct path to the tar package instead of the directory, or remove the .tar extension from the unnecessary packages or move them outside the directory.

Example of a command for pushing images from the /mnt/MEDIA/d8-images directory (specify authorization data if necessary):

d8 mirror push /mnt/MEDIA/d8-images 'corp.company.com:5000/sys/deckhouse' \
  --registry-login='<USER>' --registry-password='<PASSWORD>'

Before pushing images, make sure that the path for loading into the registry exists (/sys/deckhouse in the example above) and that the account being used has write permissions. Harbor users, please note: you will not be able to upload images to the project root; instead, use a dedicated repository in the project to host Deckhouse images.

- Once pushing images to the air-gapped private registry is complete, you are ready to install Deckhouse from it. Refer to the Getting started guide.

When launching the installer, use the repository where Deckhouse images have previously been loaded instead of the official Deckhouse registry. For example, the address for launching the installer will look like corp.company.com:5000/sys/deckhouse/install:stable instead of registry.deckhouse.io/deckhouse/ee/install:stable.

During installation, add your registry address and authorization data to the InitConfiguration resource (the imagesRepo and registryDockerCfg parameters; you might refer to step 3 of the Getting started guide as well).
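When preparing NO_PROXY for the d8 mirror commands described above, it can help to check which host names would bypass the proxy. The no_proxy_matches helper below is a hypothetical sketch that reproduces only the domain rules listed for NO_PROXY (plain domain, leading dot, asterisk); it does not handle IP addresses, CIDR ranges, or ports, and it treats an asterisk anywhere in the list as "match everything", which is a simplification.

```shell
#!/usr/bin/env bash
# Hypothetical helper: returns 0 if a host name would bypass the proxy
# according to the NO_PROXY domain rules described above.
# IP addresses, CIDR ranges and ports are deliberately not handled.
no_proxy_matches() {
  local host="$1" entry
  local -a entries
  IFS=',' read -r -a entries <<<"$2"
  for entry in "${entries[@]}"; do
    entry="${entry// /}"               # drop spaces around list items
    [ -z "$entry" ] && continue
    [ "$entry" = "*" ] && return 0     # asterisk: no proxying at all
    if [ "${entry#.}" != "$entry" ]; then
      # Leading dot: match subdomains only.
      case "$host" in *"$entry") return 0 ;; esac
    else
      # Plain domain: match the name itself and all its subdomains.
      [ "$host" = "$entry" ] && return 0
      case "$host" in *".$entry") return 0 ;; esac
    fi
  done
  return 1
}

# Usage: no_proxy_matches registry.corp.local "$NO_PROXY" && echo "direct connection"
```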

How do I switch a running Deckhouse cluster to use a third-party registry?

Using a registry other than registry.deckhouse.io is only available in a commercial edition of Deckhouse Kubernetes Platform.

To switch the Deckhouse cluster to using a third-party registry, follow these steps:

- Run deckhouse-controller helper change-registry inside the Deckhouse Pod with the new registry settings.

Example:

d8 k -n d8-system exec -ti svc/deckhouse-leader -c deckhouse -- deckhouse-controller helper change-registry --user MY-USER --password MY-PASSWORD registry.example.com/deckhouse/ee

If the registry uses a self-signed certificate, put the root CA certificate that validates the registry's HTTPS certificate into the /tmp/ca.crt file in the Deckhouse Pod and add the --ca-file /tmp/ca.crt option to the script, or put the CA content into a variable as follows:

CA_CONTENT=$(cat <<EOF
-----BEGIN CERTIFICATE-----
CERTIFICATE
-----END CERTIFICATE-----
-----BEGIN CERTIFICATE-----
CERTIFICATE
-----END CERTIFICATE-----
EOF
)
d8 k -n d8-system exec svc/deckhouse-leader -c deckhouse -- bash -c "echo '$CA_CONTENT' > /tmp/ca.crt && deckhouse-controller helper change-registry --ca-file /tmp/ca.crt --user MY-USER --password MY-PASSWORD registry.example.com/deckhouse/ee"

To view the list of available flags of the deckhouse-controller helper change-registry command, run the following command:

d8 k -n d8-system exec -ti svc/deckhouse-leader -c deckhouse -- deckhouse-controller helper change-registry --help

Example output:

usage: deckhouse-controller helper change-registry [<flags>] <new-registry>

Change registry for deckhouse images.

Flags:
  --help                 Show context-sensitive help (also try --help-long and --help-man).
  --user=USER            User with pull access to registry.
  --password=PASSWORD    Password/token for registry user.
  --ca-file=CA-FILE      Path to registry CA.
  --scheme=SCHEME        Used scheme while connecting to registry, "http" or "https".
  --dry-run              Don't change deckhouse resources, only print them.
  --new-deckhouse-tag=NEW-DECKHOUSE-TAG
                         New tag that will be used for deckhouse deployment image (by default current tag from deckhouse deployment will be used).

Args:
  <new-registry>  Registry that will be used for deckhouse images (example: registry.deckhouse.io/deckhouse/ce). By default, https will be used, if you need http - provide '--scheme' flag with http value

- Wait for the Deckhouse Pod to become Ready. If the Pod is stuck in the ImagePullBackOff state, restart it.
- Wait for bashible to apply the new settings on the master node. The bashible log on the master node (journalctl -u bashible) should contain the message "Configuration is in sync, nothing to do".
- If you want to disable automatic Deckhouse updates, remove the releaseChannel parameter from the deckhouse module configuration.
- Check whether any Pods in the cluster still use the original registry and, if so, restart them:

d8 k get pods -A -o json | jq -r '.items[] | select(.spec.containers[] | select(.image | startswith("registry.deckhouse"))) | .metadata.namespace + "\t" + .metadata.name' | sort | uniq
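Because the helper supports --dry-run (see the help output above), it can be convenient to preview the changes before applying them. The wrapper below is a hypothetical convenience function, not part of Deckhouse: it assembles only flags listed in the help output, adds --dry-run unless DRY_RUN=0 is set, and, with PRINT_ONLY=1, prints the command instead of running it in the deckhouse-leader Pod.

```shell
# Hypothetical wrapper (not part of Deckhouse) around the change-registry helper.
# Arguments: <new-registry> [user] [password] [ca-file-path-inside-the-pod]
# DRY_RUN=1 (the default) adds --dry-run; PRINT_ONLY=1 prints the command
# instead of running it in the deckhouse-leader Pod.
change_registry() {
  local registry="$1" user="${2:-}" password="${3:-}" ca_file="${4:-}"
  # Rebuild the positional parameters as the argument list of the helper.
  set -- deckhouse-controller helper change-registry
  if [ -n "$user" ]; then set -- "$@" "--user=$user"; fi
  if [ -n "$password" ]; then set -- "$@" "--password=$password"; fi
  if [ -n "$ca_file" ]; then set -- "$@" "--ca-file=$ca_file"; fi
  if [ "${DRY_RUN:-1}" = 1 ]; then set -- "$@" --dry-run; fi
  set -- "$@" "$registry"

  if [ "${PRINT_ONLY:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    d8 k -n d8-system exec -ti svc/deckhouse-leader -c deckhouse -- "$@"
  fi
}

# Preview the changes first, then apply them:
#   change_registry registry.example.com/deckhouse/ee MY-USER MY-PASSWORD
#   DRY_RUN=0 change_registry registry.example.com/deckhouse/ee MY-USER MY-PASSWORD
```

Defaulting to a dry run is a deliberate choice: an accidental invocation only prints the resources that would change.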

How to bootstrap a cluster and run Deckhouse without using release channels?

This method should only be used if there are no release channel images in your air-gapped registry.

If you want to install Deckhouse with automatic updates disabled:

- Use the installer image tag of the corresponding version. For example, to install the v1.44.3 release, use the your.private.registry.com/deckhouse/install:v1.44.3 image.
- Specify the corresponding version number in the deckhouse.devBranch parameter of the InitConfiguration resource.

Do not specify the deckhouse.releaseChannel parameter in the InitConfiguration resource.
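The installer image tag and the devBranch value have to refer to the same release. A minimal sketch that derives both from one tag so they cannot drift apart is shown below; it assumes tags look like vMAJOR.MINOR.PATCH and that devBranch takes the same string as the image tag (check the InitConfiguration reference for your version). The function name is hypothetical.

```shell
# Hypothetical helper: derive the installer image and the devBranch value from
# one release tag. Assumes tags look like vMAJOR.MINOR.PATCH and that devBranch
# takes the same string as the image tag.
pinned_install_refs() {
  local repo="$1" tag="$2"
  # Reject channel names such as "stable": this path is for pinned versions only.
  if ! printf '%s\n' "$tag" | grep -Eq '^v[0-9]+\.[0-9]+\.[0-9]+$'; then
    echo "unexpected tag format: $tag" >&2
    return 1
  fi
  echo "installer image: ${repo}/install:${tag}"
  echo "deckhouse.devBranch: ${tag}"
}

# Usage:
#   pinned_install_refs your.private.registry.com/deckhouse v1.44.3
```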

If you want to disable automatic updates for an already installed Deckhouse (including patch release updates), remove the releaseChannel parameter from the deckhouse module configuration.

How do I use a proxy server?

This feature is available in the following editions: BE, SE, SE+, EE.

Use the proxy parameter of the ClusterConfiguration resource to configure proxy usage.

An example:

apiVersion: deckhouse.io/v1
kind: ClusterConfiguration
clusterType: Cloud
cloud:
  provider: OpenStack
  prefix: main
podSubnetCIDR: 10.111.0.0/16
serviceSubnetCIDR: 10.222.0.0/16
kubernetesVersion: "Automatic"
cri: "Containerd"
clusterDomain: "cluster.local"
proxy:
  httpProxy: "http://user:password@proxy.company.my:3128"
  httpsProxy: "https://user:password@proxy.company.my:8443"

How do I set up proxy variable autoloading in the CLI?

Since DKP v1.67, the file /etc/profile.d/d8-system-proxy.sh, which sets proxy variables for users, is no longer configurable. To autoload proxy variables for users at the CLI, use the NodeGroupConfiguration resource:

apiVersion: deckhouse.io/v1alpha1
kind: NodeGroupConfiguration
metadata:
  name: profile-proxy.sh
spec:
  bundles:
    - '*'
  nodeGroups:
    - '*'
  weight: 99
  content: |
    {{- if .proxy }}
      {{- if .proxy.httpProxy }}
    export HTTP_PROXY={{ .proxy.httpProxy | quote }}
    export http_proxy=${HTTP_PROXY}
      {{- end }}
      {{- if .proxy.httpsProxy }}
    export HTTPS_PROXY={{ .proxy.httpsProxy | quote }}
    export https_proxy=${HTTPS_PROXY}
      {{- end }}
      {{- if .proxy.noProxy }}
    export NO_PROXY={{ .proxy.noProxy | join "," | quote }}
    export no_proxy=${NO_PROXY}
      {{- end }}
    bb-sync-file /etc/profile.d/profile-proxy.sh - << EOF
    export HTTP_PROXY=${HTTP_PROXY}
    export http_proxy=${HTTP_PROXY}
    export HTTPS_PROXY=${HTTPS_PROXY}
    export https_proxy=${HTTPS_PROXY}
    export NO_PROXY=${NO_PROXY}
    export no_proxy=${NO_PROXY}
    EOF
    {{- else }}
    rm -rf /etc/profile.d/profile-proxy.sh
    {{- end }}
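To check what users will get in their shell before applying the resource, you can preview the file locally. The function below is a sketch, not part of Deckhouse: it follows the template above (noProxy entries joined with commas, each variable exported in upper and lower case) and covers only the branch where a proxy is configured; the function name and argument order are assumptions.

```shell
# Local preview (a sketch, not part of Deckhouse): print what
# /etc/profile.d/profile-proxy.sh would contain for the given proxy settings,
# following the template above. noProxy entries are passed as extra arguments.
render_profile_proxy() {
  local http="$1" https="$2" no
  shift 2
  # Join the remaining arguments with commas, like: .proxy.noProxy | join ","
  no=$(IFS=,; printf '%s' "$*")
  cat <<EOF
export HTTP_PROXY=${http}
export http_proxy=${http}
export HTTPS_PROXY=${https}
export https_proxy=${https}
export NO_PROXY=${no}
export no_proxy=${no}
EOF
}

# Usage:
#   render_profile_proxy "http://user:password@proxy.company.my:3128" \
#     "https://user:password@proxy.company.my:8443" 127.0.0.1 .cluster.local
```

As in the template, an unset value (for example, no httpsProxy) ends up as an empty export in the file rather than being omitted.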

Changing the configuration

To apply node configuration changes, run the dhctl converge command using the Deckhouse installer. This command synchronizes the state of the nodes with the specified configuration.

How do I change the configuration of a cluster?

The general cluster parameters are stored in the ClusterConfiguration structure.

To change the general cluster parameters, run the command:

d8 platform edit cluster-configuration

After you save the changes, Deckhouse will bring the cluster to the state defined by the updated configuration. Depending on the size of the cluster, this may take some time.

How do I change the configuration of a cloud provider in a cluster?

Cloud provider settings of a cloud or hybrid cluster are stored in the <PROVIDER_NAME>ClusterConfiguration structure, where <PROVIDER_NAME> is the name/code of the cloud provider. For example, for the OpenStack provider, the structure is called OpenStackClusterConfiguration.

Regardless of the cloud provider used, its settings can be changed using the following command:

d8 p edit provider-cluster-configuration

How do I change the configuration of a static cluster?

Settings of a static cluster are stored in the StaticClusterConfiguration structure.

To change the settings of a static cluster, run the command:

d8 p edit static-cluster-configuration

How to switch Deckhouse edition to CE/BE/SE/SE+/EE?

- This guide has been validated for Deckhouse versions starting from v1.70. If your version is older, use the corresponding documentation.
- For commercial editions, you need a valid license key that supports the desired edition. If necessary, you can request a temporary key.
- The guide assumes the use of the public container registry address registry.deckhouse.io. If you are using a different container registry address, modify the commands accordingly or refer to the guide on switching Deckhouse to use a different registry.
- The Deckhouse CE/BE/SE/SE+ editions do not support the dynamix, openstack, VCD, and vSphere cloud providers (vSphere is supported in SE+) or a number of modules. A detailed comparison is available in the documentation.
- All commands are executed on the master node of the existing cluster as the root user.
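Since the guide applies only from v1.70 onward, you may want to check the running version before starting. The following is a minimal sketch that compares dotted versions field by field in awk; it assumes the deckhouse image tag is a plain version such as v1.70.3 (the same assumption the DECKHOUSE_VERSION commands in this guide make), and the function name is hypothetical.

```shell
# Sketch: succeed if the running Deckhouse version is at least the required one.
# Fields are compared numerically; a missing field counts as 0 (v1.70 == v1.70.0).
version_at_least() {
  awk -v cur="${1#v}" -v req="${2#v}" 'BEGIN {
    nc = split(cur, c, "."); nr = split(req, r, ".")
    n = (nc > nr) ? nc : nr
    for (i = 1; i <= n; i++) {
      if ((c[i] + 0) > (r[i] + 0)) exit 0
      if ((c[i] + 0) < (r[i] + 0)) exit 1
    }
    exit 0
  }'
}

# Usage, assuming the deckhouse image tag is a version such as v1.70.3:
#   DECKHOUSE_VERSION=$(d8 k -n d8-system get deploy deckhouse -ojson | jq -r '.spec.template.spec.containers[] | select(.name == "deckhouse") | .image' | awk -F: '{print $NF}')
#   version_at_least "$DECKHOUSE_VERSION" v1.70 || echo "Use the documentation for your version."
```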

How to switch using the registry module?

- Make sure the cluster has been migrated to be managed by the registry module.

If the cluster is not managed by the registry module, proceed to the instructions.

- Prepare variables for the license token and the new edition name:

The LICENSE_TOKEN variable is not needed when switching to Deckhouse CE, but NEW_EDITION must still be set, since the commands below use it. The NEW_EDITION variable must match the desired Deckhouse edition. For example, to switch to:
  - CE, set it to ce;
  - BE, set it to be;
  - SE, set it to se;
  - SE+, set it to se-plus;
  - EE, set it to ee.

NEW_EDITION=<PUT_YOUR_EDITION_HERE>
LICENSE_TOKEN=<PUT_YOUR_LICENSE_TOKEN_HERE>

- Ensure the Deckhouse queue is empty and error-free.

- Start a temporary pod for the new Deckhouse edition to obtain the current digests and the list of modules:

For the CE edition:

DECKHOUSE_VERSION=$(d8 k -n d8-system get deploy deckhouse -ojson | jq -r '.spec.template.spec.containers[] | select(.name == "deckhouse") | .image' | awk -F: '{print $NF}')
d8 k run $NEW_EDITION-image --image=registry.deckhouse.ru/deckhouse/$NEW_EDITION/install:$DECKHOUSE_VERSION --command sleep -- infinity

For other editions:

d8 k create secret docker-registry $NEW_EDITION-image-pull-secret \
  --docker-server=registry.deckhouse.ru \
  --docker-username=license-token \
  --docker-password=${LICENSE_TOKEN}
DECKHOUSE_VERSION=$(d8 k -n d8-system get deploy deckhouse -ojson | jq -r '.spec.template.spec.containers[] | select(.name == "deckhouse") | .image' | awk -F: '{print $NF}')
d8 k run $NEW_EDITION-image \
  --image=registry.deckhouse.ru/deckhouse/$NEW_EDITION/install:$DECKHOUSE_VERSION \
  --overrides="{\"spec\": {\"imagePullSecrets\":[{\"name\": \"$NEW_EDITION-image-pull-secret\"}]}}" \
  --command sleep -- infinity

Once the pod is in the Running state, execute the following commands:

NEW_EDITION_MODULES=$(d8 k exec $NEW_EDITION-image -- ls -l deckhouse/modules/ | awk {'print $9'} | grep -oP "\d.*-\w*" | cut -c5-)
USED_MODULES=$(d8 k get modules -o custom-columns=NAME:.metadata.name,SOURCE:.properties.source,STATE:.properties.state,ENABLED:.status.phase | grep Embedded | grep -E 'Enabled|Ready' | awk {'print $1'})
MODULES_WILL_DISABLE=$(echo $USED_MODULES | tr ' ' '\n' | grep -Fxv -f <(echo $NEW_EDITION_MODULES | tr ' ' '\n'))

- Verify that the modules used in the cluster are supported in the desired edition. To see the list of modules that are not supported in the new edition and will be disabled, run:

echo $MODULES_WILL_DISABLE

Check the list to make sure the functionality of these modules is not in use in your cluster and that you are ready to disable them.

Disable the modules not supported by the new edition:

echo $MODULES_WILL_DISABLE | tr ' ' '\n' | awk {'print "d8 platform module disable",$1'} | bash

Wait for the Deckhouse pod to reach the Ready state and ensure all tasks in the queue are completed.

- Delete the created Secret and Pod (the Secret exists only if you created it for a commercial edition):

d8 k delete pod/$NEW_EDITION-image
d8 k delete secret/$NEW_EDITION-image-pull-secret

- Perform the switch to the new edition. To do this, specify the following parameters in the deckhouse ModuleConfig. For detailed configuration, refer to the deckhouse module documentation.

---
# Example for Direct mode.
apiVersion: deckhouse.io/v1alpha1
kind: ModuleConfig
metadata:
  name: deckhouse
spec:
  version: 1
  enabled: true
  settings:
    registry:
      mode: Direct
      direct:
        # Relax mode is used to check for the presence of the current Deckhouse version in the specified registry.
        # This mode must be used to switch between editions.
        checkMode: Relax
        # Specify your value for <NEW_EDITION>.
        imagesRepo: registry.deckhouse.ru/deckhouse/<NEW_EDITION>
        scheme: HTTPS
        # Specify your value for <LICENSE_TOKEN>.
        # If switching to the CE edition, remove this parameter.
        license: <LICENSE_TOKEN>
---
# Example for Unmanaged mode.
apiVersion: deckhouse.io/v1alpha1
kind: ModuleConfig
metadata:
  name: deckhouse
spec:
  version: 1
  enabled: true
  settings:
    registry:
      mode: Unmanaged
      unmanaged:
        # Relax mode is used to check for the presence of the current Deckhouse version in the specified registry.
        # This mode must be used to switch between editions.
        checkMode: Relax
        # Specify your value for <NEW_EDITION>.
        imagesRepo: registry.deckhouse.ru/deckhouse/<NEW_EDITION>
        scheme: HTTPS
        # Specify your value for <LICENSE_TOKEN>.
        # If switching to the CE edition, remove this parameter.
        license: <LICENSE_TOKEN>

- Wait for the registry to switch. To verify the switch progress, follow the instructions.

Example output:

conditions:
- lastTransitionTime: "..."
  message: |-
    Mode: Relax
    registry.deckhouse.ru: all 1 items are checked
  reason: Ready
  status: "True"
  type: RegistryContainsRequiredImages
# ...
- lastTransitionTime: "..."
  message: ""
  reason: ""
  status: "True"
  type: Ready

- After the switch, remove the checkMode: Relax parameter from the deckhouse ModuleConfig to revert to the default check mode.

Removing this parameter will trigger a check for the presence of critical components in the registry.

- Wait for the check to complete by following the instructions.

Example output:

conditions:
- lastTransitionTime: "..."
  message: |-
    Mode: Default
    registry.deckhouse.ru: all 155 items are checked
  reason: Ready
  status: "True"
  type: RegistryContainsRequiredImages
# ...
- lastTransitionTime: "..."
  message: ""
  reason: ""
  status: "True"
  type: Ready

- Check whether any pods with the old Deckhouse edition address remain in the cluster, where <YOUR-PREVIOUS-EDITION> is the name of your previous edition.

For Unmanaged mode:

d8 k get pods -A -o json | jq -r '.items[] | select(.spec.containers[] | select(.image | contains("deckhouse.ru/deckhouse/<YOUR-PREVIOUS-EDITION>"))) | .metadata.namespace + "\t" + .metadata.name' | sort | uniq

For other modes that use a fixed registry address (this check does not take external modules into account):

# Get the list of valid digest values from the images_digests.json file inside Deckhouse.
IMAGES_DIGESTS=$(d8 k -n d8-system exec -i svc/deckhouse-leader -c deckhouse -- cat /deckhouse/modules/images_digests.json | jq -r '.[][]' | sort -u)
# Check for Pods using Deckhouse images from `registry.d8-system.svc:5001/system/deckhouse`
# with a digest that is NOT present in the list of valid digest values (IMAGES_DIGESTS).
d8 k get pods -A -o json | jq -r --argjson digests "$(printf '%s\n' $IMAGES_DIGESTS | jq -R . | jq -s .)" '
  .items[]
  | {name: .metadata.name, namespace: .metadata.namespace, containers: .spec.containers}
  | select(.containers != null)
  | select(
      .containers[]
      | select(.image | test("registry.d8-system.svc:5001/system/deckhouse") and test("@sha256:"))
      | .image as $img
      | ($img | split("@") | last) as $digest
      | ($digest | IN($digests[]) | not)
    )
  | .namespace + "\t" + .name
' | sort -u
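The edition naming and the MODULES_WILL_DISABLE pipeline from the steps above can be expressed as two small functions, which makes the set difference easier to test in isolation. This is a sketch with hypothetical function names: modules_will_disable strips a numeric prefix of any length (instead of the fixed-width cut -c5-) and skips directory entries that have no prefix, which is an assumption about the layout of deckhouse/modules/.

```shell
# edition_code: map an edition name to the NEW_EDITION value listed above.
edition_code() {
  case "$1" in
    CE) echo ce ;;
    BE) echo be ;;
    SE) echo se ;;
    "SE+") echo se-plus ;;
    EE) echo ee ;;
    *) echo "unknown edition: $1" >&2; return 1 ;;
  esac
}

# modules_will_disable: the set difference behind MODULES_WILL_DISABLE.
# $1 - directory names from deckhouse/modules/ of the new edition ("040-node-manager"),
# $2 - names of enabled Embedded modules ("node-manager"); both newline-separated.
modules_will_disable() {
  local new_dirs="$1" used="$2" available
  # Strip the numeric prefix of any length; entries without one are skipped.
  available=$(printf '%s\n' "$new_dirs" | sed -n 's/^[0-9][0-9]*-//p')
  # Print each enabled module that is missing from the new edition.
  printf '%s\n' "$used" |
    AVAILABLE="$available" awk '
      BEGIN { n = split(ENVIRON["AVAILABLE"], a, "\n"); for (i = 1; i <= n; i++) have[a[i]] = 1 }
      NF && !($0 in have)
    ' | sort -u
}

# Usage:
#   NEW_EDITION=$(edition_code "SE+")
#   modules_will_disable "$(d8 k exec $NEW_EDITION-image -- ls deckhouse/modules/)" "$(echo $USED_MODULES | tr ' ' '\n')"
```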

How to switch without using the registry module?

- Before you begin, disable the registry module by following the instructions.

- Prepare variables for the license token and the new edition name:

The LICENSE_TOKEN and AUTH_STRING variables are not needed when switching to Deckhouse CE, but NEW_EDITION must still be set, since the commands below use it. The NEW_EDITION variable must match the desired Deckhouse edition. For example, to switch to:
  - CE, set it to ce;
  - BE, set it to be;
  - SE, set it to se;
  - SE+, set it to se-plus;
  - EE, set it to ee.

NEW_EDITION=<PUT_YOUR_EDITION_HERE>
LICENSE_TOKEN=<PUT_YOUR_LICENSE_TOKEN_HERE>
AUTH_STRING="$(echo -n license-token:${LICENSE_TOKEN} | base64 )"

- Ensure the Deckhouse queue is empty and error-free.

- Create a NodeGroupConfiguration resource for temporary authorization in registry.deckhouse.io:

Before creating the resource, refer to the section How to add configuration for an additional registry.

Skip this step if switching to Deckhouse CE.

d8 k apply -f - <<EOF
apiVersion: deckhouse.io/v1alpha1
kind: NodeGroupConfiguration
metadata:
  name: containerd-$NEW_EDITION-config.sh
spec:
  nodeGroups:
  - '*'
  bundles:
  - '*'
  weight: 30
  content: |
    _on_containerd_config_changed() {
      bb-flag-set containerd-need-restart
    }
    bb-event-on 'containerd-config-file-changed' '_on_containerd_config_changed'

    mkdir -p /etc/containerd/conf.d
    bb-sync-file /etc/containerd/conf.d/$NEW_EDITION-registry.toml - containerd-config-file-changed << "EOF_TOML"
    [plugins]
      [plugins."io.containerd.grpc.v1.cri"]
        [plugins."io.containerd.grpc.v1.cri".registry.configs]
          [plugins."io.containerd.grpc.v1.cri".registry.configs."registry.deckhouse.io".auth]
            auth = "$AUTH_STRING"
    EOF_TOML
EOF

Wait for the /etc/containerd/conf.d/$NEW_EDITION-registry.toml file to appear on the nodes and for bashible synchronization to complete. To track the synchronization status, check the UPTODATE value (the number of nodes in this status should match the total number of nodes (NODES) in the group):

d8 k get ng -o custom-columns=NAME:.metadata.name,NODES:.status.nodes,READY:.status.ready,UPTODATE:.status.upToDate -w

Example output:

NAME     NODES   READY   UPTODATE
master   1       1       1
worker   2       2       2

Also, the message "Configuration is in sync, nothing to do" should appear in the bashible systemd service log. Check it with the following command:

journalctl -u bashible -n 5

Example output:

Aug 21 11:04:28 master-ee-to-se-0 bashible.sh[53407]: Configuration is in sync, nothing to do.
Aug 21 11:04:28 master-ee-to-se-0 bashible.sh[53407]: Annotate node master-ee-to-se-0 with annotation node.deckhouse.io/configuration-checksum=9cbe6db6c91574b8b732108a654c99423733b20f04848d0b4e1e2dadb231206a
Aug 21 11:04:29 master-ee-to-se-0 bashible.sh[53407]: Successful annotate node master-ee-to-se-0 with annotation node.deckhouse.io/configuration-checksum=9cbe6db6c91574b8b732108a654c99423733b20f04848d0b4e1e2dadb231206a
Aug 21 11:04:29 master-ee-to-se-0 systemd[1]: bashible.service: Deactivated successfully.

- Start a temporary pod for the new Deckhouse edition to obtain the current digests and the list of modules:

DECKHOUSE_VERSION=$(d8 k -n d8-system get deploy deckhouse -ojson | jq -r '.spec.template.spec.containers[] | select(.name == "deckhouse") | .image' | awk -F: '{print $NF}')
d8 k run $NEW_EDITION-image --image=registry.deckhouse.io/deckhouse/$NEW_EDITION/install:$DECKHOUSE_VERSION --command sleep -- infinity

- Once the pod is in the Running state, execute the following commands:

NEW_EDITION_MODULES=$(d8 k exec $NEW_EDITION-image -- ls -l deckhouse/modules/ | awk {'print $9'} | grep -oP "\d.*-\w*" | cut -c5-)
USED_MODULES=$(d8 k get modules -o custom-columns=NAME:.metadata.name,SOURCE:.properties.source,STATE:.properties.state,ENABLED:.status.phase | grep Embedded | grep -E 'Enabled|Ready' | awk {'print $1'})
MODULES_WILL_DISABLE=$(echo $USED_MODULES | tr ' ' '\n' | grep -Fxv -f <(echo $NEW_EDITION_MODULES | tr ' ' '\n'))

- Verify that the modules used in the cluster are supported in the desired edition. To see the list of modules that are not supported in the new edition and will be disabled, run:

echo $MODULES_WILL_DISABLE

Check the list to make sure the functionality of these modules is not in use in your cluster and that you are ready to disable them.

Disable the modules not supported by the new edition:

echo $MODULES_WILL_DISABLE | tr ' ' '\n' | awk {'print "d8 platform module disable",$1'} | bash

Wait for the Deckhouse pod to reach the Ready state and ensure all tasks in the queue are completed.

- Execute the deckhouse-controller helper change-registry command from the Deckhouse pod with the new edition parameters.

To switch to the BE/SE/SE+/EE editions:

DOCKER_CONFIG_JSON=$(echo -n "{\"auths\": {\"registry.deckhouse.io\": {\"username\": \"license-token\", \"password\": \"${LICENSE_TOKEN}\", \"auth\": \"${AUTH_STRING}\"}}}" | base64 -w 0)
d8 k --as system:sudouser -n d8-cloud-instance-manager patch secret deckhouse-registry --type merge --patch="{\"data\":{\".dockerconfigjson\":\"$DOCKER_CONFIG_JSON\"}}"
d8 k -n d8-system exec -ti svc/deckhouse-leader -c deckhouse -- deckhouse-controller helper change-registry --user=license-token --password=$LICENSE_TOKEN --new-deckhouse-tag=$DECKHOUSE_VERSION registry.deckhouse.io/deckhouse/$NEW_EDITION

To switch to the CE edition:

d8 k -n d8-system exec -ti svc/deckhouse-leader -c deckhouse -- deckhouse-controller helper change-registry --new-deckhouse-tag=$DECKHOUSE_VERSION registry.deckhouse.io/deckhouse/ce

- Check whether any pods with the old Deckhouse edition address remain in the cluster, where <YOUR-PREVIOUS-EDITION> is the name of your previous edition:

d8 k get pods -A -o json | jq -r '.items[] | select(.spec.containers[] | select(.image | contains("deckhouse.io/deckhouse/<YOUR-PREVIOUS-EDITION>"))) | .metadata.namespace + "\t" + .metadata.name' | sort | uniq

- Delete the temporary files, the NodeGroupConfiguration resource, and the variables:

Skip this step if switching to Deckhouse CE.

d8 k delete ngc containerd-$NEW_EDITION-config.sh
d8 k delete pod $NEW_EDITION-image
d8 k apply -f - <<EOF
apiVersion: deckhouse.io/v1alpha1
kind: NodeGroupConfiguration
metadata:
  name: del-temp-config.sh
spec:
  nodeGroups:
  - '*'
  bundles:
  - '*'
  weight: 90
  content: |
    if [ -f /etc/containerd/conf.d/$NEW_EDITION-registry.toml ]; then
      rm -f /etc/containerd/conf.d/$NEW_EDITION-registry.toml
    fi
EOF

After the bashible synchronization completes (the synchronization status on the nodes is shown by the UPTODATE value in NodeGroup), delete the created NodeGroupConfiguration resource:

d8 k delete ngc del-temp-config.sh
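Before applying the temporary NodeGroupConfiguration, it can help to preview the containerd drop-in it writes to the nodes and to confirm that the embedded auth value decodes to license-token:<token>. The function below is a sketch with a hypothetical name that reproduces that TOML locally. It pipes through tr -d '\n' because GNU base64 wraps its output at 76 characters by default, which would break the TOML string for long inputs; the echo -n | base64 form used for AUTH_STRING above has no such guard.

```shell
# Sketch: preview the containerd drop-in written by the temporary
# NodeGroupConfiguration (for example /etc/containerd/conf.d/se-registry.toml).
# $1 - registry host, $2 - license token.
render_registry_auth_toml() {
  local host="$1" token="$2" auth
  # Same value as AUTH_STRING; tr drops line wrapping that base64 adds to long input.
  auth=$(printf 'license-token:%s' "$token" | base64 | tr -d '\n')
  cat <<EOF
[plugins]
  [plugins."io.containerd.grpc.v1.cri"]
    [plugins."io.containerd.grpc.v1.cri".registry.configs]
      [plugins."io.containerd.grpc.v1.cri".registry.configs."${host}".auth]
        auth = "${auth}"
EOF
}

# Usage:
#   render_registry_auth_toml registry.deckhouse.io "$LICENSE_TOKEN"
```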

How to switch Deckhouse EE to CSE?

- The instructions assume the use of the public container registry address registry-cse.deckhouse.ru. If you use a different container registry address, change the commands accordingly or use the instructions for switching Deckhouse to a third-party registry.
- Deckhouse CSE does not support cloud clusters and some modules. For more information about supported modules, see the comparison of editions page.

- Migration to Deckhouse CSE is only possible from Deckhouse EE 1.58, 1.64 or 1.67.

- Current versions of Deckhouse CSE: 1.58.2 for release 1.58, 1.64.1 for release 1.64, and 1.67.0 for release 1.67. You will use these versions later to set the DECKHOUSE_VERSION variable.
- The transition is only supported between the same minor versions, for example, from Deckhouse EE 1.64 to Deckhouse CSE 1.64. The transition from EE 1.58 to CSE 1.67 requires intermediate migrations: first to EE 1.64, then to EE 1.67, and only then to CSE 1.67. Upgrading across several releases at once may render the cluster inoperable.

- Deckhouse CSE 1.58 and 1.64 support Kubernetes version 1.27, Deckhouse CSE 1.67 supports Kubernetes versions 1.27 and 1.29.

- When switching to Deckhouse CSE, temporary unavailability of cluster components is possible.
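The version constraints above can be encoded as a lookup so that DECKHOUSE_VERSION is never set for an unsupported source release. This is a sketch with a hypothetical function name; the mapping reflects the CSE versions listed above and will need updating when new CSE releases appear.

```shell
# Sketch based on the constraints listed above: map the Deckhouse EE version
# (MAJOR.MINOR.PATCH, optional "v") to the CSE version to use for DECKHOUSE_VERSION.
cse_version_for() {
  local v="${1#v}" minor
  # Keep MAJOR.MINOR: 1.64.5 -> 1.64; anything else yields an empty string.
  minor=$(printf '%s\n' "$v" | sed -n 's/^\([0-9][0-9]*\.[0-9][0-9]*\)\.[0-9][0-9]*$/\1/p')
  case "$minor" in
    1.58) echo v1.58.2 ;;
    1.64) echo v1.64.1 ;;
    1.67) echo v1.67.0 ;;
    *) echo "EE $1: migrate to EE 1.58, 1.64 or 1.67 first" >&2; return 1 ;;
  esac
}

# Usage:
#   DECKHOUSE_VERSION=$(cse_version_for v1.64.5)   # v1.64.1
```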

To switch a Deckhouse Enterprise Edition cluster to Certified Security Edition, follow these steps (all commands are executed on the cluster master node as a user with the kubectl context configured, or as the superuser):

How to switch using the registry module?

- Make sure that the cluster has been switched to use the registry module. If the module is not used, go to the instructions.
- Configure the cluster to use the desired Kubernetes version (information on versioning is provided in the How to switch Deckhouse EE to CSE section). To do this:
  - Run the command:

    d8 platform edit cluster-configuration

  - Change the kubernetesVersion parameter to the required value, for example, "1.27" (in quotation marks) for Kubernetes 1.27.
  - Save the changes. The cluster nodes will start updating sequentially.
  - Wait for the update to complete. You can track the update progress with the d8 k get no command. The update can be considered complete when the updated version appears in the VERSION column of each cluster node in the command output.

- Prepare a variable with the license token:

LICENSE_TOKEN=<PUT_YOUR_LICENSE_TOKEN_HERE>

- Run a temporary pod of the new Deckhouse edition to get the current digests and the list of modules:

d8 k create secret docker-registry cse-image-pull-secret \
  --docker-server=registry-cse.deckhouse.ru \
  --docker-username=license-token \
  --docker-password=${LICENSE_TOKEN}
DECKHOUSE_VERSION=$(d8 k -n d8-system get deploy deckhouse -ojson | jq -r '.spec.template.spec.containers[] | select(.name == "deckhouse") | .image' | awk -F: '{print $NF}')
d8 k run cse-image \
  --image=registry-cse.deckhouse.ru/deckhouse/cse/install:$DECKHOUSE_VERSION \
  --overrides="{\"spec\": {\"imagePullSecrets\":[{\"name\": \"cse-image-pull-secret\"}]}}" \
  --command sleep -- infinity

Once the pod has entered the Running status, run the following commands:

CSE_MODULES=$(d8 k exec cse-image -- ls -l deckhouse/modules/ | awk {'print $9'} |grep -oP "\d.*-\w*" | cut -c5-)
USED_MODULES=$(d8 k get modules -o custom-columns=NAME:.metadata.name,SOURCE:.properties.source,STATE:.properties.state,ENABLED:.status.phase | grep Embedded | grep -E 'Enabled|Ready' | awk {'print $1'})
MODULES_WILL_DISABLE=$(echo $USED_MODULES | tr ' ' '\n' | grep -Fxv -f <(echo $CSE_MODULES | tr ' ' '\n'))

- Make sure that the modules used in the cluster are supported in Deckhouse CSE. For example, Deckhouse CSE 1.58 and 1.64 do not have the cert-manager module. Therefore, before disabling the cert-manager module, you need to switch the HTTPS mode of some components (for example, user-authn or prometheus) to alternative operating options, or change the global parameter responsible for the HTTPS mode in the cluster.

You can display a list of modules that are not supported in Deckhouse CSE and will be disabled using the following command:

echo $MODULES_WILL_DISABLE

Check the list and make sure that the functionality of the specified modules is not used in the cluster and that you are ready to disable them.

Disable the modules not supported in Deckhouse CSE:

echo $MODULES_WILL_DISABLE | tr ' ' '\n' | awk {'print "d8 k -n d8-system exec deploy/deckhouse -- deckhouse-controller module disable",$1'} | bash

Deckhouse CSE does not support the earlyOOM feature. Disable it using the corresponding setting.

Wait for the Deckhouse pod to become Ready and for all queued tasks to complete:

d8 k -n d8-system exec -it svc/deckhouse-leader -c deckhouse -- deckhouse-controller queue list

Check that the disabled modules have moved to the Disabled state:

d8 k get modules

- Delete the created secret and pod:

d8 k delete pod/cse-image
d8 k delete secret/cse-image-pull-secret

- Switch to the new edition. To do this, specify the following parameters in the deckhouse ModuleConfig (for detailed settings, see the deckhouse module configuration):

---
# Example for Direct mode.
apiVersion: deckhouse.io/v1alpha1
kind: ModuleConfig
metadata:
  name: deckhouse
spec:
  version: 1
  enabled: true
  settings:
    registry:
      mode: Direct
      direct:
        # Relax mode is used to check if the current version of Deckhouse is in the specified registry.
        # To switch between editions, you must use this registry check mode.
        checkMode: Relax
        imagesRepo: registry-cse.deckhouse.ru/deckhouse/cse
        scheme: HTTPS
        # Specify your <LICENSE_TOKEN> parameter.
        license: <LICENSE_TOKEN>
---
# Example for Unmanaged mode.
apiVersion: deckhouse.io/v1alpha1
kind: ModuleConfig
metadata:
  name: deckhouse
spec:
  version: 1
  enabled: true
  settings:
    registry:
      mode: Unmanaged
      unmanaged:
        # Relax mode is used to check if the current version of Deckhouse is in the specified registry.
        # To switch between editions, you must use this check mode.
        checkMode: Relax
        imagesRepo: registry-cse.deckhouse.ru/deckhouse/cse
        scheme: HTTPS
        # Specify your <LICENSE_TOKEN> parameter.
        license: <LICENSE_TOKEN>

- Wait for the registry to switch. To check whether the switch has been completed, use the instructions.

Example output:

conditions:
- lastTransitionTime: "..."
  message: |-
    Mode: Relax
    registry-cse.deckhouse.ru: all 1 items are checked
  reason: Ready
  status: "True"
  type: RegistryContainsRequiredImages
# ...
- lastTransitionTime: "..."
  message: ""
  reason: ""
  status: "True"
  type: Ready

- After switching, remove the checkMode: Relax parameter from the deckhouse ModuleConfig to enable the default check. Removing it will start checking for critical components in the registry.

- Wait until the check is completed. The registry mode switching status can be obtained using the instructions.

Example output:

conditions:
- lastTransitionTime: "..."
  message: |-
    Mode: Default
    registry-cse.deckhouse.ru: all 155 items are checked
  reason: Ready
  status: "True"
  type: RegistryContainsRequiredImages
# ...
- lastTransitionTime: "..."
  message: ""
  reason: ""
  status: "True"
  type: Ready

- Check if there are any pods left in the cluster with the registry address for Deckhouse EE.

For Unmanaged mode:

d8 k get pods -A -o json | jq -r '.items[] | select(.spec.containers[] | select(.image | contains("deckhouse.ru/deckhouse/ee"))) | .metadata.namespace + "\t" + .metadata.name' | sort | uniq

For other modes using a fixed address (this check does not take into account external modules):

# Get a list of current digests from the images_digests.json file inside Deckhouse.
IMAGES_DIGESTS=$(d8 k -n d8-system exec -i svc/deckhouse-leader -c deckhouse -- cat /deckhouse/modules/images_digests.json | jq -r '.[][]' | sort -u)
# Check if there are Pods using Deckhouse images at `registry.d8-system.svc:5001/system/deckhouse`
# with a digest that is not in the list of current digests from IMAGES_DIGESTS.
d8 k get pods -A -o json | jq -r --argjson digests "$(printf '%s\n' $IMAGES_DIGESTS | jq -R . | jq -s .)" '
  .items[]
  | {name: .metadata.name, namespace: .metadata.namespace, containers: .spec.containers}
  | select(.containers != null)
  | select(
      .containers[]
      | select(.image | test("registry.d8-system.svc:5001/system/deckhouse") and test("@sha256:"))
      | .image as $img
      | ($img | split("@") | last) as $digest
      | ($digest | IN($digests[]) | not)
    )
  | .namespace + "\t" + .name
' | sort -u

If the output contains chrony module pods, re-enable this module (in Deckhouse CSE, this module is disabled by default):

d8 k -n d8-system exec deploy/deckhouse -- deckhouse-controller module enable chrony
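The digest check above can also be written with awk instead of the nested jq filter, which can be easier to adapt. The function below is a sketch with a hypothetical name: it takes a file with one sha256:... digest per line (for example, the IMAGES_DIGESTS list saved to a file) and reads "namespace<TAB>pod<TAB>image" lines on standard input, one per container. Like the jq version, it only looks at images pulled by digest from registry.d8-system.svc:5001/system/deckhouse.

```shell
# Sketch: report Pods whose Deckhouse image (pulled from the in-cluster
# registry by digest) is not in the list of current digests.
# $1 - file with one "sha256:..." digest per line
# stdin - "namespace<TAB>pod<TAB>image" lines, one per container
stale_deckhouse_pods() {
  awk -F'\t' -v prefix="registry.d8-system.svc:5001/system/deckhouse" '
    FILENAME == ARGV[1] { valid[$0] = 1; next }   # first input: the digest list
    index($3, prefix) == 1 && index($3, "@sha256:") {
      digest = substr($3, index($3, "@") + 1)     # part after "@"
      if (!(digest in valid)) print $1 "\t" $2
    }
  ' "$1" - | sort -u
}

# Usage:
#   printf '%s\n' $IMAGES_DIGESTS > /tmp/digests.txt
#   d8 k get pods -A -o json \
#     | jq -r '.items[] | . as $p | .spec.containers[] | [$p.metadata.namespace, $p.metadata.name, .image] | @tsv' \
#     | stale_deckhouse_pods /tmp/digests.txt
```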

How to switch without using the registry module?

- Before you begin, disable the registry module using the instructions.
- Configure the cluster to use the required Kubernetes version (information on versioning is provided in the instruction). To do this:
  - Run the command:

    d8 platform edit cluster-configuration

  - Change the kubernetesVersion parameter to the desired value, for example "1.27" (in quotes) for Kubernetes 1.27.
  - Save the changes. The cluster nodes will begin to be updated sequentially.
  - Wait for the update to complete. You can track the update progress using the d8 k get no command. The update is complete when the updated version appears in the VERSION column of each cluster node in the command output.

- Prepare variables with the license token and create a NodeGroupConfiguration for temporary authorization in registry-cse.deckhouse.ru:

Before creating the resource, read the section How to add a configuration for an additional registry.

LICENSE_TOKEN=<PUT_YOUR_LICENSE_TOKEN_HERE>
AUTH_STRING="$(echo -n license-token:${LICENSE_TOKEN} | base64 )"
d8 k apply -f - <<EOF
---
apiVersion: deckhouse.io/v1alpha1
kind: NodeGroupConfiguration
metadata:
  name: containerd-cse-config.sh
spec:
  nodeGroups:
  - '*'
  bundles:
  - '*'
  weight: 30
  content: |
    _on_containerd_config_changed() {
      bb-flag-set containerd-need-restart
    }
    bb-event-on 'containerd-config-file-changed' '_on_containerd_config_changed'

    mkdir -p /etc/containerd/conf.d
    bb-sync-file /etc/containerd/conf.d/cse-registry.toml - containerd-config-file-changed << "EOF_TOML"
    [plugins]
      [plugins."io.containerd.grpc.v1.cri"]
        [plugins."io.containerd.grpc.v1.cri".registry]
          [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
            [plugins."io.containerd.grpc.v1.cri".registry.mirrors."registry-cse.deckhouse.ru"]
              endpoint = ["https://registry-cse.deckhouse.ru"]
          [plugins."io.containerd.grpc.v1.cri".registry.configs]
            [plugins."io.containerd.grpc.v1.cri".registry.configs."registry-cse.deckhouse.ru".auth]
              auth = "$AUTH_STRING"
    EOF_TOML
EOF

Wait for synchronization to complete and for the /etc/containerd/conf.d/cse-registry.toml file to appear on the nodes.

The synchronization status can be tracked by the UPTODATE value (the displayed number of nodes in this status should match the total number of nodes (NODES) in the group):

d8 k get ng -o custom-columns=NAME:.metadata.name,NODES:.status.nodes,READY:.status.ready,UPTODATE:.status.upToDate -w

Example output:

NAME     NODES   READY   UPTODATE
master   1       1       1
worker   2       2       2

The bashible systemd service log should show the message "Configuration is in sync, nothing to do" when you run the following command:

journalctl -u bashible -n 5

Example output:

Aug 21 11:04:28 master-ee-to-cse-0 bashible.sh[53407]: Configuration is in sync, nothing to do.
Aug 21 11:04:28 master-ee-to-cse-0 bashible.sh[53407]: Annotate node master-ee-to-cse-0 with annotation node.deckhouse.io/configuration-checksum=9cbe6db6c91574b8b732108a654c99423733b20f04848d0b4e1e2dadb231206a
Aug 21 11:04:29 master-ee-to-cse-0 bashible.sh[53407]: Successful annotate node master-ee-to-cse-0 with annotation node.deckhouse.io/configuration-checksum=9cbe6db6c91574b8b732108a654c99423733b20f04848d0b4e1e2dadb231206a
Aug 21 11:04:29 master-ee-to-cse-0 systemd[1]: bashible.service: Deactivated successfully.

Run the following commands to start a temporary Deckhouse CSE pod to get up-to-date digests and a list of modules:

DECKHOUSE_VERSION=v<DECKHOUSE_VERSION_CSE>  # For example, DECKHOUSE_VERSION=v1.58.2.
d8 k run cse-image --image=registry-cse.deckhouse.ru/deckhouse/cse/install:$DECKHOUSE_VERSION --command sleep -- infinity

Once the pod has entered the Running status, run the following commands:

CSE_SANDBOX_IMAGE=$(d8 k exec cse-image -- cat deckhouse/candi/images_digests.json | grep pause | grep -oE 'sha256:\w*')
CSE_K8S_API_PROXY=$(d8 k exec cse-image -- cat deckhouse/candi/images_digests.json | grep kubernetesApiProxy | grep -oE 'sha256:\w*')
CSE_MODULES=$(d8 k exec cse-image -- ls -l deckhouse/modules/ | awk '{print $9}' | grep -oP "\d.*-\w*" | cut -c5-)
USED_MODULES=$(d8 k get modules -o custom-columns=NAME:.metadata.name,SOURCE:.properties.source,STATE:.properties.state,ENABLED:.status.phase | grep Embedded | grep -E 'Enabled|Ready' | awk '{print $1}')
MODULES_WILL_DISABLE=$(echo $USED_MODULES | tr ' ' '\n' | grep -Fxv -f <(echo $CSE_MODULES | tr ' ' '\n'))
CSE_DECKHOUSE_KUBE_RBAC_PROXY=$(d8 k exec cse-image -- cat deckhouse/candi/images_digests.json | jq -r ".common.kubeRbacProxy")

An additional command that is only needed when switching to Deckhouse CSE version 1.64:

CSE_DECKHOUSE_INIT_CONTAINER=$(d8 k exec cse-image -- cat deckhouse/candi/images_digests.json | jq -r ".common.init")
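Before you substitute these values into manifests, it can help to confirm that each one actually holds a digest. jq -r prints null for a missing key, and grep -o prints nothing when there is no match. The sketch below is a hypothetical pre-flight check, not part of the official procedure. It assumes digests have the form sha256: followed by 64 lowercase hexadecimal characters.

```shell
# Hypothetical pre-flight check (not part of the official procedure).
# is_sha256_digest: true if $1 is "sha256:" followed by exactly 64 hex digits.
is_sha256_digest() {
  case $1 in
    sha256:*) ;;
    *) return 1 ;;
  esac
  hex=${1#sha256:}
  [ "${#hex}" -eq 64 ] || return 1
  case $hex in
    *[!0-9a-f]*) return 1 ;;   # a character outside 0-9a-f, such as a newline
  esac
  return 0
}

# require_digest: checks each named variable and reports the ones that do not
# hold a digest (for example, an empty value or "null" from jq -r).
require_digest() {
  rc=0
  for name in "$@"; do
    eval "value=\${$name-}"
    if ! is_sha256_digest "$value"; then
      echo "$name does not hold a sha256 digest: '$value'" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
# require_digest CSE_SANDBOX_IMAGE CSE_K8S_API_PROXY CSE_DECKHOUSE_KUBE_RBAC_PROXY
# require_digest CSE_DECKHOUSE_INIT_CONTAINER   # only when this variable is used
```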

Make sure that the modules used in the cluster are supported by Deckhouse CSE. For example, Deckhouse CSE 1.58 and 1.64 do not have the cert-manager module. Therefore, before disabling the cert-manager module, switch the HTTPS mode of some components (for example, user-authn or prometheus) to an alternative mode, or change the global parameter responsible for the HTTPS mode in the cluster.

You can display the list of modules that are not supported in Deckhouse CSE and will be disabled using the following command:

echo $MODULES_WILL_DISABLE

Check the list and make sure that the functionality of these modules is not used in the cluster and that you are ready to disable them.
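The set difference stored in MODULES_WILL_DISABLE relies on bash process substitution (<(...)). Below is a sketch of a POSIX sh equivalent. The function name modules_to_disable is hypothetical, and the sketch assumes module names contain no whitespace or glob characters.

```shell
# Hypothetical POSIX sh version of the MODULES_WILL_DISABLE computation:
# prints every module from $1 that does not appear in $2. Both arguments are
# whitespace- or newline-separated lists of module names.
modules_to_disable() {
  # Normalize the supported list to " a b c " so that whole names are matched.
  _supported=" $(printf '%s ' $2)"
  for m in $1; do
    case "$_supported" in
      *" $m "*) ;;                # available in Deckhouse CSE: keep enabled
      *) printf '%s\n' "$m" ;;    # missing from Deckhouse CSE: will be disabled
    esac
  done
}

# Usage:
# MODULES_WILL_DISABLE=$(modules_to_disable "$USED_MODULES" "$CSE_MODULES")
```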

Disable modules not supported in Deckhouse CSE:

echo $MODULES_WILL_DISABLE | tr ' ' '\n' | awk '{print "d8 k -n d8-system exec deploy/deckhouse -- deckhouse-controller module disable",$1}' | bash

Deckhouse CSE does not support the earlyOOM feature. Disable it using the corresponding setting.

Wait until the Deckhouse pod becomes Ready and all queued tasks are completed:

d8 k -n d8-system exec -it svc/deckhouse-leader -c deckhouse -- deckhouse-controller queue list

Check that the disabled modules have moved to the Disabled state:

d8 k get modules
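Several steps in this procedure ask you to wait until some condition holds. The following is a sketch of a generic polling helper that saves re-running checks by hand. It assumes the condition can be expressed as a command that exits 0 once the condition is satisfied. The name retry_until is hypothetical.

```shell
# Hypothetical polling helper: runs the given command until it exits 0.
# Arguments: maximum number of attempts, delay in seconds, then the command.
retry_until() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while ! "$@"; do
    if [ "$i" -ge "$attempts" ]; then
      echo "gave up after $attempts attempts: $*" >&2
      return 1
    fi
    i=$((i + 1))
    sleep "$delay"
  done
}

# Usage (check_something is any command or shell function that exits 0 when done):
# retry_until 60 10 check_something
```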

Create a NodeGroupConfiguration:

d8 k apply -f - <<EOF
apiVersion: deckhouse.io/v1alpha1
kind: NodeGroupConfiguration
metadata:
  name: cse-set-sha-images.sh
spec:
  nodeGroups:
  - '*'
  bundles:
  - '*'
  weight: 50
  content: |
    _on_containerd_config_changed() {
      bb-flag-set containerd-need-restart
    }
    bb-event-on 'containerd-config-file-changed' '_on_containerd_config_changed'
    bb-sync-file /etc/containerd/conf.d/cse-sandbox.toml - containerd-config-file-changed << "EOF_TOML"
    [plugins]
      [plugins."io.containerd.grpc.v1.cri"]
        sandbox_image = "registry-cse.deckhouse.ru/deckhouse/cse@$CSE_SANDBOX_IMAGE"
    EOF_TOML
    sed -i 's|image: .*|image: registry-cse.deckhouse.ru/deckhouse/cse@$CSE_K8S_API_PROXY|' /var/lib/bashible/bundle_steps/051_pull_and_configure_kubernetes_api_proxy.sh
    sed -i 's|crictl pull .*|crictl pull registry-cse.deckhouse.ru/deckhouse/cse@$CSE_K8S_API_PROXY|' /var/lib/bashible/bundle_steps/051_pull_and_configure_kubernetes_api_proxy.sh
EOF

Wait for bashible to complete synchronization on all nodes.

The synchronization status can be tracked by the UPTODATE status value (the displayed number of nodes in this status should match the total number of nodes (NODES) in the group):

d8 k get ng -o custom-columns=NAME:.metadata.name,NODES:.status.nodes,READY:.status.ready,UPTODATE:.status.upToDate -w

The bashible systemd service log on the nodes should show the message "Configuration is in sync, nothing to do" as a result of running the following command:

journalctl -u bashible -n 5

Example output:

Aug 21 11:04:28 master-ee-to-cse-0 bashible.sh[53407]: Configuration is in sync, nothing to do.
Aug 21 11:04:28 master-ee-to-cse-0 bashible.sh[53407]: Annotate node master-ee-to-cse-0 with annotation node.deckhouse.io/configuration-checksum=9cbe6db6c91574b8b732108a654c99423733b20f04848d0b4e1e2dadb231206a
Aug 21 11:04:29 master-ee-to-cse-0 bashible.sh[53407]: Successful annotate node master-ee-to-cse-0 with annotation node.deckhouse.io/configuration-checksum=9cbe6db6c91574b8b732108a654c99423733b20f04848d0b4e1e2dadb231206a
Aug 21 11:04:29 master-ee-to-cse-0 systemd[1]: bashible.service: Deactivated successfully.
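In the cse-set-sha-images.sh NodeGroupConfiguration, $CSE_SANDBOX_IMAGE and $CSE_K8S_API_PROXY are expanded on the machine that runs d8 k apply, because the outer heredoc (<<EOF) is unquoted. The nodes therefore receive literal digests. The two sed expressions replace everything after "image: " and after "crictl pull " on each matching line, so any other line containing "image: " in that step file would be rewritten as well. The sketch below replays the same expressions on a scratch file so their effect can be checked before rollout. The function name is hypothetical, and sed -i without a suffix assumes GNU sed.

```shell
# Illustration only: applies the same two sed expressions as the
# cse-set-sha-images.sh NodeGroupConfiguration to a file of your choice.
# Assumes GNU sed (-i without a backup suffix).
rewrite_api_proxy_image() {
  file=$1
  digest=$2
  sed -i "s|image: .*|image: registry-cse.deckhouse.ru/deckhouse/cse@$digest|" "$file"
  sed -i "s|crictl pull .*|crictl pull registry-cse.deckhouse.ru/deckhouse/cse@$digest|" "$file"
}

# Usage on a node, against a copy rather than the real step file:
# cp /var/lib/bashible/bundle_steps/051_pull_and_configure_kubernetes_api_proxy.sh /tmp/051-preview.sh
# rewrite_api_proxy_image /tmp/051-preview.sh "$CSE_K8S_API_PROXY" && grep -nE 'image: |crictl pull ' /tmp/051-preview.sh
```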

Update the Deckhouse CSE registry access secret by running the following command:

d8 k -n d8-system create secret generic deckhouse-registry \
  --from-literal=".dockerconfigjson"="{\"auths\": { \"registry-cse.deckhouse.ru\": { \"username\": \"license-token\", \"password\": \"$LICENSE_TOKEN\", \"auth\": \"$AUTH_STRING\" }}}" \
  --from-literal="address"=registry-cse.deckhouse.ru \
  --from-literal="path"=/deckhouse/cse \
  --from-literal="scheme"=https \
  --type=kubernetes.io/dockerconfigjson \
  --dry-run='client' \
  -o yaml | d8 k -n d8-system exec -i svc/deckhouse-leader -c deckhouse -- d8 k replace -f -
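The .dockerconfigjson value above is assembled with hand-escaped quotes. AUTH_STRING comes from plain base64, which in GNU coreutils wraps its output every 76 characters. The hypothetical helpers below sketch a less error-prone way to build both values. Because the JSON is produced with printf rather than a JSON encoder, they assume the token contains no double quotes or backslashes.

```shell
# Hypothetical helpers (illustrative names, not part of d8).
# make_auth_string: base64 of "license-token:<token>" on a single line;
# tr removes the line breaks GNU base64 inserts every 76 characters.
make_auth_string() {
  printf '%s' "license-token:$1" | base64 | tr -d '\n'
}

# make_dockerconfigjson: prints the .dockerconfigjson value for one registry.
# $1 = registry host, $2 = license token, $3 = auth string.
# Assumes none of the values contain double quotes or backslashes.
make_dockerconfigjson() {
  printf '{"auths": {"%s": {"username": "license-token", "password": "%s", "auth": "%s"}}}' \
    "$1" "$2" "$3"
}

# Usage (remaining flags as in the command above):
# AUTH_STRING=$(make_auth_string "$LICENSE_TOKEN")
# d8 k -n d8-system create secret generic deckhouse-registry \
#   --from-literal=".dockerconfigjson=$(make_dockerconfigjson registry-cse.deckhouse.ru "$LICENSE_TOKEN" "$AUTH_STRING")" \
#   --from-literal="address"=registry-cse.deckhouse.ru
```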

Change the Deckhouse image to the Deckhouse CSE image:

Command for Deckhouse CSE version 1.58:

d8 k -n d8-system set image deployment/deckhouse \
  kube-rbac-proxy=registry-cse.deckhouse.ru/deckhouse/cse@$CSE_DECKHOUSE_KUBE_RBAC_PROXY \
  deckhouse=registry-cse.deckhouse.ru/deckhouse/cse:$DECKHOUSE_VERSION

Command for Deckhouse CSE versions 1.64 and 1.67:

d8 k -n d8-system set image deployment/deckhouse \
  init-downloaded-modules=registry-cse.deckhouse.ru/deckhouse/cse@$CSE_DECKHOUSE_INIT_CONTAINER \
  kube-rbac-proxy=registry-cse.deckhouse.ru/deckhouse/cse@$CSE_DECKHOUSE_KUBE_RBAC_PROXY \
  deckhouse=registry-cse.deckhouse.ru/deckhouse/cse:$DECKHOUSE_VERSION

Wait for the Deckhouse pod to become Ready and for all tasks in the queue to complete. If an ImagePullBackOff error occurs during the process, wait for the pod to restart automatically.

View the Deckhouse pod status:

d8 k -n d8-system get po -l app=deckhouse

Check the status of the Deckhouse queue:

d8 k -n d8-system exec deploy/deckhouse -c deckhouse -- deckhouse-controller queue list
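You can also script the pod status check. The sketch below assumes the default table printed by d8 k -n d8-system get po -l app=deckhouse (NAME, READY, STATUS, RESTARTS, AGE). It succeeds only if every listed pod is Running with all containers ready. The function name deckhouse_pods_ready is hypothetical. It does not inspect the queue, which still has to be checked separately.

```shell
# Hypothetical check: reads the table printed by
#   d8 k -n d8-system get po -l app=deckhouse
# and succeeds only if every pod is Running with all containers ready (READY n/n).
deckhouse_pods_ready() {
  awk 'NR > 1 {
         seen = 1
         split($2, ready, "/")
         if ($3 != "Running" || ready[1] != ready[2]) bad = 1
       }
       END { if (seen && !bad) exit 0; exit 1 }'
}

# Usage:
# d8 k -n d8-system get po -l app=deckhouse | deckhouse_pods_ready && echo "Deckhouse pod is Ready"
```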

Check if there are any pods left in the cluster with the registry address for Deckhouse EE:

d8 k get pods -A -o json | jq -r '.items[] | select(.spec.containers[] | select(.image | contains("deckhouse.ru/deckhouse/ee"))) | .metadata.namespace + "\t" + .metadata.name' | sort | uniq

If the output contains chrony module pods, re-enable this module (in Deckhouse CSE this module is disabled by default):

d8 k -n d8-system exec deploy/deckhouse -- deckhouse-controller module enable chrony
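If jq is not available, you can build a similar filter on kubectl custom-columns output. This assumes that a [*] path prints all matching values separated by commas. The sketch below is hypothetical: the function name and column names are illustrative. Like the jq query, it looks only at regular containers, not init containers.

```shell
# Hypothetical jq-free filter. Expects the output of:
#   d8 k get pods -A -o 'custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,IMAGES:.spec.containers[*].image'
# and prints "namespace<TAB>name" for every pod that still runs a Deckhouse EE image.
pods_with_ee_images() {
  awk 'NR > 1 && index($3, "deckhouse.ru/deckhouse/ee") { print $1 "\t" $2 }' | sort -u
}

# Usage:
# d8 k get pods -A -o 'custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,IMAGES:.spec.containers[*].image' \
#   | pods_with_ee_images
```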

Clean up the temporary files, NodeGroupConfiguration resources, and variables:

rm -f /tmp/cse-deckhouse-registry.yaml
d8 k delete ngc containerd-cse-config.sh cse-set-sha-images.sh
d8 k delete pod cse-image
unset LICENSE_TOKEN AUTH_STRING DECKHOUSE_VERSION CSE_SANDBOX_IMAGE CSE_K8S_API_PROXY CSE_MODULES USED_MODULES MODULES_WILL_DISABLE CSE_DECKHOUSE_KUBE_RBAC_PROXY CSE_DECKHOUSE_INIT_CONTAINER
d8 k apply -f - <<EOF
apiVersion: deckhouse.io/v1alpha1
kind: NodeGroupConfiguration
metadata:
  name: del-temp-config.sh
spec:
  nodeGroups:
  - '*'
  bundles:
  - '*'
  weight: 90
  content: |
    if [ -f /etc/containerd/conf.d/cse-registry.toml ]; then
      rm -f /etc/containerd/conf.d/cse-registry.toml
    fi
    if [ -f /etc/containerd/conf.d/cse-sandbox.toml ]; then
      rm -f /etc/containerd/conf.d/cse-sandbox.toml
    fi
EOF

After synchronization (the synchronization status on the nodes can be tracked by the UPTODATE value of the NodeGroup), delete the created NodeGroupConfiguration resource:

d8 k delete ngc del-temp-config.sh
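To confirm on a node that the cleanup NodeGroupConfiguration has finished, you can run a small check like the following there. It is a hypothetical sketch that only looks for the two file names used in this procedure. The optional directory argument lets you try it on any directory.

```shell
# Hypothetical node-side check: prints any temporary CSE containerd files that
# are still present in the given directory (default /etc/containerd/conf.d).
leftover_cse_configs() {
  dir=${1:-/etc/containerd/conf.d}
  for f in cse-registry.toml cse-sandbox.toml; do
    if [ -e "$dir/$f" ]; then
      printf '%s\n' "$dir/$f"
    fi
  done
}

# Usage on a node:
# [ -z "$(leftover_cse_configs)" ] && echo "temporary CSE files are gone"
```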

How do I access the Deckhouse controller in a multi-master cluster?

In clusters with multiple master nodes, Deckhouse runs in high availability mode (as several instances). To access the active (leader) Deckhouse controller, run commands through the deckhouse-leader service. For example, to run the deckhouse-controller queue list command:

d8 k -n d8-system exec -it svc/deckhouse-leader -c deckhouse -- deckhouse-controller queue list
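When you need several deckhouse-controller commands, a shell wrapper saves retyping the exec prefix. The function below is a hypothetical convenience, not part of d8. It omits -it so that it also works in scripts without a terminal, which is fine for non-interactive subcommands such as queue list.

```shell
# Hypothetical convenience wrapper: runs a deckhouse-controller subcommand in
# the leader instance through the deckhouse-leader Service.
deckhouse_leader_ctl() {
  d8 k -n d8-system exec svc/deckhouse-leader -c deckhouse -- deckhouse-controller "$@"
}

# Usage:
# deckhouse_leader_ctl queue list
```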

How do I upgrade the Kubernetes version in a cluster?

To upgrade the Kubernetes version in a cluster, change the kubernetesVersion parameter in the ClusterConfiguration structure as follows:

- Run the command:

  d8 platform edit cluster-configuration

- Change the kubernetesVersion field.

- Save the changes. Cluster nodes will start updating sequentially.

- Wait for the update to finish. You can track the progress of the update using the d8 k get no command. The update is completed when the new version appears in the VERSION column of the command's output for each cluster node.
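You can script the final check against the default d8 k get no table, where VERSION is the fifth column. This is a minimal sketch with a hypothetical function name. It assumes kubernetesVersion was set to a major.minor value such as 1.29. It treats a node as updated when its VERSION starts with that prefix (for example, v1.29.14).

```shell
# Hypothetical check: reads the `d8 k get no` table on stdin and succeeds when
# the VERSION column (5th) of every node starts with "v<major.minor>.".
all_nodes_at_version() {
  awk -v want="v$1." 'NR > 1 {
         seen = 1
         if (index($5, want) != 1) bad = 1
       }
       END { if (seen && !bad) exit 0; exit 1 }'
}

# Usage:
# d8 k get no | all_nodes_at_version 1.29 && echo "all nodes run Kubernetes 1.29"
```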

How do I run Deckhouse on a particular node?

Set the nodeSelector parameter of the deckhouse module and avoid setting tolerations. The necessary values will be assigned to the tolerations parameter automatically.

Use only nodes with the CloudStatic or Static type to run Deckhouse. Also, avoid using a NodeGroup containing only one node to run Deckhouse.

Here is an example of the module configuration:

apiVersion: deckhouse.io/v1alpha1
kind: ModuleConfig
metadata:
  name: deckhouse
spec:
  version: 1
  settings:
    nodeSelector:
      node-role.deckhouse.io/deckhouse: ""

How do I force IPv6 to be disabled on Deckhouse cluster nodes?

Internal communication between Deckhouse cluster components is performed via the IPv4 protocol. However, at the operating system level of the cluster nodes, IPv6 is usually enabled by default. As a result, IPv6 addresses are automatically assigned to all network interfaces, including Pod interfaces. This produces unwanted network traffic (for example, redundant DNS queries for AAAA records), which can affect performance and make debugging network communications more difficult.

To correctly disable IPv6 at the node level in a Deckhouse-managed cluster, it is sufficient to set the necessary parameters via the NodeGroupConfiguration resource:

apiVersion: deckhouse.io/v1alpha1
kind: NodeGroupConfiguration
metadata:
  name: disable-ipv6.sh
spec:
  nodeGroups:
  - '*'
  bundles:
  - '*'
  weight: 50
  content: |
    GRUB_FILE_PATH="/etc/default/grub"
    if ! grep -q "ipv6.disable" "$GRUB_FILE_PATH"; then
      sed -E -e 's/^(GRUB_CMDLINE_LINUX_DEFAULT="[^"]*)"/\1 ipv6.disable=1"/' -i "$GRUB_FILE_PATH"
      update-grub
      bb-flag-set reboot
    fi

After applying the resource, the GRUB settings will be updated and the cluster nodes will begin a sequential reboot to apply the changes.
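You can try the GRUB edit on a copy of the file before rolling it out. The function below is a hypothetical sketch that repeats the grep guard and sed expression from the NodeGroupConfiguration, without update-grub and the reboot flag. The grep guard keeps repeated bashible runs from appending the parameter twice. After a node has rebooted, running grep -o 'ipv6.disable=1' /proc/cmdline on that node shows whether the parameter took effect.

```shell
# Illustration of the GRUB edit from the disable-ipv6.sh NodeGroupConfiguration,
# minus update-grub and the reboot flag. Assumes GNU sed (-i without a suffix).
add_ipv6_disable() {
  grub_file=$1
  if ! grep -q "ipv6.disable" "$grub_file"; then
    sed -E -e 's/^(GRUB_CMDLINE_LINUX_DEFAULT="[^"]*)"/\1 ipv6.disable=1"/' -i "$grub_file"
  fi
}

# Usage on a copy:
# cp /etc/default/grub /tmp/grub.preview && add_ipv6_disable /tmp/grub.preview && grep GRUB_CMDLINE_LINUX_DEFAULT /tmp/grub.preview
```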

How do I switch the container runtime on nodes to containerd v2?

Deckhouse Kubernetes Platform automatically checks cluster nodes for compliance with the conditions for migration to containerd v2:

- Nodes meet the requirements described in general cluster parameters.

- The server has no custom configurations in /etc/containerd/conf.d (example custom configuration).

If any of the requirements described in the general cluster parameters are not met, Deckhouse Kubernetes Platform adds the label node.deckhouse.io/containerd-v2-unsupported to the node. If the node has custom configurations in /etc/containerd/conf.d, the label node.deckhouse.io/containerd-config=custom is added to it.

If one of these labels is present, changing the spec.cri.type parameter for the node group will be unavailable. Nodes that do not meet the migration conditions can be viewed using the following commands:

kubectl get node -l node.deckhouse.io/containerd-v2-unsupported

kubectl get node -l node.deckhouse.io/containerd-config=custom

Additionally, an administrator can verify whether a specific node meets the requirements using the following commands:

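uname -r | cut -d- -f1                              # kernel version
stat -f -c %T /sys/fs/cgroup                        # cgroup filesystem type (cgroup2fs means cgroup v2)
systemctl --version | awk 'NR==1{print $2}'         # systemd version
modprobe -qn erofs && echo "TRUE" || echo "FALSE"   # whether the erofs kernel module is available
ls -l /etc/containerd/conf.d                        # custom containerd configurations, if any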
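You can compare the printed versions with the required minimums using a version comparison. The helper below is a sketch that relies on GNU sort -V. The minimum values are deliberately left as placeholders, because they are defined in the general cluster parameters rather than here.

```shell
# Hypothetical helper: true if version $1 is greater than or equal to version $2.
# Relies on GNU sort -V (version sort).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage with placeholder minimums (take the real values from the general cluster parameters):
# version_ge "$(uname -r | cut -d- -f1)" "<MIN_KERNEL_VERSION>" && echo "kernel version OK"
# version_ge "$(systemctl --version | awk 'NR==1{print $2}')" "<MIN_SYSTEMD_VERSION>" && echo "systemd version OK"
# [ "$(stat -f -c %T /sys/fs/cgroup)" = cgroup2fs ] && echo "cgroup v2 in use"
```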

You can migrate to containerd v2 in one of the following ways:

- By specifying the value ContainerdV2 for the defaultCRI parameter in the general cluster parameters. In this case, the container runtime will be changed in all node groups except those where it is explicitly set using the spec.cri.type parameter.

- By specifying the value ContainerdV2 for the spec.cri.type parameter of a specific node group.

After the parameter value is changed to ContainerdV2, Deckhouse Kubernetes Platform begins updating the nodes one by one. Updating a node disrupts the workload running on it (disruptive update). The node update process is governed by the node group's settings for applying disruptive updates (spec.disruptions.approvalMode).

During migration, the /var/lib/containerd directory is cleared, so all pod images are downloaded again, and the node is rebooted.