Available in: EE

This module provides a CSI driver that manages volumes backed by S3 storage. It uses GeeseFS, a FUSE file system based on S3.

The module lets you create a StorageClass and a Secret in Kubernetes by creating an S3StorageClass custom resource.

System requirements

- Kubernetes 1.17+

- Kubernetes must allow privileged containers

- Deployed and configured S3-storage with access keys.

- Enough memory on nodes. GeeseFS uses cache for uploading/downloading files from and to S3 storage. Its size is defined by

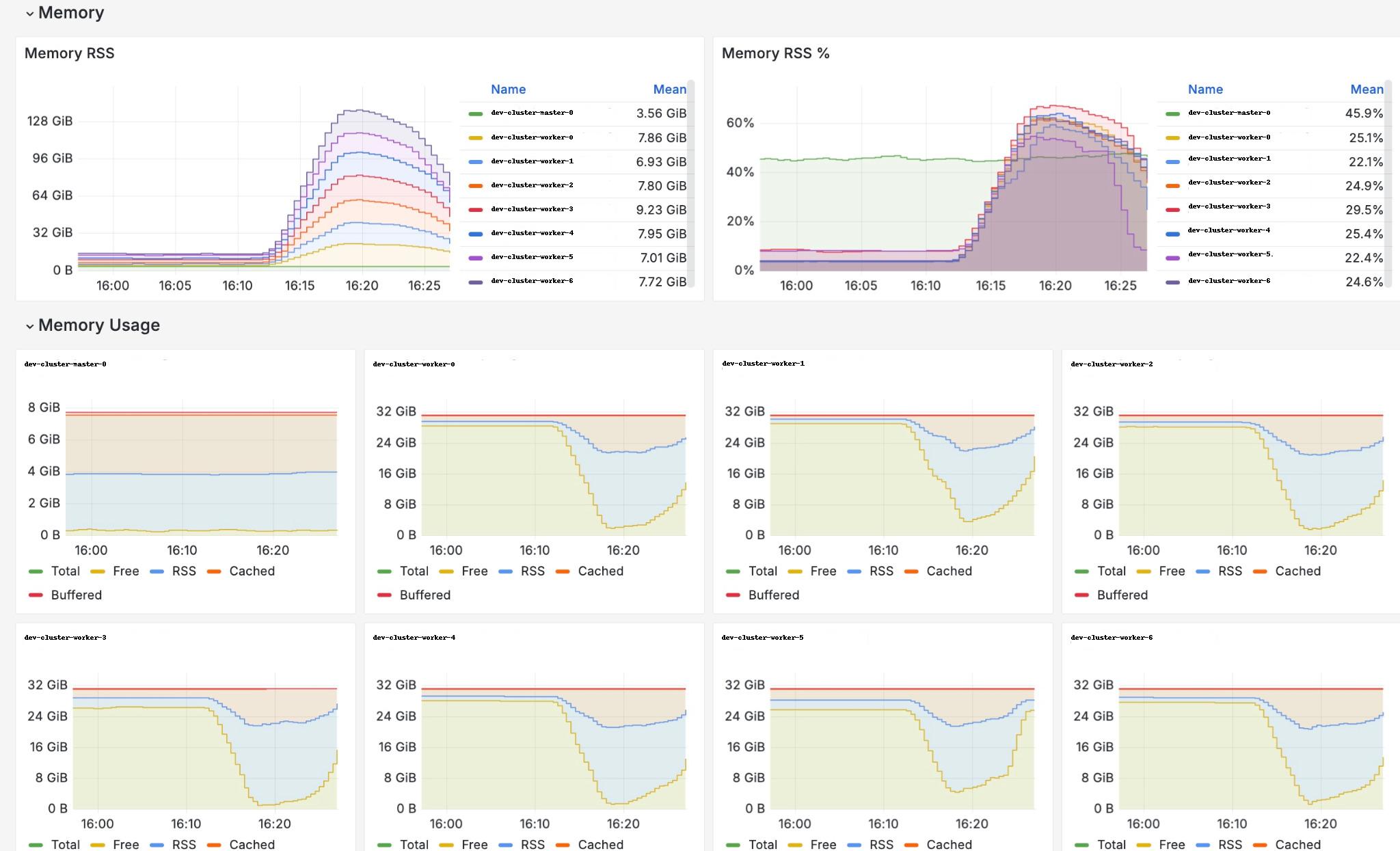

maxCacheSizeparameter inS3StorageClass. Here are stress test results in the following conditions: 7 nodes, 600 pods and PVCs, maxCacheSize 500 megabytes, each pod creates 300MB file, reads it and then gets completed:
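For capacity planning, a rough upper bound on cache memory per node can be estimated from the stress test parameters. This is a back-of-the-envelope sketch, not a measured result: it assumes that every mounted volume keeps its own cache of up to maxCacheSize and that pods are spread evenly across nodes. Neither assumption is stated by the module documentation.

```shell
# Stress test parameters quoted above.
nodes=7
pods=600
max_cache_mb=500

# Assumption: pods are spread evenly, so round up to get pods per node.
pods_per_node=$(( (pods + nodes - 1) / nodes ))

# Assumption: each mounted volume may fill its own cache completely.
worst_case_mb=$(( pods_per_node * max_cache_mb ))

echo "pods per node: ${pods_per_node}"
echo "worst-case cache per node: ${worst_case_mb} MB"
```

Real usage is normally lower, because not every cache is full at the same time; the figure is only a ceiling under the stated assumptions.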

Quickstart guide

Note that all commands must be run on a machine that has administrator access to the Kubernetes API.

Usage steps:

- Enabling module

- Creating S3StorageClass

Enabling module

- Enable the csi-s3 module. This will result in the following actions across all cluster nodes:

  - registration of the CSI driver;

  - launch of service pods for the csi-s3 components.

kubectl apply -f - <<EOF
apiVersion: deckhouse.io/v1alpha1
kind: ModuleConfig
metadata:
  name: csi-s3
spec:
  enabled: true
  version: 1
EOF

- Wait for the module to become Ready.

kubectl get module csi-s3 -w

Creating StorageClass

To create a StorageClass, you need to use the S3StorageClass resource. Here is an example manifest for such a resource, which can be applied with kubectl apply -f:

apiVersion: storage.deckhouse.io/v1alpha1
kind: S3StorageClass
metadata:
  name: example-s3
spec:
  bucketName: example-bucket
  endpoint: https://s3.example.com
  region: us-east-1
  accessKey: <your-access-key>
  secretKey: <your-secret-key>
  maxCacheSize: 500
  insecure: false

If bucketName is empty, a separate bucket is created in S3 for each PV. If bucketName is not empty, a folder inside that bucket is created for each PV. If the specified bucket does not exist, it is created. Setting insecure: true makes the module ignore SSL certificate errors; we do not recommend this option because it is not safe.
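To consume the storage, a workload needs a PVC that references the resulting StorageClass. The following is a minimal sketch, assuming the StorageClass gets the same name as the S3StorageClass (example-s3) and that the ReadWriteMany access mode is accepted. The file name, the PVC and pod names, the image, and the requested size are hypothetical.

```shell
# Write a demo manifest: a PVC that uses the example-s3 StorageClass and a pod
# that mounts it. All object names here are hypothetical.
cat > s3-demo.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: s3-demo-pvc
spec:
  accessModes:
    - ReadWriteMany              # assumption: supported by the driver
  storageClassName: example-s3   # assumption: same name as the S3StorageClass
  resources:
    requests:
      storage: 5Gi               # required field of the PVC API
---
apiVersion: v1
kind: Pod
metadata:
  name: s3-demo-pod
spec:
  containers:
    - name: app
      image: busybox:1.36
      command: ["sh", "-c", "echo hello > /data/hello.txt && sleep 3600"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: s3-demo-pvc
EOF
echo "wrote s3-demo.yaml"
```

The manifest can then be applied with kubectl apply -f s3-demo.yaml from a machine with access to the Kubernetes API. If the pod starts and hello.txt shows up in the bucket, the volume is working end to end.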

Checking module health

You can verify the functionality of the module using the instructions here

Known limitations of GeeseFS mounter

As S3 is not a real file system, there are some limitations to consider. Depending on which mounter you use, you will get different levels of POSIX compatibility. Also, depending on which S3 storage backend you use, consistency is not always guaranteed; see https://github.com/gaul/are-we-consistent-yet#observed-consistency

You can check the POSIX compatibility matrix here: https://github.com/yandex-cloud/geesefs#posix-compatibility-matrix.

Actual limitations:

- File mode/owner/group, symbolic links, custom mtimes and special files (block/character devices, named pipes, UNIX sockets) are not supported, because standard S3 doesn’t return user metadata in listings, and reading all this metadata would require an additional HEAD request for every file in a listing, which would make listings too slow.

  - Special file support is enabled by default for Yandex S3 and disabled for others.

  - File mode/owner/group are disabled by default.

  - Custom modification times are also disabled by default: ctime, atime and mtime are always the same, and the file modification time can’t be set by the user (for example with cp --preserve, rsync -a or utimes(2)).

- Does not support hard links

- Does not support locking

- Does not support “invisible” deleted files. If an app keeps an open file descriptor after deleting the file, it will get ENOENT errors from FS operations.

- The default file size limit is 1.03 TB, achieved by splitting the file into 1000 parts of 5 MB, 1000 parts of 25 MB and 8000 parts of 125 MB. You can change the part sizes, but AWS’s own limit is 5 TB in any case.
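The 1.03 TB figure follows directly from the part layout in the last item. The arithmetic below reproduces it, taking the units as written (decimal MB, with 1 TB = 1,000,000 MB); whether GeeseFS counts in MB or MiB internally is not stated here.

```shell
# Part layout quoted above: count x size in MB.
small_mb=$(( 1000 * 5 ))      # 1000 parts of 5 MB
medium_mb=$(( 1000 * 25 ))    # 1000 parts of 25 MB
large_mb=$(( 8000 * 125 ))    # 8000 parts of 125 MB

total_mb=$(( small_mb + medium_mb + large_mb ))
total_parts=$(( 1000 + 1000 + 8000 ))

echo "total: ${total_mb} MB in ${total_parts} parts"
```

The total of 1,030,000 MB is the 1.03 TB limit, and the part count adds up to 10,000, which matches the S3 limit on parts per multipart upload. This is why raising the limit means using larger parts rather than more of them.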

Known bugs

- The storage request size in a PVC is not reflected in the created buckets.

- df -h shows 1 petabyte of mount size, and the used field does not change during usage.

- CSI doesn’t check credentials during mount: the pod will have Running status, and the PV and PVC will be Bound even if the credentials are wrong. An attempt to access the mounted folder in the pod will result in a pod crash.
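Because credentials are not validated at mount time, it can help to check them before creating the S3StorageClass. The sketch below is one possible way to do that and is not part of the module: it assumes the AWS CLI is installed and that the bucket already exists. The function name check_s3_credentials is hypothetical. It sends a HeadBucket request to the endpoint using the keys from the standard AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables.

```shell
# check_s3_credentials ENDPOINT BUCKET
# Hypothetical helper: returns 0 if a HeadBucket request succeeds with the
# credentials from the environment, non-zero otherwise.
check_s3_credentials() {
  endpoint="$1"
  bucket="$2"
  if aws s3api head-bucket --endpoint-url "$endpoint" --bucket "$bucket" >/dev/null 2>&1; then
    echo "credentials accepted for bucket '${bucket}'"
  else
    echo "cannot access bucket '${bucket}' at ${endpoint}: check keys, endpoint and bucket name" >&2
    return 1
  fi
}

# Example usage (placeholders as in the S3StorageClass example):
#   export AWS_ACCESS_KEY_ID='<your-access-key>'
#   export AWS_SECRET_ACCESS_KEY='<your-secret-key>'
#   check_s3_credentials https://s3.example.com example-bucket
```

A failure can also mean that the bucket does not exist yet, so the result is a hint rather than proof that the keys are wrong.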