Available in editions: CE, BE, SE, SE+, EE

Module lifecycle stage: General Availability

How to use extended-monitoring-exporter

To enable the export of extended monitoring metrics, attach the extended-monitoring.deckhouse.io/enabled label to the namespace. You can do this by:

- adding the appropriate Helm chart to the project (recommended);
- adding it to .gitlab-ci.yml (kubectl patch/create);
- attaching it manually (d8 k label namespace my-app-production extended-monitoring.deckhouse.io/enabled="");
- configuring it via the namespace-configurator module.

Any of these methods enables the default metrics (plus any custom metrics with the threshold.extended-monitoring.deckhouse.io/ prefix) for all supported Kubernetes objects in the target namespace. Note that monitoring is enabled automatically for a number of non-namespaced Kubernetes objects described below.

You can also add labels of the form threshold.extended-monitoring.deckhouse.io/something with a custom value to Kubernetes objects, e.g., d8 k label pod test threshold.extended-monitoring.deckhouse.io/disk-inodes-warning=30. In this case, the label value replaces the default one.

If you want to override the default thresholds for all objects in a namespace, you can set the threshold.extended-monitoring.deckhouse.io/ label at the namespace level. For example:

d8 k label namespace my-app-production threshold.extended-monitoring.deckhouse.io/5xx-warning=20

This will replace the default value for all objects in the namespace that do not already have this label set.

You can disable monitoring for an individual object by adding the extended-monitoring.deckhouse.io/enabled=false label to it. This also disables the default threshold labels and the label-based alerts for that object.
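The precedence described above (the object's own label, then the namespace-level label, then the module default, with enabled=false switching the object off) can be sketched as a small Python function. This is a minimal illustration under stated assumptions, not the module's code: the function name resolve_threshold and its plain-dict inputs are hypothetical, and it models a namespaced object, whose namespace must carry the enabled label.

```python
# Hypothetical sketch of threshold resolution for a namespaced object.
# The label keys come from the documentation; the function is illustrative only.
from typing import Mapping, Optional

ENABLED_LABEL = "extended-monitoring.deckhouse.io/enabled"
THRESHOLD_PREFIX = "threshold.extended-monitoring.deckhouse.io/"


def resolve_threshold(
    name: str,
    object_labels: Mapping[str, str],
    namespace_labels: Mapping[str, str],
    defaults: Mapping[str, int],
) -> Optional[int]:
    """Return the effective threshold, or None if the object is not monitored."""
    # Namespaced objects are monitored only when the namespace has the label.
    if ENABLED_LABEL not in namespace_labels:
        return None
    # enabled=false on the object switches off its monitoring and thresholds.
    if object_labels.get(ENABLED_LABEL) == "false":
        return None
    key = THRESHOLD_PREFIX + name
    # The object's own label takes precedence over the namespace-level label.
    if key in object_labels:
        return int(object_labels[key])
    # The namespace-level label applies to objects without their own label.
    if key in namespace_labels:
        return int(namespace_labels[key])
    return defaults[name]


if __name__ == "__main__":
    ns = {ENABLED_LABEL: "", THRESHOLD_PREFIX + "disk-inodes-warning": "80"}
    pod = {THRESHOLD_PREFIX + "disk-inodes-warning": "30"}
    print(resolve_threshold("disk-inodes-warning", pod, ns, {"disk-inodes-warning": 85}))
```

With these inputs the Pod's own label wins, so the sketch resolves the threshold to 30 rather than the namespace value of 80 or the default of 85.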

Standard labels and supported Kubernetes objects

Below is the list of labels used in Prometheus Rules and their default values.

Note that all labels start with the threshold.extended-monitoring.deckhouse.io/ prefix. The label value is a number that sets the alert trigger threshold.

For example, the label threshold.extended-monitoring.deckhouse.io/5xx-warning: "5" on the Ingress resource changes the alert threshold from 10% (default) to 5%.

Non-namespaced Kubernetes objects

Non-namespaced Kubernetes objects do not require any labels on a namespace: monitoring for them is enabled by default when the module is enabled.

Node

| Label | Type | Default value |
|---|---|---|
| disk-bytes-warning | int (percent) | 70 |
| disk-bytes-critical | int (percent) | 80 |
| disk-inodes-warning | int (percent) | 90 |
| disk-inodes-critical | int (percent) | 95 |
| load-average-per-core-warning | int | 3 |
| load-average-per-core-critical | int | 10 |
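Each metric in the Node table has a warning/critical pair. The sketch below shows how such a pair could map a measured value to an alert severity. It is an illustration only: the names severity, load_average_per_core and NODE_DEFAULTS are hypothetical, and the strict "greater than" comparison is an assumption, since the exact comparison used by the module's Prometheus rules is not stated here.

```python
# Hypothetical sketch: map a measured value to a severity using a
# warning/critical threshold pair taken from the Node table defaults.
from typing import Optional

NODE_DEFAULTS = {
    "disk-bytes": (70, 80),             # percent of disk space used
    "disk-inodes": (90, 95),            # percent of inodes used
    "load-average-per-core": (3, 10),   # load average divided by CPU cores
}


def severity(value: float, warning: float, critical: float) -> Optional[str]:
    """Return "critical", "warning", or None (assumes a strict > comparison)."""
    if value > critical:
        return "critical"
    if value > warning:
        return "warning"
    return None


def load_average_per_core(load_average: float, cores: int) -> float:
    """Normalize a node's load average by its number of CPU cores."""
    return load_average / cores


if __name__ == "__main__":
    print(severity(75, *NODE_DEFAULTS["disk-bytes"]))
    print(severity(load_average_per_core(24.0, 4), *NODE_DEFAULTS["load-average-per-core"]))
```

For example, a load average of 24 on a 4-core node gives 6 per core, which is above the default warning threshold of 3 but below the critical threshold of 10.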

Caution! These labels do not apply to the imagefs (/var/lib/docker by default) and nodefs (/var/lib/kubelet by default) volumes. The thresholds for these volumes are configured automatically according to the kubelet's eviction thresholds. The default values are available here; for more info, see the exporter.

Namespaced Kubernetes objects

Pod

| Label | Type | Default value |
|---|---|---|
| disk-bytes-warning | int (percent) | 85 |
| disk-bytes-critical | int (percent) | 95 |
| disk-inodes-warning | int (percent) | 85 |
| disk-inodes-critical | int (percent) | 90 |

Ingress

| Label | Type | Default value |
|---|---|---|
| 5xx-warning | int (percent) | 10 |
| 5xx-critical | int (percent) | 20 |

Deployment

| Label | Type | Default value |
|---|---|---|
| replicas-not-ready | int (count) | 0 |

The threshold is the number of unavailable replicas tolerated in addition to maxUnavailable: the alert is triggered when the number of unavailable replicas exceeds maxUnavailable by more than the specified amount. For example, if replicas-not-ready is 0, the alert is triggered as soon as the number of unavailable replicas is greater than maxUnavailable. If replicas-not-ready is 1, the alert is triggered when the number of unavailable replicas is greater than maxUnavailable + 1. This way, you can fine-tune the parameter for specific Deployments (that may be unavailable) in a namespace with extended monitoring enabled and avoid excessive alerts.
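The trigger condition above reduces to a single comparison. The Python sketch below shows it together with a helper that turns maxUnavailable into a replica count; the function names are hypothetical and this is not the module's implementation. The percentage handling follows Kubernetes, which rounds the percentage form of maxUnavailable down, but how the module itself obtains maxUnavailable is an assumption here.

```python
# Hypothetical sketch of the replicas-not-ready trigger condition.
from typing import Union


def resolve_max_unavailable(value: Union[int, str], replicas: int) -> int:
    """Convert maxUnavailable (an int or a string such as "25%") to a count.

    Kubernetes rounds the percentage form of maxUnavailable down.
    """
    if isinstance(value, str) and value.endswith("%"):
        return replicas * int(value[:-1]) // 100
    return int(value)


def replicas_alert_fires(unavailable: int, max_unavailable: int, replicas_not_ready: int = 0) -> bool:
    """True when unavailable replicas exceed maxUnavailable by more than the threshold."""
    return unavailable > max_unavailable + replicas_not_ready


if __name__ == "__main__":
    limit = resolve_max_unavailable("25%", 10)   # 2 replicas for 10 replicas at 25%
    print(replicas_alert_fires(3, limit))        # default threshold of 0
    print(replicas_alert_fires(3, limit, 1))     # one extra replica tolerated
```

With 10 replicas and maxUnavailable set to 25%, three unavailable replicas trigger the alert at the default threshold of 0 but not when replicas-not-ready is 1.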

StatefulSet

| Label | Type | Default value |
|---|---|---|
| replicas-not-ready | int (count) | 0 |

The threshold implies the number of unavailable replicas in addition to maxUnavailable (see the comments on Deployment).

DaemonSet

| Label | Type | Default value |
|---|---|---|
| replicas-not-ready | int (count) | 0 |

The threshold implies the number of unavailable replicas in addition to maxUnavailable (see the comments on Deployment).

CronJob

Note that only disabling monitoring with the extended-monitoring.deckhouse.io/enabled=false label is supported.

How does it work?

The module exports specific Kubernetes object labels to Prometheus, which lets you improve Prometheus rules by adding thresholds for triggering alerts. For example, you can use the metrics this module exports to replace "magic" constants in rules.

Before:

(kube_statefulset_status_replicas - kube_statefulset_status_replicas_ready) > 1

After:

(kube_statefulset_status_replicas - kube_statefulset_status_replicas_ready) > on (namespace, statefulset) (max by (namespace, statefulset) (extended_monitoring_statefulset_threshold{threshold="replicas-not-ready"}))
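To make the "After" rule concrete, the Python sketch below models its semantics on in-memory samples: a label selector, a max by aggregation, and a filtering > comparison with on (...) matching, which keeps left-hand samples that exceed the threshold with the same namespace and statefulset. This is only a teaching model, not how Prometheus evaluates queries; the helper names and sample values are hypothetical, and the left-hand vector (replicas minus ready replicas) is supplied directly as input.

```python
# Hypothetical in-memory model of the "After" rule; samples are (labels, value) pairs.
from typing import Dict, List, Sequence, Tuple

Labels = Dict[str, str]
Sample = Tuple[Labels, float]
Key = Tuple[str, ...]


def select(samples: List[Sample], **matchers: str) -> List[Sample]:
    """Keep samples whose labels equal every matcher, like {threshold="..."}."""
    return [(l, v) for l, v in samples if all(l.get(k) == m for k, m in matchers.items())]


def max_by(samples: List[Sample], keys: Sequence[str]) -> Dict[Key, float]:
    """Aggregate to one value per label combination, like max by (...)."""
    out: Dict[Key, float] = {}
    for labels, value in samples:
        key = tuple(labels.get(k, "") for k in keys)  # a missing label counts as ""
        out[key] = max(out.get(key, value), value)
    return out


def greater_on(left: List[Sample], right: Dict[Key, float], keys: Sequence[str]) -> List[Sample]:
    """Keep left samples above the right value with the same key, like > on (...)."""
    result = []
    for labels, value in left:
        key = tuple(labels.get(k, "") for k in keys)
        if key in right and value > right[key]:
            result.append((labels, value))
    return result
```

In this model, each StatefulSet is compared with its own threshold instead of the fixed constant 1 from the "Before" rule, so a StatefulSet with replicas-not-ready set to 1 fires only when two or more replicas are not ready.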

The module has 58 alerts.

The module is enabled by default in the following bundles: Default, Managed.

The module is disabled by default in the Minimal bundle.

Requirements

Versions of other modules:

- prometheus: any version.

Conversions

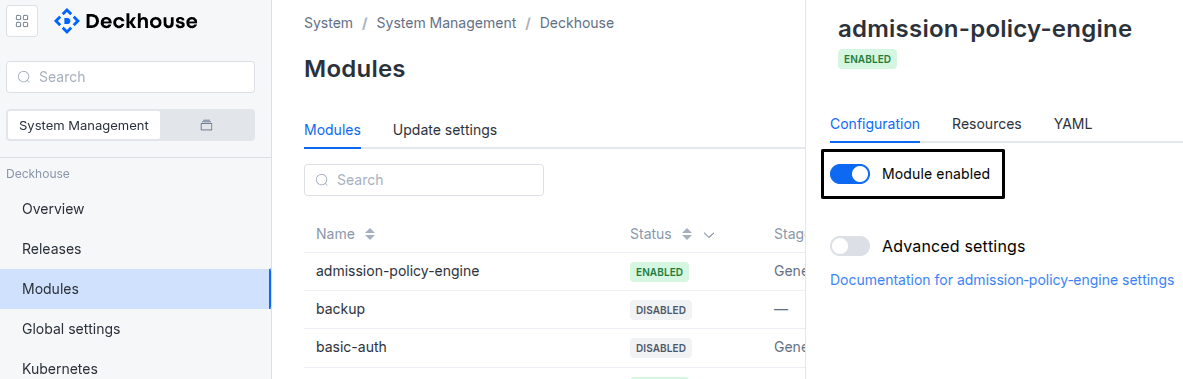

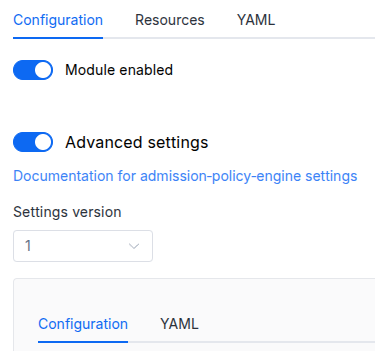

The module is configured using the ModuleConfig resource, whose schema contains a version number. When you apply a ModuleConfig with an old schema version in a cluster, it is transformed automatically. To update the ModuleConfig schema version manually, complete the following steps sequentially for each version:

- Updates from version 1 to 2:

  If the .imageAvailability.skipRegistryCertVerification field is set to true, add .imageAvailability.registry.tlsConfig.insecureSkipVerify=true. Then delete the skipRegistryCertVerification field from the object. If the .imageAvailability object becomes empty after this change, delete it.
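The manual steps for the version 1 to 2 update can be expressed as a small transformation on the module settings. The Python sketch below is an illustration, not the automatic conversion the platform performs: the function name convert_settings_v1_to_v2 is hypothetical, and it assumes the .imageAvailability paths are relative to the ModuleConfig settings object passed in as a plain dict.

```python
# Hypothetical helper applying the v1 -> v2 steps to a ModuleConfig settings dict.
# Assumption: paths such as .imageAvailability.skipRegistryCertVerification are
# relative to the dict passed in.
import copy
from typing import Any, Dict


def convert_settings_v1_to_v2(settings: Dict[str, Any]) -> Dict[str, Any]:
    """Return a converted copy; the input dict is left unchanged."""
    result = copy.deepcopy(settings)
    image = result.get("imageAvailability")
    if not isinstance(image, dict) or "skipRegistryCertVerification" not in image:
        return result
    # Step 1: carry a true value over to the new TLS setting.
    if image["skipRegistryCertVerification"] is True:
        registry = image.setdefault("registry", {})
        registry.setdefault("tlsConfig", {})["insecureSkipVerify"] = True
    # Step 2: delete the old field.
    del image["skipRegistryCertVerification"]
    # Step 3: delete imageAvailability if the change left it empty.
    if not image:
        del result["imageAvailability"]
    return result
```

Working on a deep copy keeps the original settings intact, which makes it easy to compare the old and new objects before applying the converted ModuleConfig.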

Parameters

Schema version: 2

- settings (object)

- settings.certificates (object)

  Settings for monitoring the certificates in the Kubernetes cluster.

- settings.certificates.exporterEnabled (boolean)

  Enables x509-certificate-exporter.

  Default: false

- settings.events (object)

  Settings for monitoring the events in the Kubernetes cluster.

- settings.events.exporterEnabled (boolean)

  Enables eventsExporter.

  Default: false

- settings.events.severityLevel (string)

  Whether to expose only crucial events.

  Default: OnlyWarnings

  Allowed values: All, OnlyWarnings

- settings.imageAvailability (object)

  Settings for monitoring the availability of images in the cluster.

- settings.imageAvailability.exporterEnabled (boolean)

  Enables imageAvailabilityExporter.

  Default: true

- settings.imageAvailability.forceCheckDisabledControllers (array of strings)

  A list of controller kinds for which the image is forcibly checked, even when workloads are disabled or suspended. Specify All to check all controller kinds.

  Allowed values of array elements: Deployment, StatefulSet, DaemonSet, CronJob, All

  Example: forceCheckDisabledControllers: ["Deployment", "StatefulSet"]

- settings.imageAvailability.ignoredImages (array of strings)

  A list of images to ignore when checking for their presence in the registry, e.g., alpine:3.12 or quay.io/test/test:v1.1.

  Example: ignoredImages: ["alpine:3.10", "alpine:3.2"]

- settings.imageAvailability.mirrors (array of objects)

  List of mirrors for container registries.

  Example: mirrors: [{original: docker.io, mirror: mirror.gcr.io}, {original: internal-registry.com, mirror: mirror.internal-registry.com}]

- settings.imageAvailability.mirrors.mirror (string)

  Required value.

- settings.imageAvailability.mirrors.original (string)

  Required value.

- settings.imageAvailability.registry (object)

  Connection settings for the container registry.

- settings.imageAvailability.registry.scheme (string)

  Container registry access scheme.

  Default: HTTPS

  Allowed values: HTTP, HTTPS

- settings.imageAvailability.registry.tlsConfig (object)

  TLS settings for connecting to the container registry.

- settings.imageAvailability.registry.tlsConfig.ca (string)

  Root CA certificate used to validate the container registry's HTTPS certificate (if self-signed certificates are used).

- settings.imageAvailability.registry.tlsConfig.insecureSkipVerify (boolean)

  Whether to skip verification of the container registry certificate.

  Default: false

- settings.nodeSelector (object)

  The same as the spec.nodeSelector parameter of Kubernetes pods. If the parameter is omitted or false, it is determined automatically.

- settings.tolerations (array of objects)

  The same as the spec.tolerations parameter of Kubernetes pods. If the parameter is omitted or false, it is determined automatically.

- settings.tolerations.effect (string)
- settings.tolerations.key (string)
- settings.tolerations.operator (string)
- settings.tolerations.tolerationSeconds (integer)
- settings.tolerations.value (string)
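The forceCheckDisabledControllers and ignoredImages settings together decide whether a workload's image is checked. The Python sketch below is a hedged reading of their descriptions, not the exporter's code: the Workload class, its disabled flag (assumed to mean "scaled to zero or suspended") and should_check_image are hypothetical, and matching ignoredImages entries as exact image strings is an assumption.

```python
# Hypothetical decision helper for imageAvailability settings; not the exporter's code.
from dataclasses import dataclass
from typing import Sequence

ALLOWED_KINDS = {"Deployment", "StatefulSet", "DaemonSet", "CronJob", "All"}


@dataclass
class Workload:
    kind: str       # controller kind, e.g. "Deployment"
    image: str      # container image reference
    disabled: bool  # assumed meaning: scaled to zero or suspended


def should_check_image(
    workload: Workload,
    force_check_disabled_controllers: Sequence[str],
    ignored_images: Sequence[str],
) -> bool:
    """Decide whether the workload's image is checked against the registry."""
    unknown = set(force_check_disabled_controllers) - ALLOWED_KINDS
    if unknown:
        raise ValueError(f"unsupported controller kinds: {sorted(unknown)}")
    # Assumption: ignoredImages entries are matched as exact image strings.
    if workload.image in ignored_images:
        return False
    # Active workloads are checked regardless of forceCheckDisabledControllers.
    if not workload.disabled:
        return True
    # Disabled or suspended workloads are checked only when their kind is forced.
    forced = force_check_disabled_controllers
    return "All" in forced or workload.kind in forced
```

Validating the list against the allowed values first mirrors the schema constraint, so a typo such as "Job" fails loudly instead of silently disabling the forced check.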