Available in some commercial editions: EE

Deckhouse supports working with S3-based object storage, enabling its use in Kubernetes for storing data as volumes. The GeeseFS file system is used, running via FUSE on top of S3, allowing S3 storage to be mounted as standard file systems.

This page provides instructions for setting up S3 storage in Deckhouse, including connection, creating a StorageClass, and verifying system functionality.

System Requirements

- Kubernetes version 1.17+ with support for privileged containers.

- A configured S3 storage with available access keys.

- Sufficient memory on nodes.

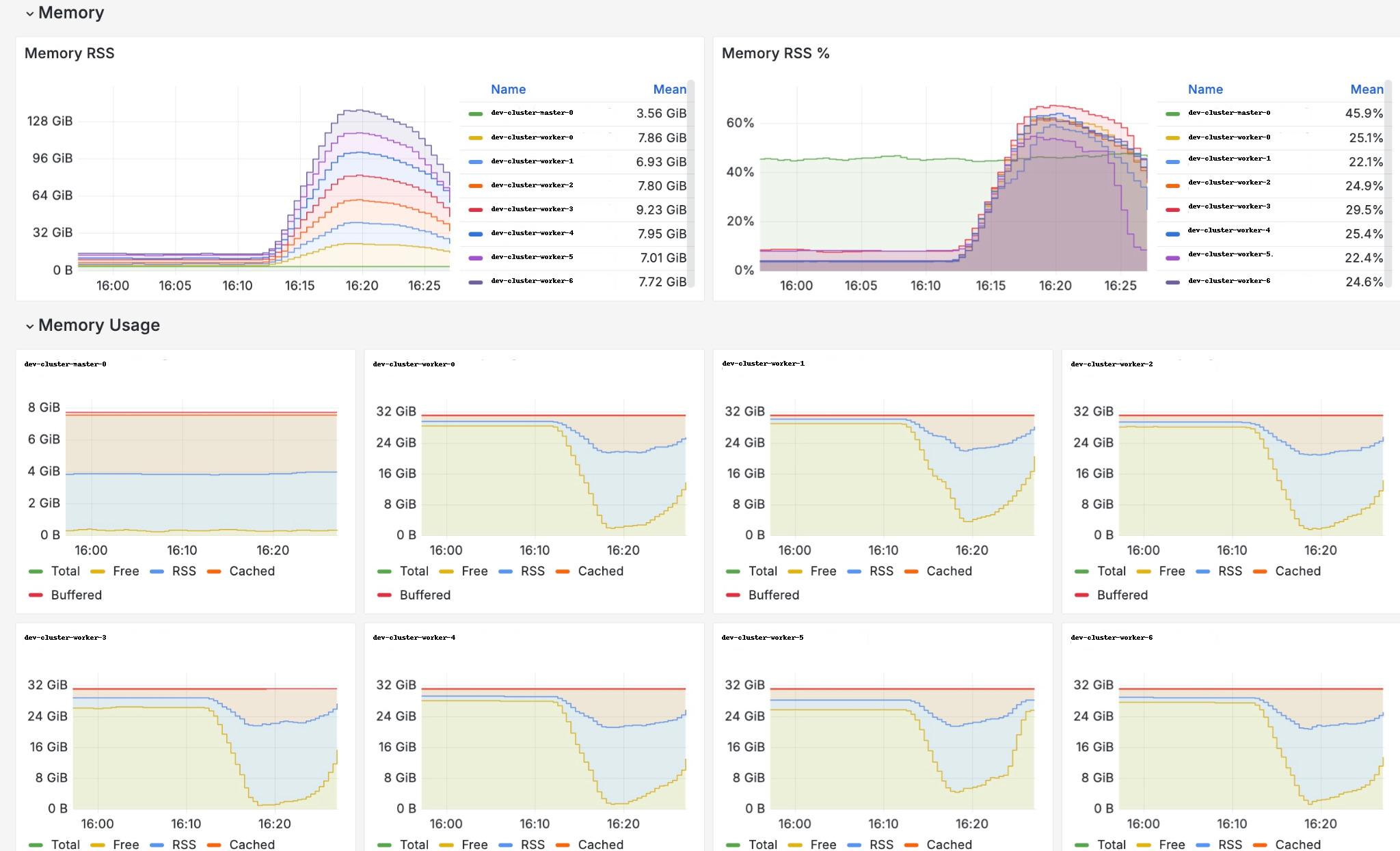

GeeseFSuses caching for working with files retrieved from S3. The cache size is set using themaxCacheSizeparameter in S3StorageClass. Stress test results: 7 nodes, 600 pods and PVCs,maxCacheSize= 500 MB, each pod writes 300 MB, reads it, and then terminates.

Setup and Configuration

Note that all commands must be executed on a machine with administrative privileges in the Kubernetes API.

Required steps:

- Enable the module;

- Create S3StorageClass.

Enabling the module

To support working with S3 storage, enable the csi-s3 module, which allows the creation of StorageClass and Secret in Kubernetes using custom resources like S3StorageClass.

After enabling the module, the following will occur on cluster nodes:

- CSI driver registration;

- Launch of

csi-s3service pods and creation of necessary components.

d8 k apply -f - <<EOF

apiVersion: deckhouse.io/v1alpha1

kind: ModuleConfig

metadata:

name: csi-s3

spec:

enabled: true

version: 1

EOF

Wait for the module to transition to the Ready state.

d8 k get module csi-s3 -w

Creating StorageClass

The module is configured via the S3StorageClass manifest. Below is an example configuration:

apiVersion: storage.deckhouse.io/v1alpha1

kind: S3StorageClass

metadata:

name: example-s3

spec:

bucketName: example-bucket

endpoint: https://s3.example.com

region: us-east-1

accessKey: <your-access-key>

secretKey: <your-secret-key>

maxCacheSize: 500

insecure: false

If bucketName is empty then bucket in S3 will be created for each PV. If bucketName is not empty then folder inside the bucket will be created for each PV. If the specifies bucket does not exist it will be created.

Checking module health

To verify the module’s functionality, check the status of the pods in the d8-csi-s3 namespace. Use the following command:

d8 k -n d8-csi-s3 get pod -owide -w

All pods should have the status Running or Completed and should be deployed across all nodes.

Known limitations of GeeseFS mounter

S3 is not a traditional file system, so it comes with several limitations. POSIX compatibility depends on the mounting module used and the specific S3 provider. Some storage backends may not guarantee data consistency. More details can be found here.

You can check the POSIX compatibility matrix here.

Key limitations:

- File permissions, symbolic links, user-defined

mtimes, and special files (block/character devices, named pipes, UNIX sockets) are not supported. - Special file support is enabled by default for

Yandex S3but disabled for other providers. - File permissions are disabled by default.

- User-defined modification times are also disabled:

ctime,atime, andmtimeare always the same. - The file modification time cannot be set manually (e.g., using

cp --preserve,rsync -a, orutimes(2)). - Hard links are not supported.

- File locking is not supported.

- “Invisible” deleted files are not retained. If an application keeps an open file descriptor after deleting a file, it will receive

ENOENTerrors when trying to access it. - The default file size limit is 1.03 TB, achieved through chunking: 1000 parts of 5 MB, 1000 parts of 25 MB, and 8000 parts of 125 MB. The chunk size can be adjusted, but AWS enforces a maximum file size of 5 TB.

Known bugs

- The requested PVC volume size does not affect the created S3 bucket.

df -halways reports the mounted storage size as 1 PB, anduseddoes not change during usage.- The CSI driver does not validate storage access credentials. Even with incorrect keys, the pod will remain in

Runningstatus, and PersistentVolume and PersistentVolumeClaim will beBound. Any attempt to access the mounted directory within the pod will result in the pod restarting.